Introduction

Be the change that you wish to see in the world.

- Mohandas Gandhi

Goals of this book

| This book covers |
|---|
| Best practices and tools for the trade |
| Methods for maintaining code quality |
| Options in DevOps |
| Details on change management and governance |
| Org management tools |
| Version Control |
| Chrome Extension |
| VS Code Extension |

Request to the readers

Please plant fruit trees like King Ashoka did:

“Along roads I have had wells dug and trees planted for the benefit of humans and animals”.

“Along roads I have had banyan trees planted so that they can give shade to animals and men, and I have had mango groves planted.”

Images used are from the Chromecast source.

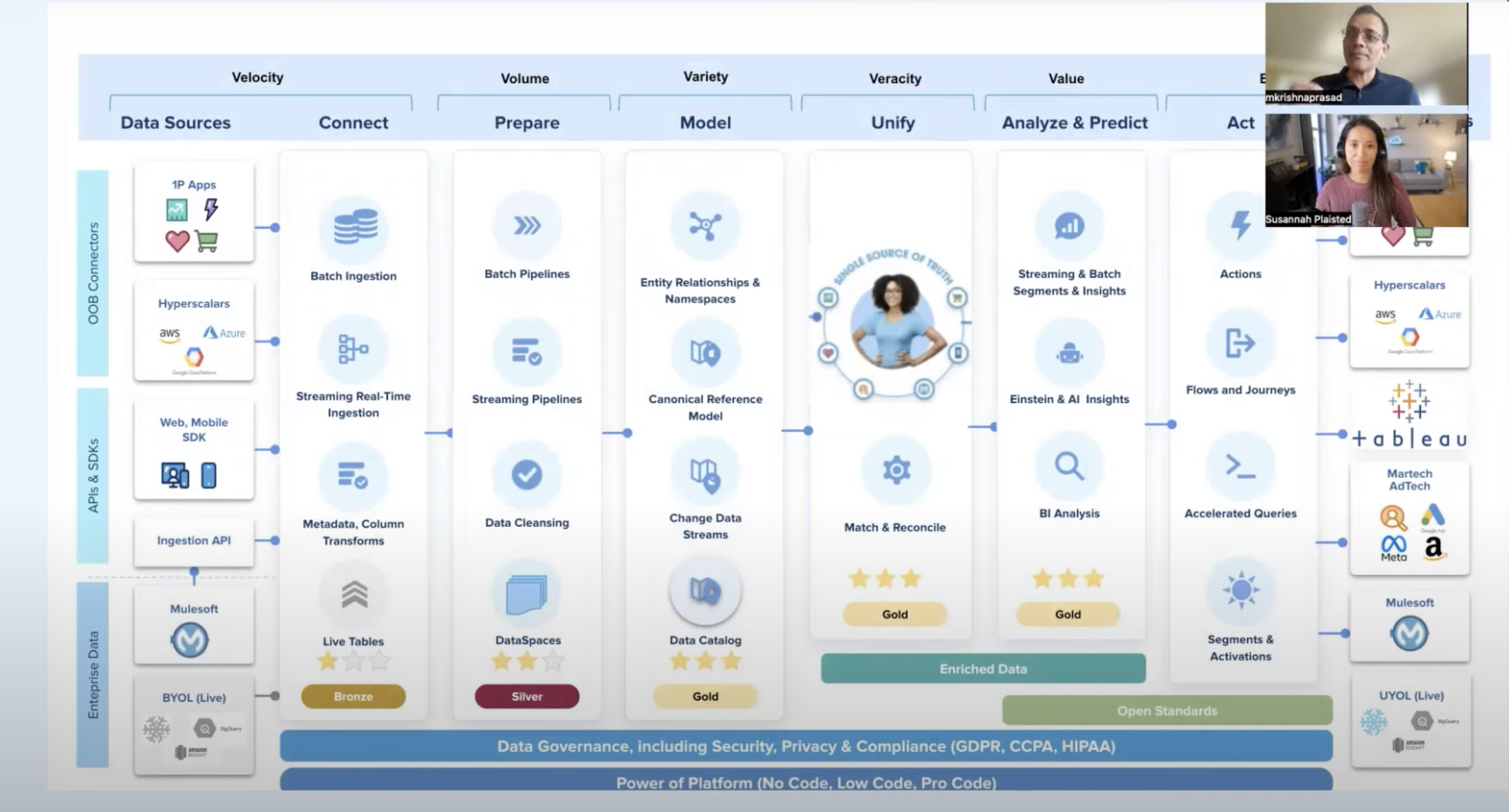

Data Governance

Cost of doing nothing

References

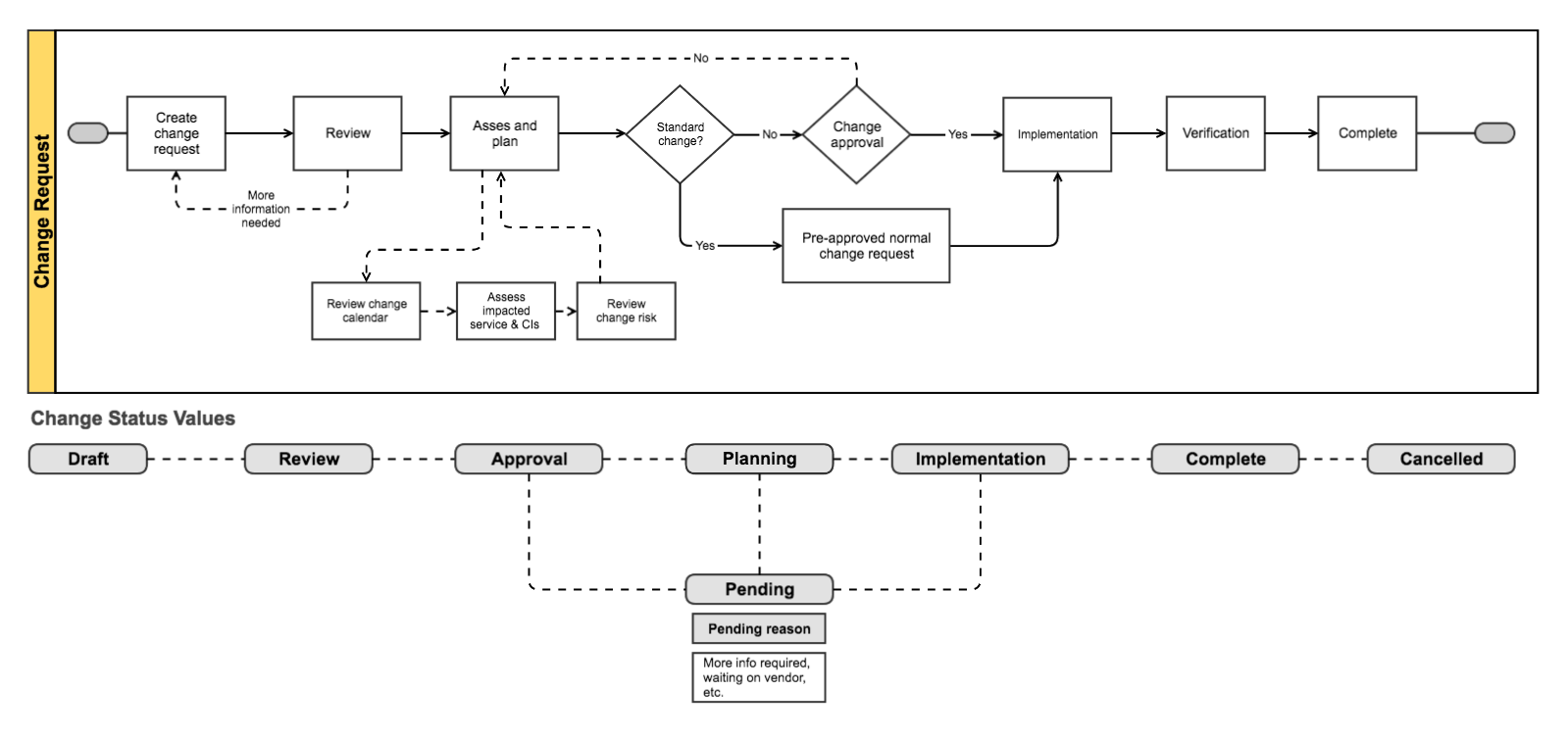

Change Management

- Optimizing change management is key to the success of any Salesforce implementation.

- Changes are routine whenever you bring new features or enhancements into your implementation.

```mermaid
graph TD;
    A["Find the need for the change"] --> B["Define the change scope"];
    B --> C["Assign a priority to the change you are bringing in.<br/>How does it enhance or affect the current implementation?"];
    C --> D["Submit the change to the Change Approval Board (CAB)"];
    D --> E["CAB approves the change"];
    E --> F["Perform the change"];
    F --> G["Regression test the change"];
    G --> H["Change Manager approves the implemented change.<br/>Records whether the change was successful,<br/>timely, accurately estimated, within budget, and other details"];
```

Using Atlassian Change Management

- A project member requests a change, providing details such as the affected systems, possible risks, and expected implementation.

- The change manager or peers assess whether the change is likely to succeed. They may ask for more information at this step.

- After review, the team plans how to put the change in place. They record details about:
  - the expected outcomes
  - the resources needed
  - the timeline
  - the testing requirements
  - undo: ways to roll back the change

- Depending on the type of change and its risk, a change approval board (CAB) may need to review the plan.

- The team implements the change, documenting its procedures and results.

- The change manager reviews and closes the implemented change, noting whether it was successful, timely, accurately estimated, within budget, and other details.

Roles

Change Manager (CM)

- A Change Manager (CM) is a trusted advisor who addresses the people side of change.

- A CM’s primary goal is to help define a clear vision for the change and to help design a plan that drives faster adoption of, and greater utilization of and proficiency with, the changes that affect an organization or business.

Project Manager

- Primary point of contact

- Manages the project plan and organization, and delivery within budget and schedule

- Escalation point for the project team and stakeholders

- Monitors project alignment and goals

Product Owner

- Primary point of contact for the development team

- Defines product roadmap and vision

- Manages product backlog

- Oversees and evaluates development stages and progress

References

Videos

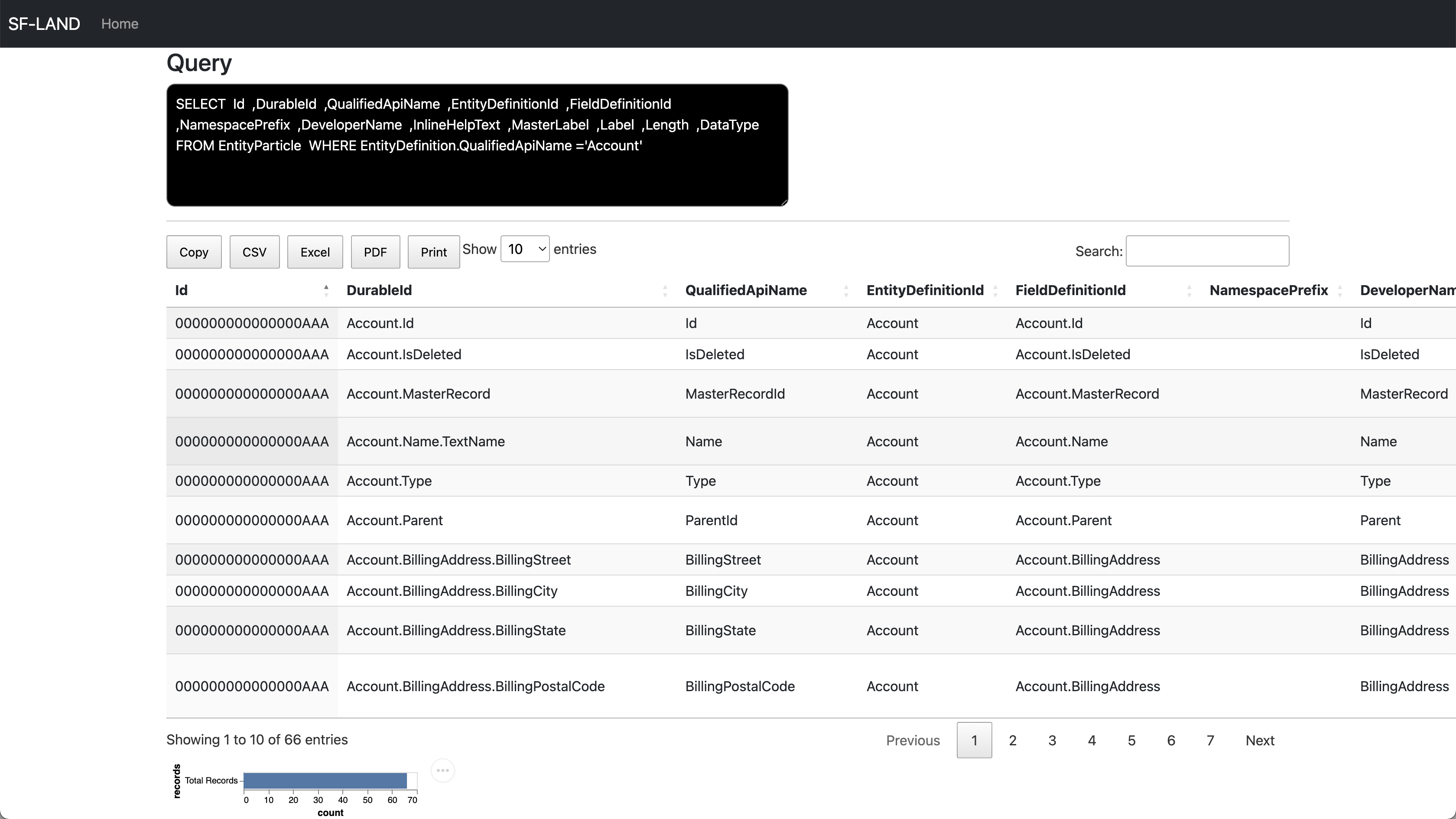

Data Model

Topics

- Tools for extracting Org metadata

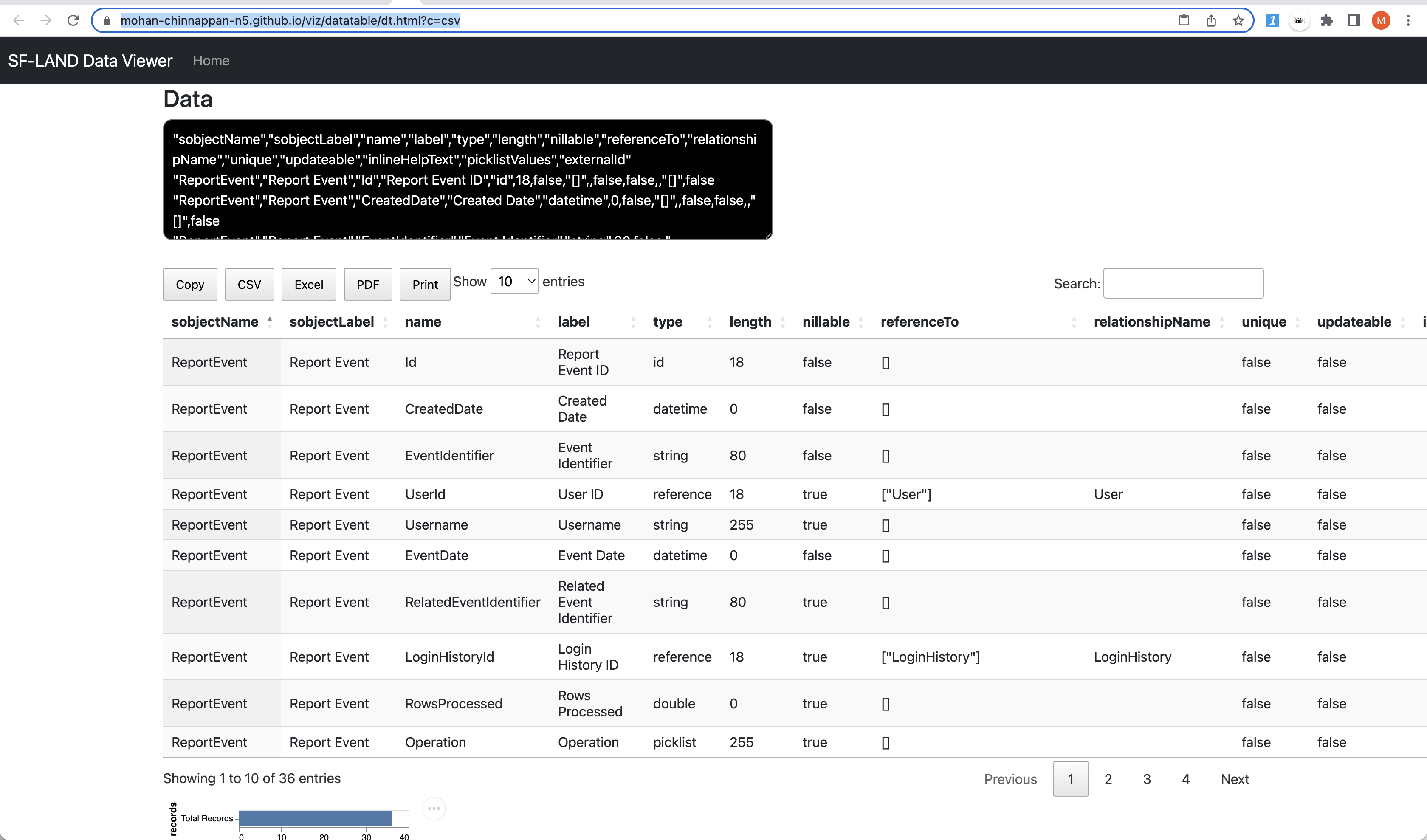

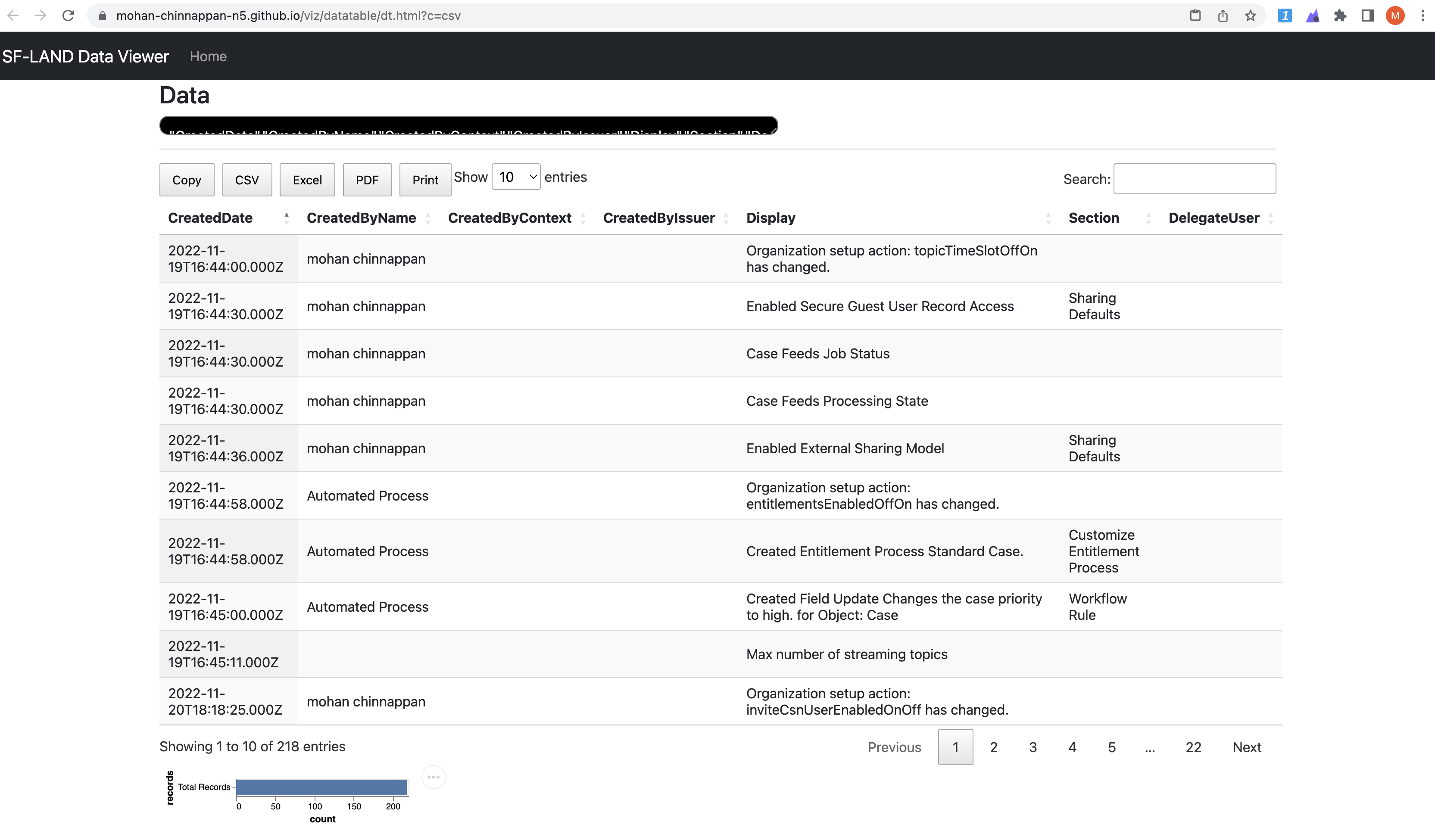

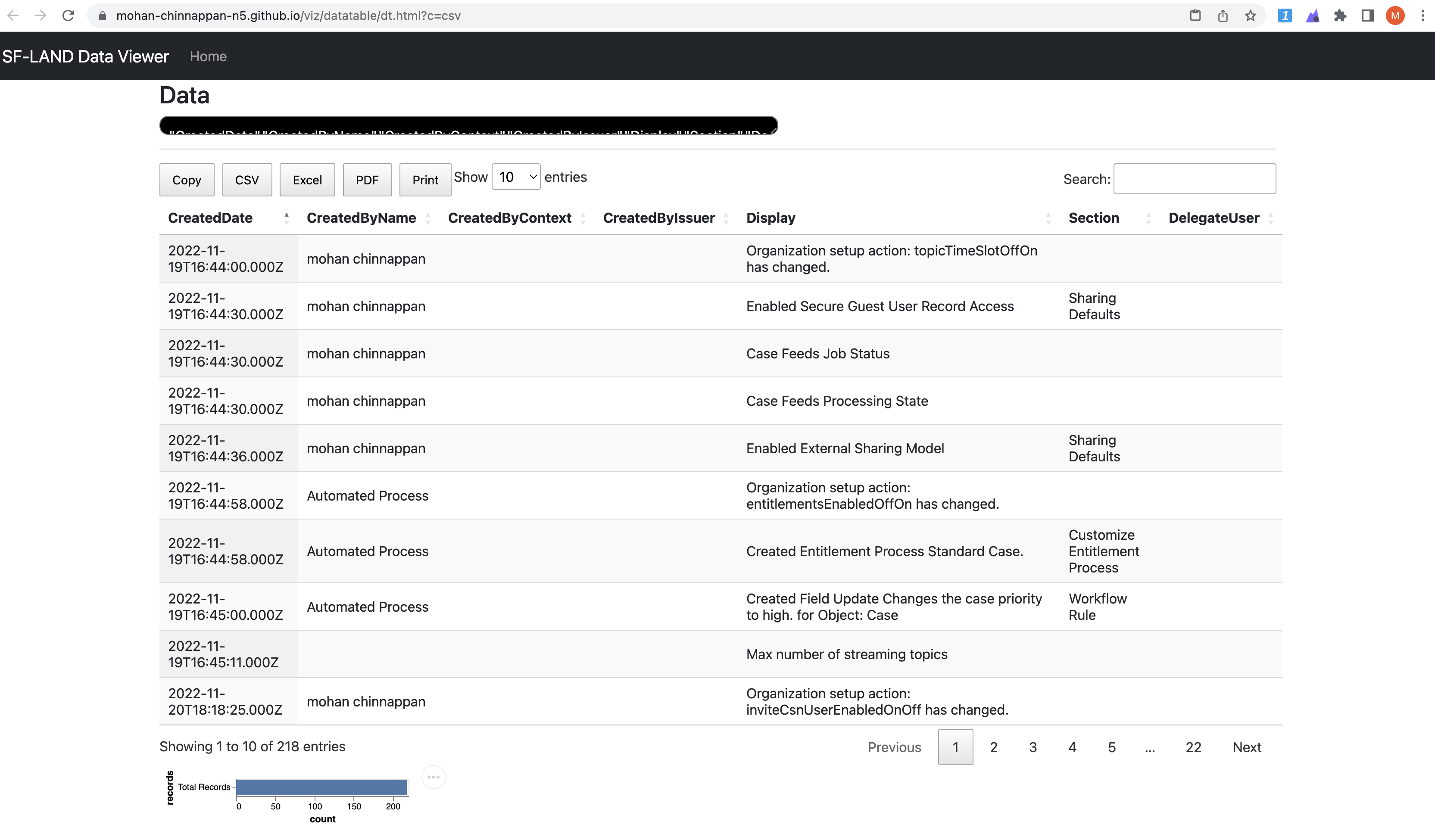

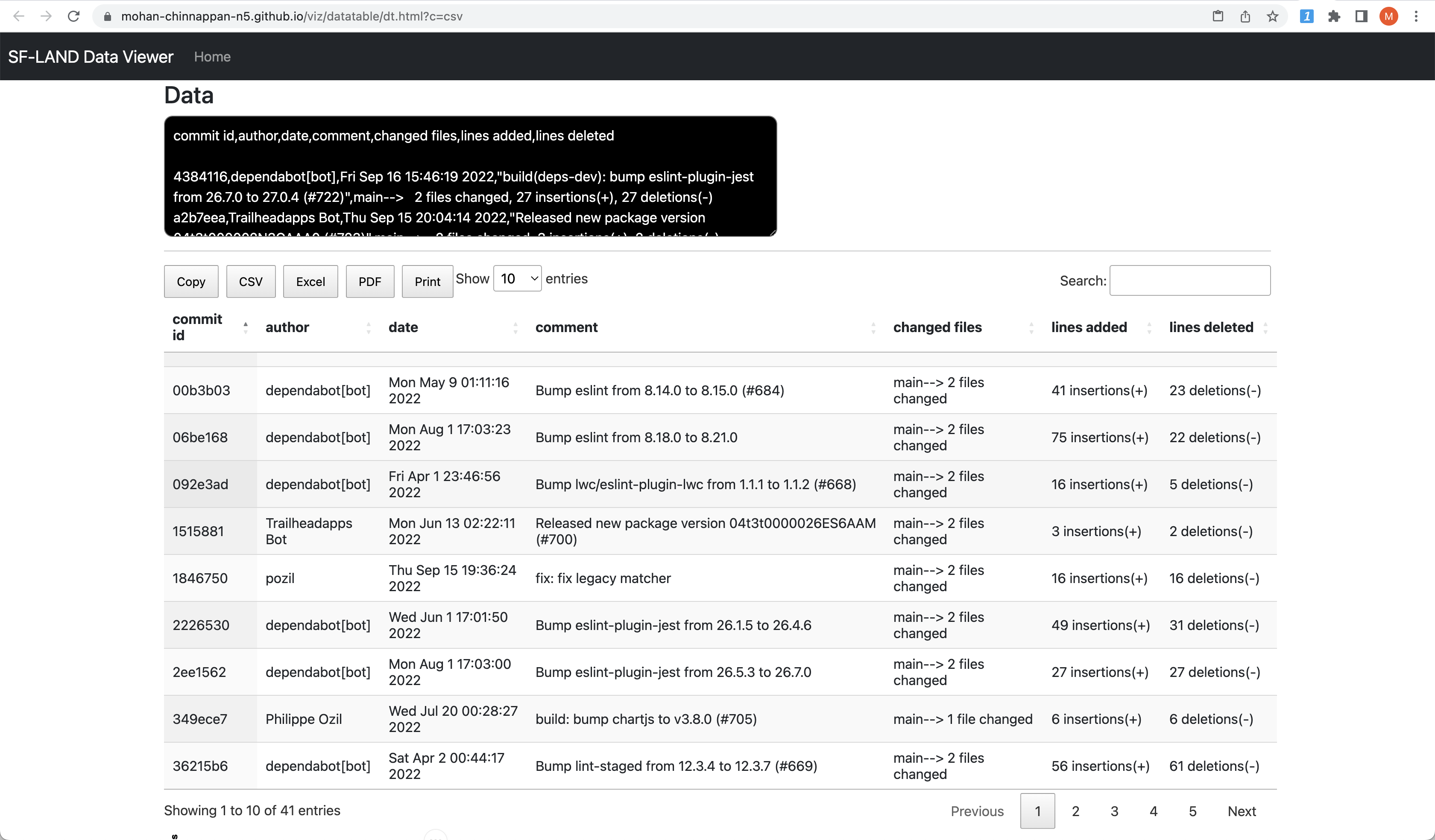

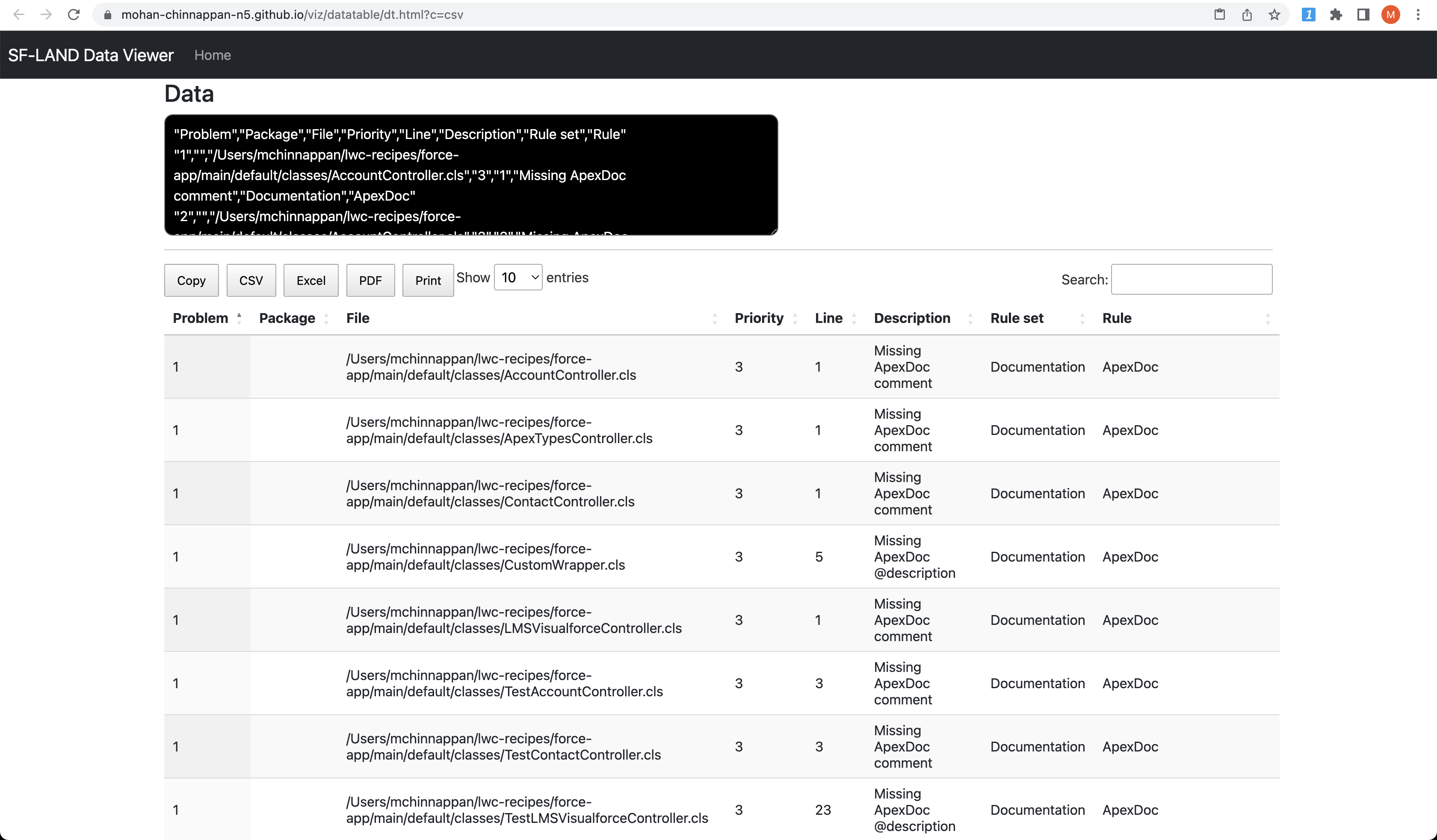

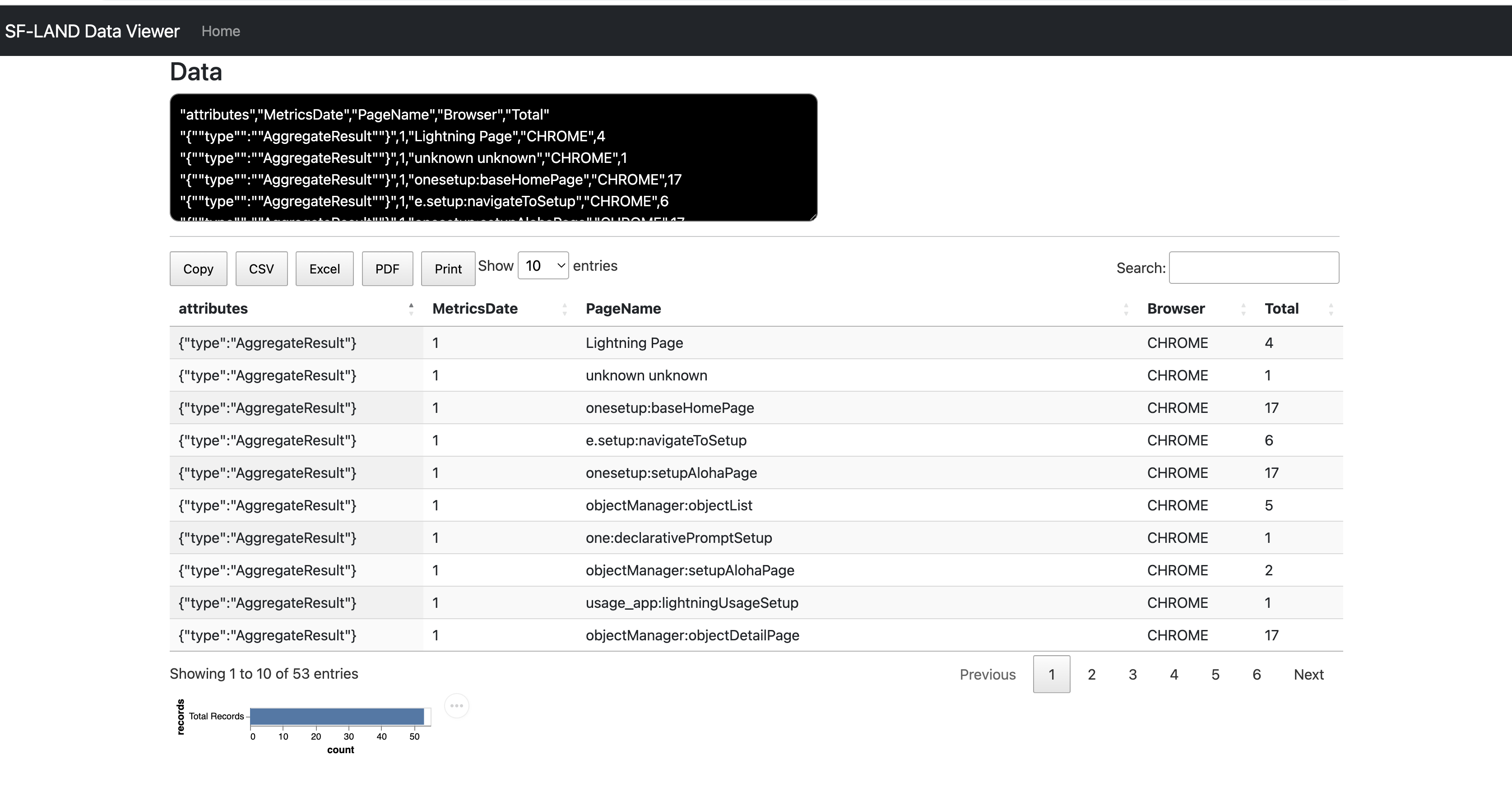

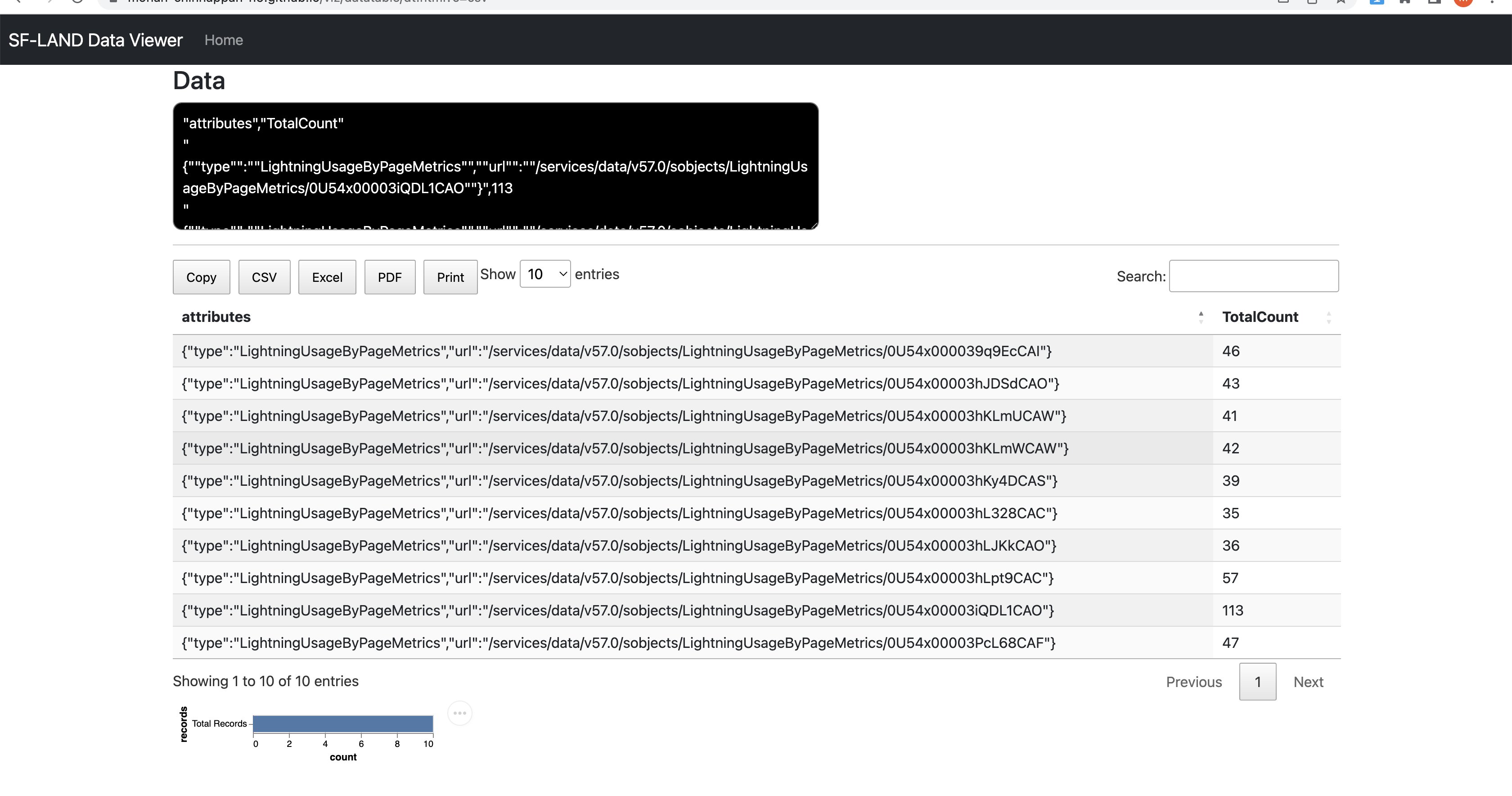

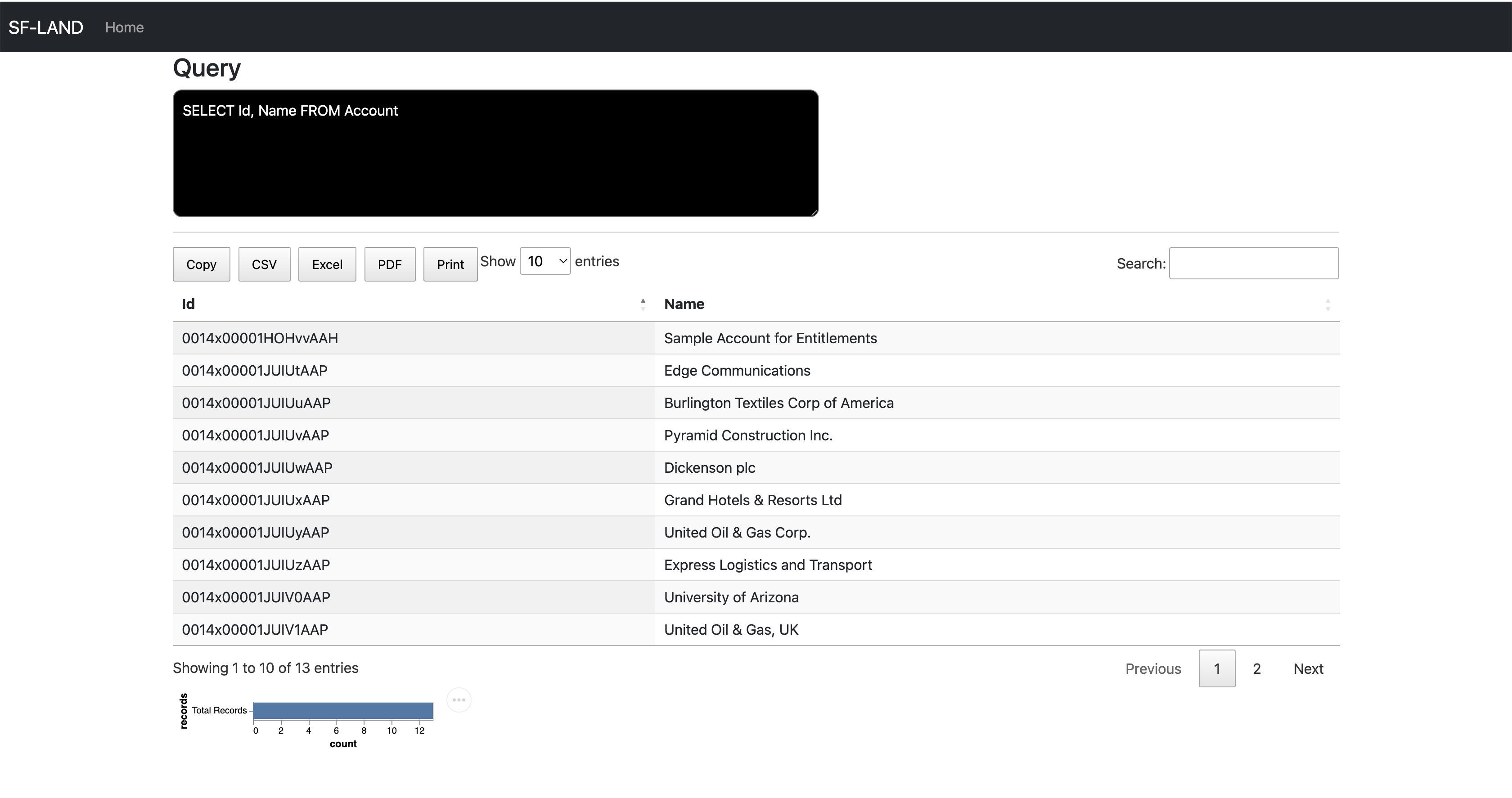

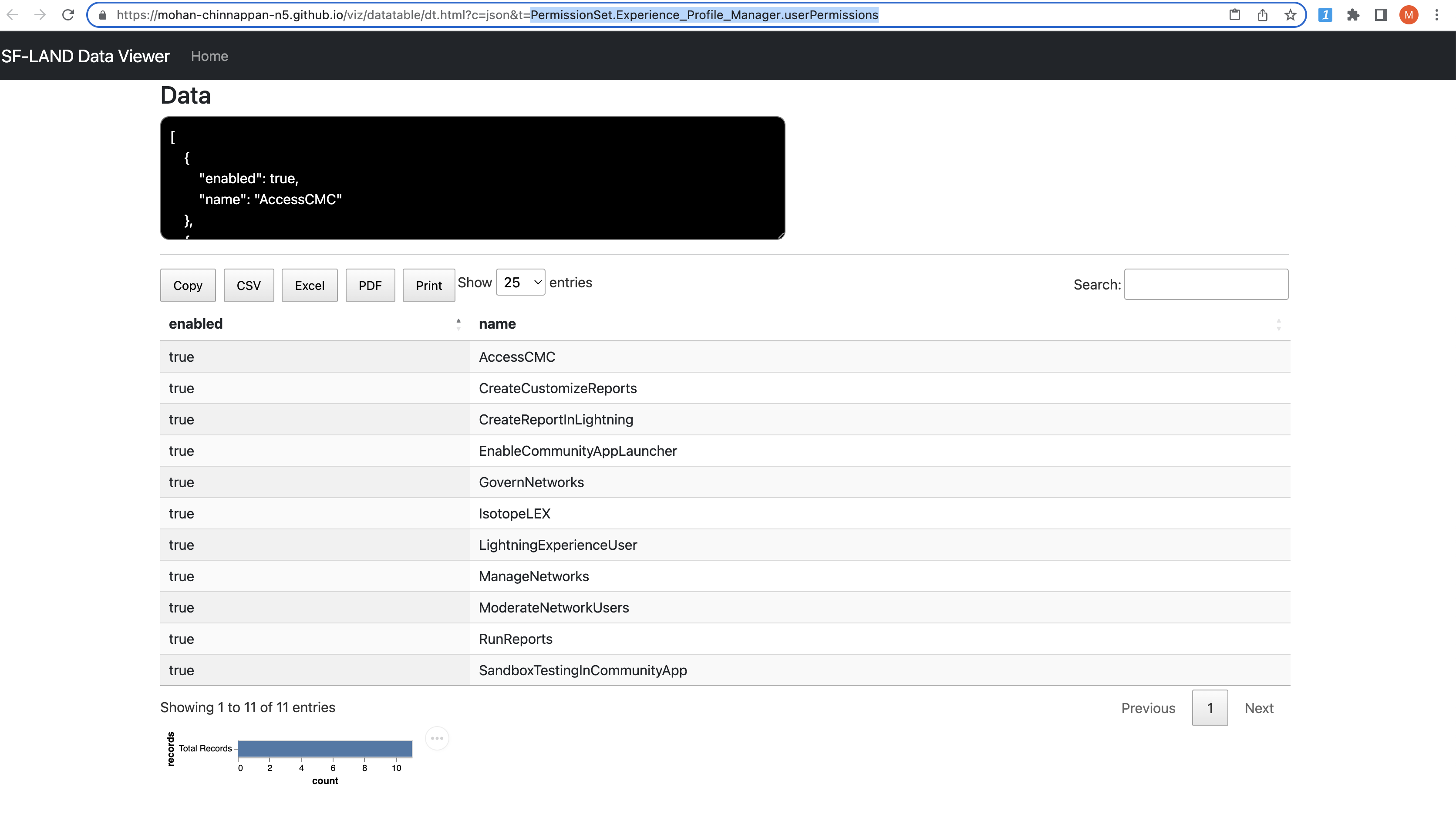

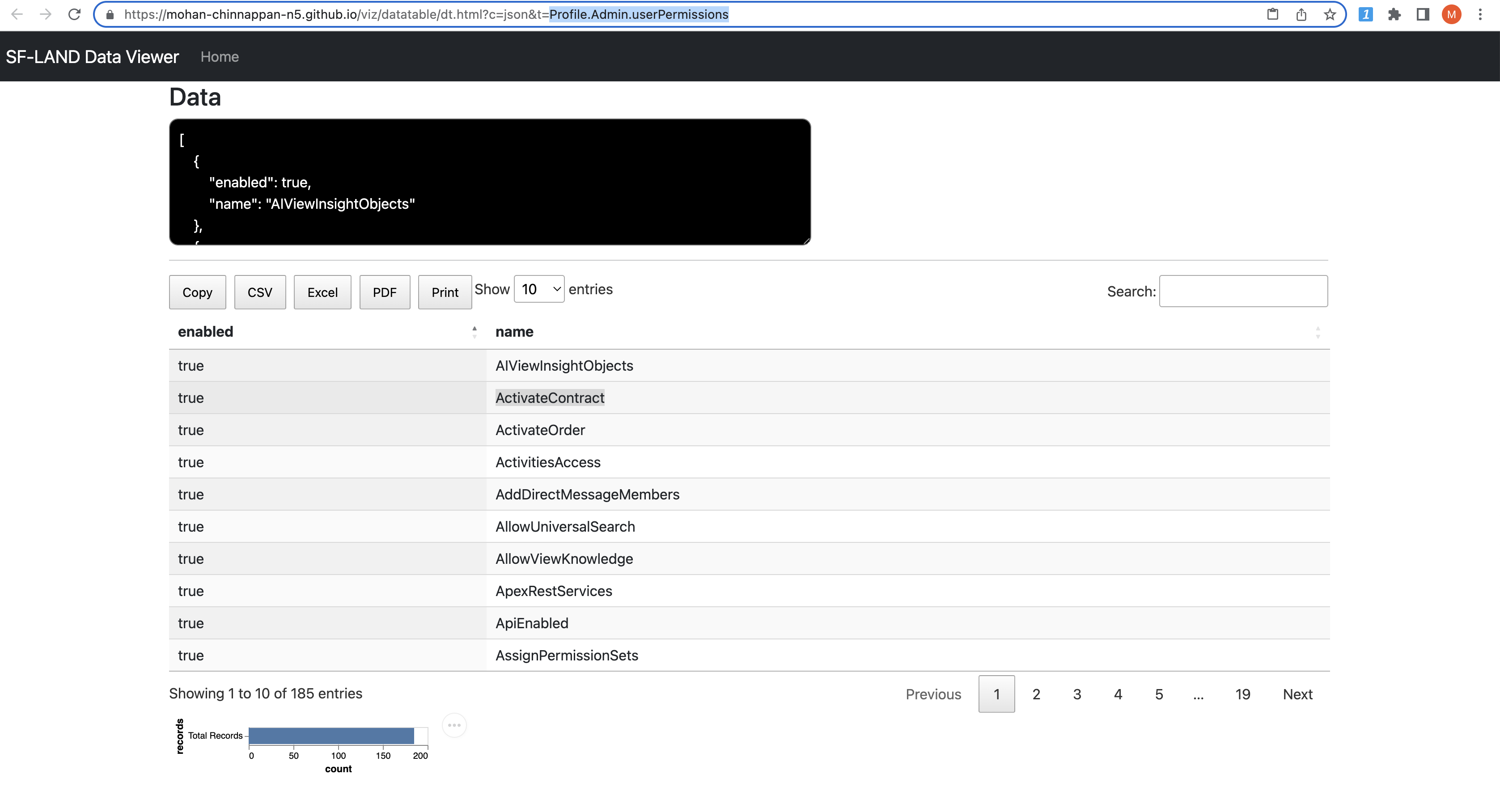

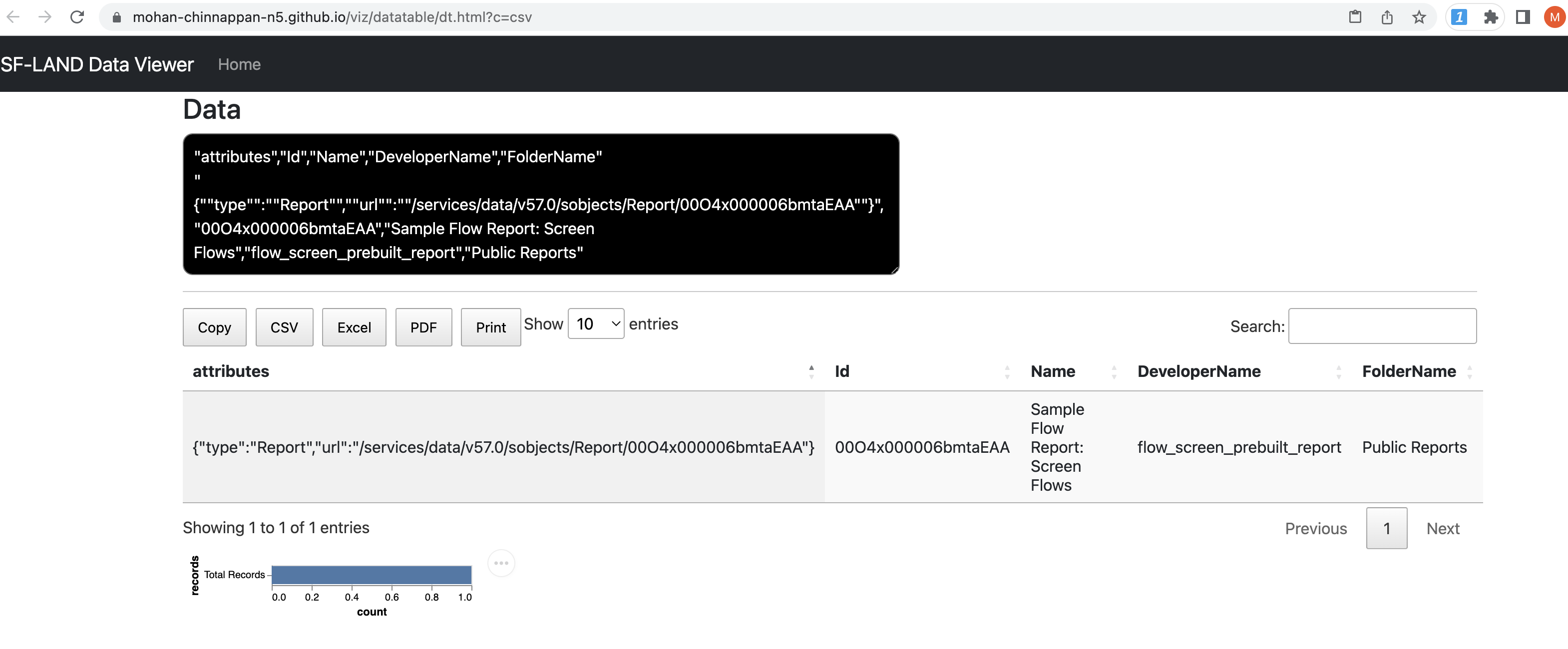

- SF-LAND Data Viz for viewing the metadata

- Getting an ERD for given sObjects

Tools for getting the complete metadata of your org

Let us use a scratch org to illustrate the concepts:

| ALIAS | USERNAME | ORG ID | EXPIRATION DATE |
|---|---|---|---|
| treeprjSO | test-uzsmfdqkhtk7@example.com | 00DDM000003raPM2AY | 2023-01-28 |

Let us write all the objects in the org to an object-list text file:

```bash
# run from the ~/treeprj project folder
sfdx mohanc:md:describeGlobal -u test-uzsmfdqkhtk7@example.com > treeprjSO_Objects.txt
```

You can view the list of object names in the org from this text file with:

```bash
# tr is portable; BSD sed (macOS) does not turn \n in a replacement into a newline
tr ',' '\n' < treeprjSO_Objects.txt | bat
```

```
───────┬──────────────────────────────
       │ STDIN
───────┼──────────────────────────────
   1   │ AIApplication
   2   │ AIApplicationConfig
   3   │ AIInsightAction
   4   │ AIInsightFeedback
   5   │ AIInsightReason
   6   │ AIInsightValue
   7   │ AIPredictionEvent
   8   │ AIRecordInsight
   9   │ AcceptedEventRelation
  10   │ Account
  11   │ AccountChangeEvent
  12   │ AccountCleanInfo
  13   │ AccountContactRole
  14   │ AccountContactRoleChangeEvent
  15   │ AccountFeed
  16   │ AccountHistory
  17   │ AccountPartner
  18   │ AccountShare
  19   │ ActionLinkGroupTemplate
  20   │ ActionLinkTemplate
  21   │ ActiveFeatureLicenseMetric
  22   │ ActivePermSetLicenseMetric
  23   │ ActiveProfileMetric
  24   │ ActivityHistory
  25   │ AdditionalNumber
  26   │ Address
  27   │ AggregateResult
  28   │ AlternativePaymentMethod
  29   │ AlternativePaymentMethodShare
  30   │ Announcement
:
```
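If you want to post-process this list rather than just page through it, a couple of shell functions are enough. This is a minimal sketch, assuming the describeGlobal output is a single comma-separated list of API names (the same shape the commands above rely on). `list_objects` and `summarize_objects` are hypothetical helpers, not commands of the mohanc plugin, and counting every name that ends in `__c` as custom is a simplification.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for the output of `sfdx mohanc:md:describeGlobal`,
# assumed to be a single comma-separated list of sObject API names.

# Print one name per line, sorted bytewise, dropping whitespace and empty entries.
list_objects() {
    tr ',' '\n' | sed 's/[[:space:]]//g' | grep -v '^$' | LC_ALL=C sort
}

# Print "total=<n> custom=<m>", where custom counts names ending in __c.
summarize_objects() {
    local names total custom
    names="$(list_objects)"
    total=$(printf '%s\n' "$names" | grep -c .)
    custom=$(printf '%s\n' "$names" | grep -c '__c$')
    echo "total=${total} custom=${custom}"
}

# Usage:
#   list_objects < treeprjSO_Objects.txt
#   summarize_objects < treeprjSO_Objects.txt
```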

Use this object-list text file to export the org metadata into treeprjSO_metadata.csv:

- This operation may take a few minutes, depending on the size of your org's metadata.

```bash
sfdx mohanc:md:describe -u test-uzsmfdqkhtk7@example.com -i treeprjSO_Objects.txt > treeprjSO_metadata.csv
bat treeprjSO_metadata.csv
```

```
───────┬──────────────────────────────
       │ File: treeprjSO_metadata.csv
───────┼──────────────────────────────
   1   │ "sobjectName","sobjectLabel","name","label","type","length","nillable","referenceTo","relationshipName","unique","updateable","inlineHelpText","picklistValues","externalId"
   2   │ "AIApplication","AI Application","Id","AI Application ID","id",18,false,"[]",,false,false,,"[]",false
   3   │ "AIApplication","AI Application","IsDeleted","Deleted","boolean",0,false,"[]",,false,false,,"[]",false
   4   │ "AIApplication","AI Application","DeveloperName","Name","string",80,false,"[]",,false,false,,"[]",false
   5   │ "AIApplication","AI Application","Language","Master Language","picklist",40,false,"[]",,false,false,,"[""English"",""German"",""Spanish"",""French"",""Italian"",""Japanese"",""Swedish"",""Korean"",""Chinese (Traditional)"",""Chinese (Simplified)"",""Portuguese (Brazil)"",""Dutch"",""Danish"",""Thai"",""Finnish"",""Russian"",""Spanish (Mexico)"",""Norwegian""]",false
   6   │ "AIApplication","AI Application","MasterLabel","Label","string",80,false,"[]",,false,false,,"[]",false
   7   │ "AIApplication","AI Application","NamespacePrefix","Namespace Prefix","string",15,true,"[]",,false,false,,"[]",false
   8   │ "AIApplication","AI Application","CreatedDate","Created Date","datetime",0,false,"[]",,false,false,,"[]",false
   9   │ "AIApplication","AI Application","CreatedById","Created By ID","reference",18,false,"[""User""]","CreatedBy",false,false,,"[]",false
  10   │ "AIApplication","AI Application","LastModifiedDate","Last Modified Date","datetime",0,false,"[]",,false,false,,"[]",false
  11   │ "AIApplication","AI Application","LastModifiedById","Last Modified By ID","reference",18,false,"[""User""]","LastModifiedBy",false,false,,"[]",false
  12   │ "AIApplication","AI Application","SystemModstamp","System Modstamp","datetime",0,false,"[]",,false,false,,"[]",false
  13   │ "AIApplication","AI Application","Status","Status","picklist",255,false,"[]",,false,false,,"[""3"",""2"",""1"",""0""]",false
  14   │ "AIApplication","AI Application","Type","App Type","picklist",255,false,"[]",,false,false,,"[""5"",""14""]",false
  15   │ "sobjectName","sobjectLabel","name","label","type","length","nillable","referenceTo","relationshipName","unique","updateable","inlineHelpText","picklistValues","externalId"
  16   │ "AIApplicationConfig","AI Application config","Id","AI Application config Id","id",18,false,"[]",,false,false,,"[]",false
  17   │ "AIApplicationConfig","AI Application config","IsDeleted","Deleted","boolean",0,false,"[]",,false,false,,"[]",false
  18   │ "AIApplicationConfig","AI Application config","DeveloperName","Name","string",80,false,"[]",,false,false,,"[]",false
  19   │ "AIApplicationConfig","AI Application config","Language","Master Language","picklist",40,false,"[]",,false,false,,"[""English"",""German"",""Spanish"",""French"",""Italian"",""Japanese"",""Swedish"",""Korean"",""Chinese (Traditional)"",""Chinese (Simplified)"",""Portuguese (Brazil)"",""Dutch"",""Danish"",""Thai"",""Finnish"",""Russian"",""Spanish (Mexico)"",""Norwegian""]",false
  20   │ "AIApplicationConfig","AI Application config","MasterLabel","Label","string",80,false,"[]",,false,false,,"[]",false
  21   │ "AIApplicationConfig","AI Application config","NamespacePrefix","Namespace Prefix","string",15,true,"[]",,false,false,,"[]",false
  22   │ "AIApplicationConfig","AI Application config","CreatedDate","Created Date","datetime",0,false,"[]",,false,false,,"[]",false
  23   │ "AIApplicationConfig","AI Application config","CreatedById","Created By ID","reference",18,false,"[""User""]","CreatedBy",false,false,,"[]",
```
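Because the header row is repeated for every object, a quick field count per object has to skip those rows. The sketch below is a hypothetical helper (`fields_per_object` is not part of the plugin). It assumes, based on the sample output above, that the object API name in the first column never contains a comma and that every field sits on a single line; a multi-line `inlineHelpText` value would break that assumption.

```bash
#!/usr/bin/env bash
# Hypothetical helper: count fields per sObject in the CSV written by
# `sfdx mohanc:md:describe`. Assumptions:
#   - column 1 is the quoted sObject API name and contains no comma;
#   - the "sobjectName",... header row is repeated once per object;
#   - each field record is on a single line.
fields_per_object() {
    grep -v '^"sobjectName"' "$1" |
        cut -d, -f1 |
        tr -d '"' |
        LC_ALL=C sort |
        uniq -c |
        awk '{ print $2 "," $1 }'    # emit "<sObject>,<field count>"
}

# Usage:
#   fields_per_object treeprjSO_metadata.csv
```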

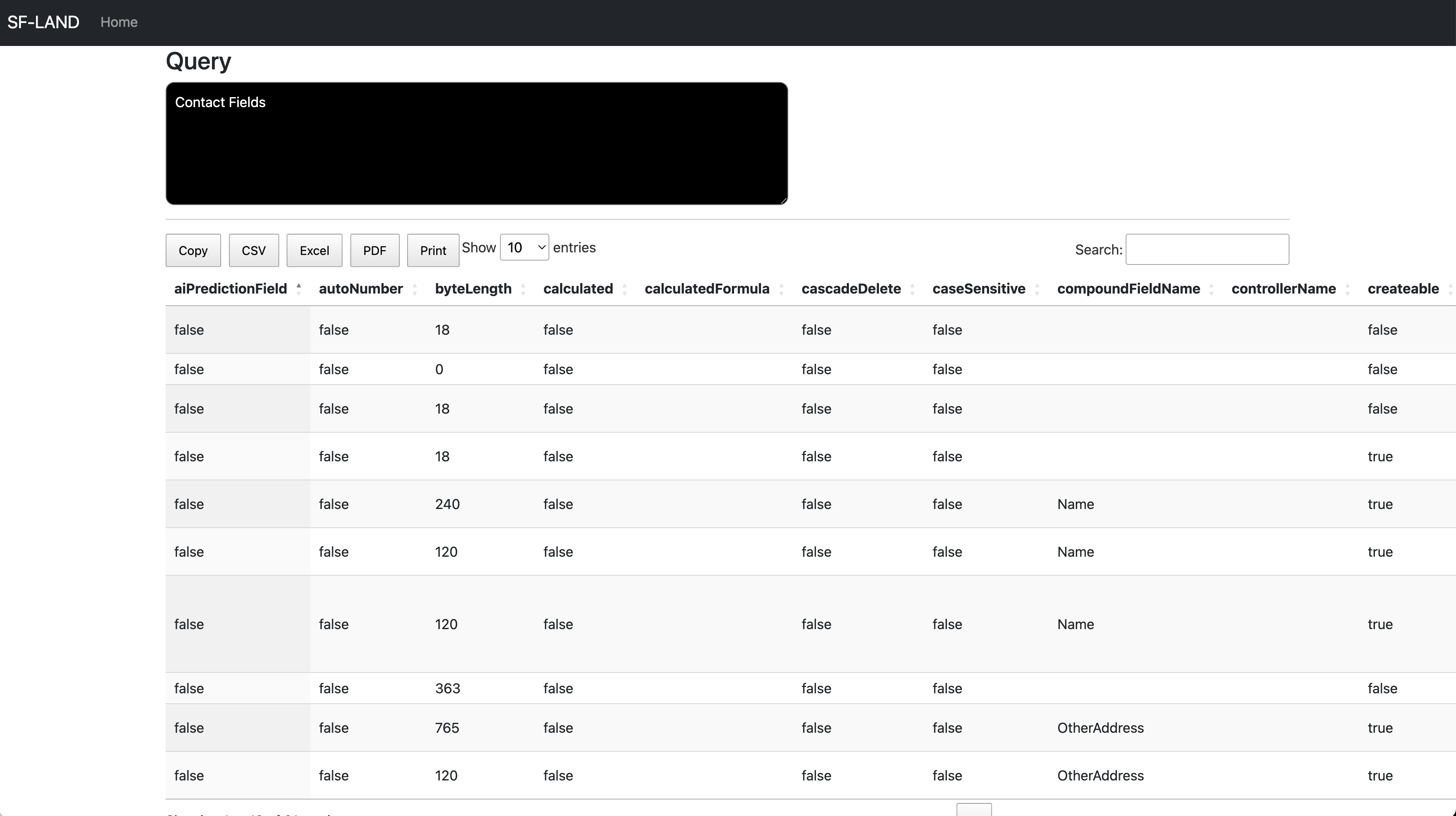

Using SF-LAND Data Viz to view the model file

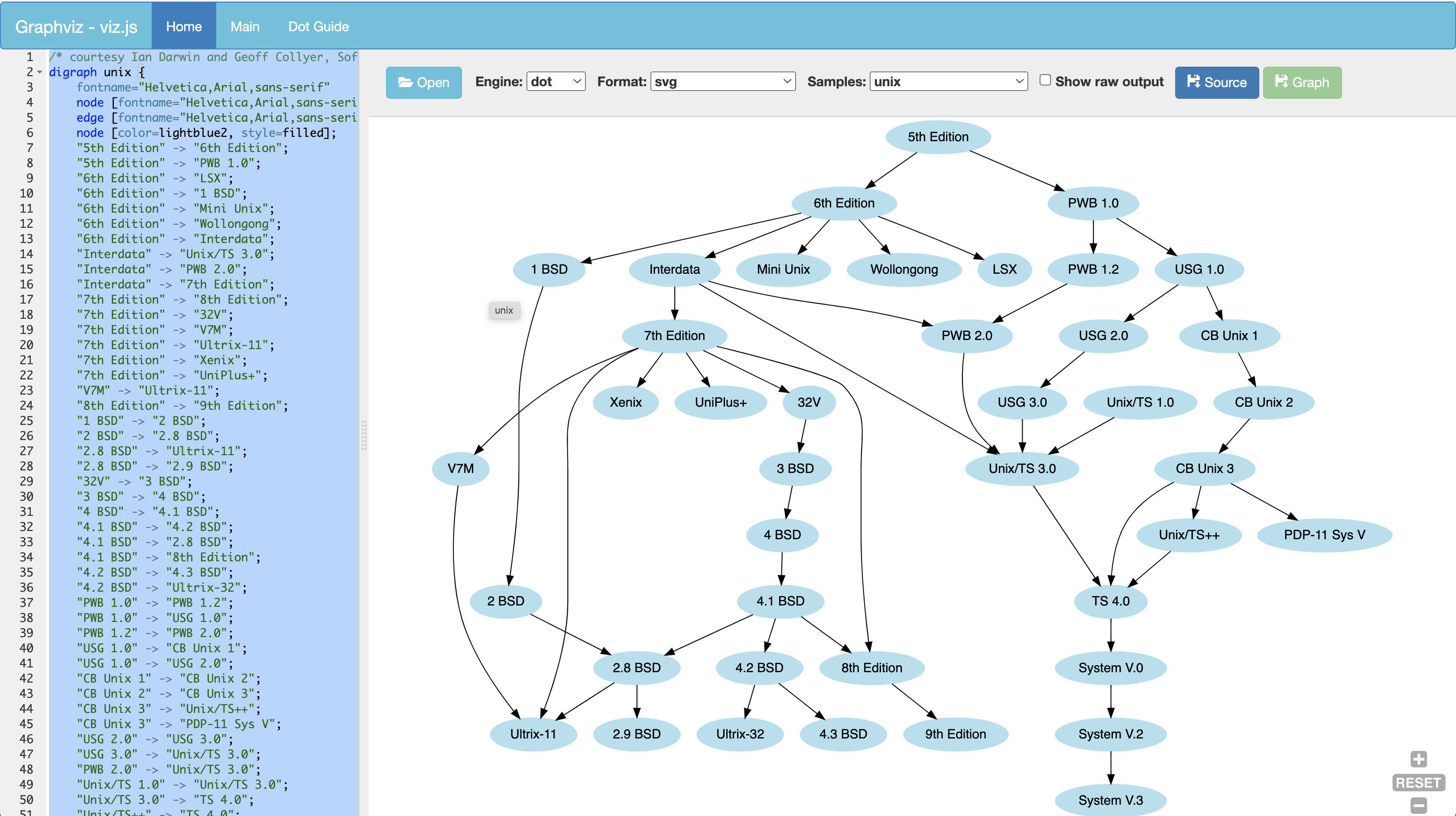

ERD

- Let us get the ERD for the Contact and Account objects.

- Note: this also emits the metadata for these two objects as CSV, which the command below redirects to contact-account.csv.

```bash
sfdx mohanc:md:describe -s Contact,Account -e contact-account.dot -u test-uzsmfdqkhtk7@example.com > contact-account.csv
```

Generate the SVG for this ERD:

```bash
sfdx mohanc:viz:graphviz:dot2svg -i contact-account.dot
```

Output: `SVG file is written to contact-account.dot.svg`

```bash
# open is macOS-specific; on Linux, xdg-open does the same
open contact-account.dot.svg
```
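An ERD in DOT form is a directed graph in which each lookup or master-detail field becomes an edge from the child object to its parent; for example, `Contact.AccountId` points to `Account`. The sketch below builds such a graph from hypothetical `child,parent,field` lines. It only illustrates the idea and is not the DOT that `mohanc:md:describe` emits; rendering it with Graphviz's `dot` command assumes Graphviz is installed locally.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: build a tiny Graphviz DOT ERD from "child,parent,field" lines.
# This is NOT the format produced by mohanc:md:describe; it only shows an ERD
# as a directed graph of lookup relationships.
to_dot() {
    echo 'digraph ERD {'
    echo '    node [shape=box];'
    while IFS=, read -r child parent field; do
        [ -z "$child" ] && continue          # skip blank lines
        echo "    \"${child}\" -> \"${parent}\" [label=\"${field}\"];"
    done
    echo '}'
}

# Usage (rendering needs Graphviz):
#   printf 'Contact,Account,AccountId\n' | to_dot > mini-erd.dot
#   dot -Tsvg mini-erd.dot -o mini-erd.svg
```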


Financial Services Cloud (FSC) Interactive Data Model

Sales Cloud Interactive Data Model

Tools for the trade

- In this section, we discuss the tools used to maintain a Salesforce org.

Tools for the Salesforce Org

Topics

- Visualizing the org

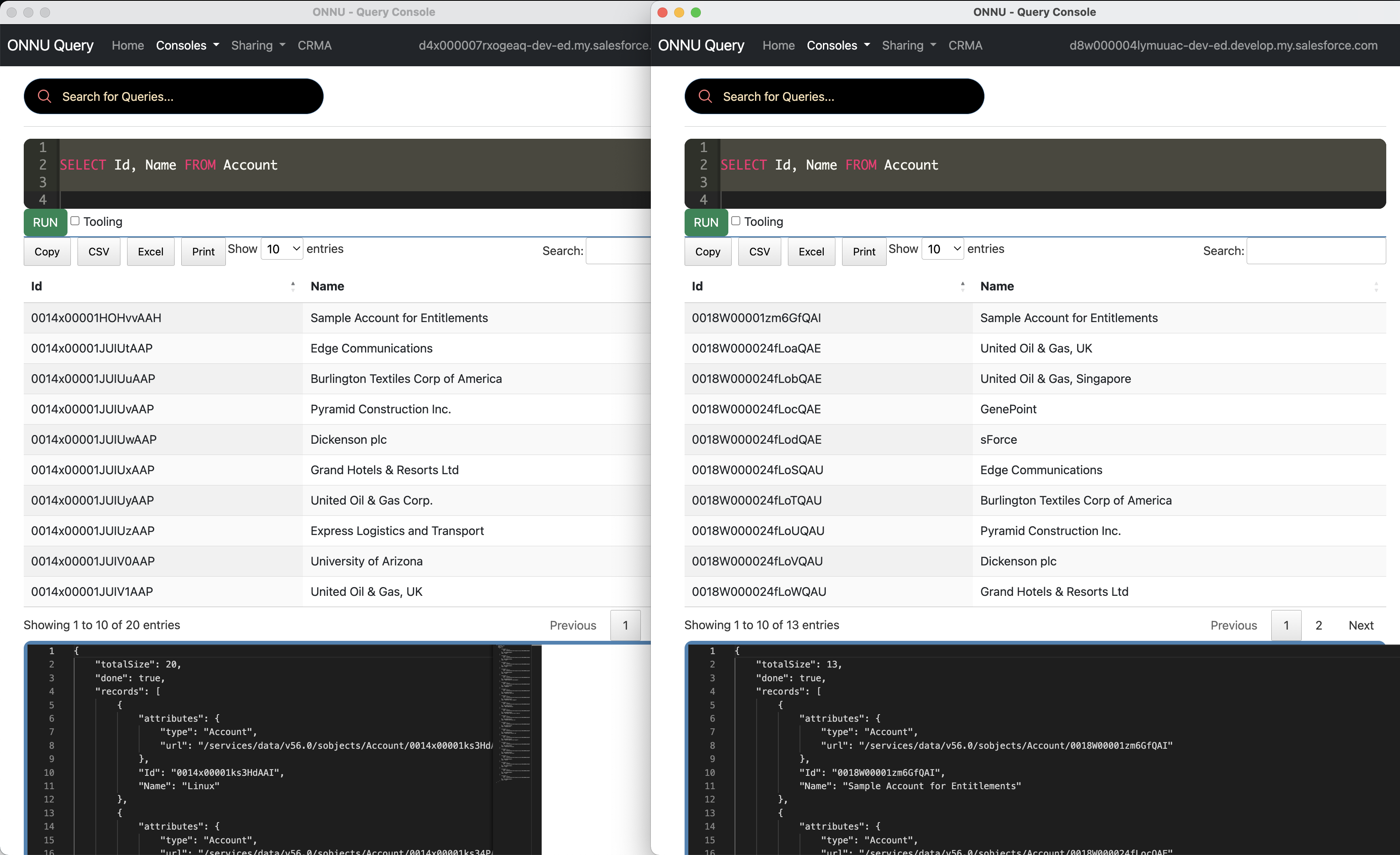

- Comparing query results from 2 orgs

- Compare 2 Salesforce Orgs for a metadata type

- List metadata items of a given metadata type and compare them with the local project

- Export SObject Metadata from an Org

- ERD for the given list of Salesforce Objects

- Compare SObjects in 2 orgs

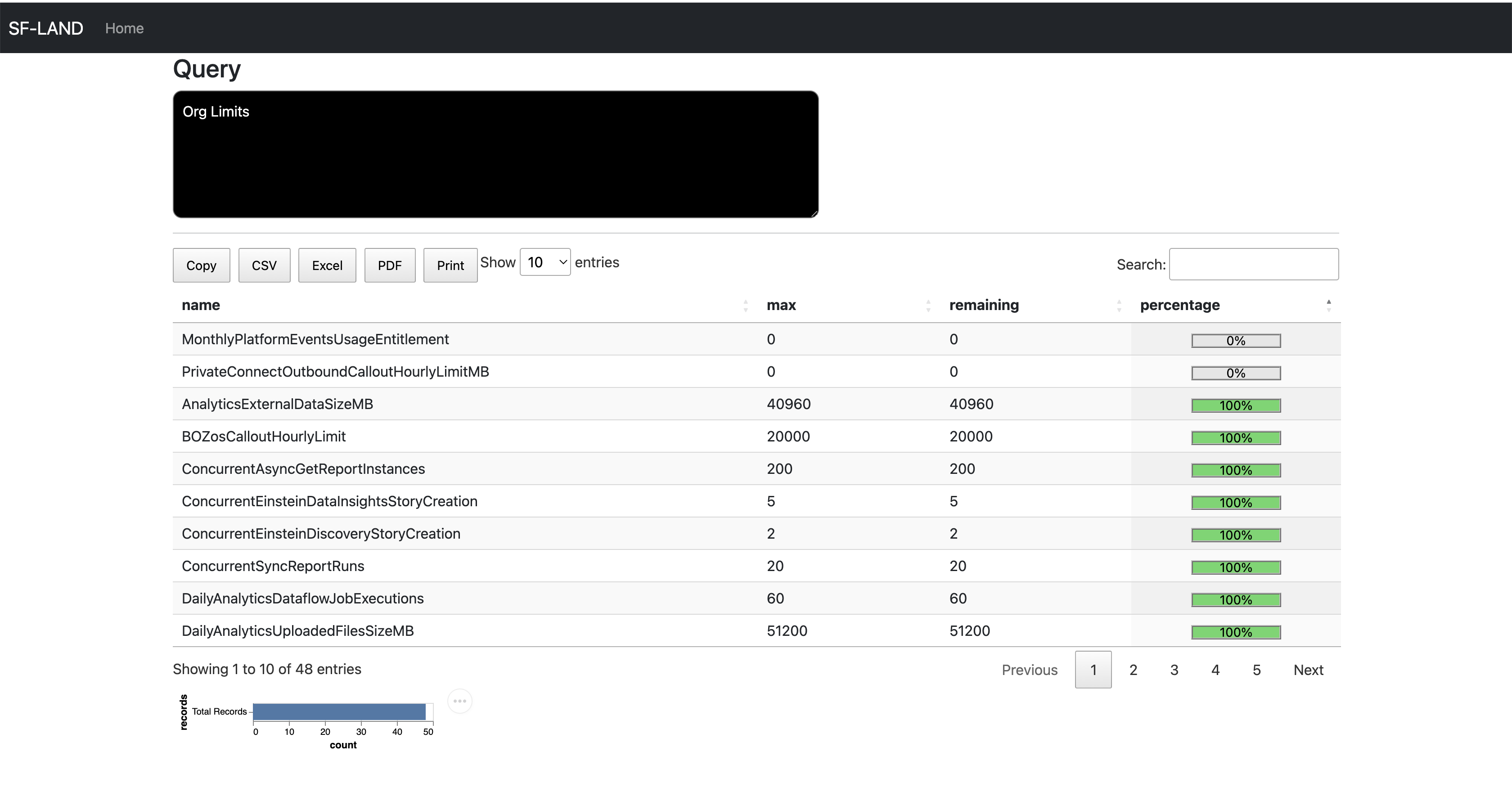

Visualizing the org

```bash
sfdx mohanc:org:viz -u test-uzsmfdqkhtk7@example.com --help
```

```
Visualize the Org

USAGE
  $ sfdx mohanc org viz [-u <string>] [--apiversion <string>] [--json] [--loglevel
    trace|debug|info|warn|error|fatal|TRACE|DEBUG|INFO|WARN|ERROR|FATAL]

FLAGS
  -u, --targetusername=<value>  username or alias for the target org; overrides default target org
  --apiversion=<value>          override the api version used for api requests made by this command
  --json                        format output as json
  --loglevel=(trace|debug|info|warn|error|fatal|TRACE|DEBUG|INFO|WARN|ERROR|FATAL)
                                [default: warn] logging level for this command invocation

DESCRIPTION
  Visualize the Org

EXAMPLES
  Visualize the org
    sfdx mohanc:org:viz
```

Demo of org:viz

```bash
sfdx mohanc:org:viz -u test-uzsmfdqkhtk7@example.com
```

```
=== Working on getOrgData ===
=== Working on getOrgLimits ===
=== Working on getCount:ApexClass ===
=== Working on getCount:ApexPage ===
=== Working on getCount:ConnectedApplication ===
=== Working on getCount:CustomPermission ===
=== Working on getCount:ExternalDataSource ===
=== Working on getCount:FieldPermissions ===
=== Working on getCount:FieldSecurityClassification ===
=== Working on getCount:Group ===
=== Working on getCount:GroupMember ===
=== Working on getCount:NamedCredential ===
=== Working on getCount:ObjectPermissions ===
=== Working on getCount:PackageLicense ===
=== Working on getCount:PermissionSet ===
=== Working on getCount:PermissionSetAssignment ===
=== Working on getCount:PermissionSetGroup ===
=== Working on getCount:PermissionSetLicense ===
=== Working on getCount:Profile ===
=== Working on getCount:QueueSobject ===
=== Working on getCount:SetupAssistantStep ===
=== Working on getCount:SetupEntityAccess ===
=== Working on getCount:User ===
=== Working on getCount:UserLicense ===
=== Working on getCount:UserPackageLicense ===
=== Working on getCount:UserRole ===
=== Working on getCount:Account ===
=== Working on getCount:AccountContactRole ===
=== Working on getCount:Asset ===
=== Working on getCount:Campaign ===
=== Working on getCount:CampaignMember ===
=== Working on getCount:Case ===
=== Working on getCount:CaseStatus ===
=== Working on getCount:Contact ===
=== Working on getCount:Contract ===
=== Working on getCount:ContractContactRole ===
=== Working on getCount:Lead ===
=== Working on getCount:Opportunity ===
=== Working on getCount:OpportunityCompetitor ===
=== Working on getCount:OpportunityContactRole ===
=== Working on getCount:OpportunityStage ===
=== Working on getCount:Order ===
=== Working on getCount:Partner ===
=== Working on getCount:PartnerRole ===
=== Working on getCount:Account ===
=== Working on getCount:Case ===
=== Working on getCount:CaseComment ===
=== Working on getCount:CaseHistory ===
=== Working on getCount:CaseSolution ===
=== Working on getCount:Contact ===
=== Working on getCount:Solution ===
=== Working on getPackageInfo ===
=== Working on EntityDefinitionSummary:Account ===
=== Working on EntityDefinitionSummary:Contact ===
=== Working on EntityDefinitionSummary:AccountContactRole ===
=== Working on EntityDefinitionSummary:Opportunity ===
=== Working on EntityDefinitionSummary:OpportunityContactRole ===
=== Working on EntityDefinitionSummary:OpportunityCompetitor ===
=== Working on EntityDefinitionSummary:Lead ===
=== Working on EntityDefinitionSummary:Case ===
=== Working on EntityDefinitionSummary:Campaign ===
=== Working on EntityDefinitionSummary:CampaignMember ===
=== Working on EntityDefinitionSummary:Asset ===
=== Working on EntityDefinitionSummary:Contract ===
=== Working on EntityDefinitionSummary:ContractContactRole ===
=== Working on EntityDefinitionSummary:Order ===
=== Working on getApexCodeCoverage ===
=== Working on getBusinessProcess ===
=== Working on getCertificate ===
=== Working on CspTrustedSite ===
=== Working on getOauthToken ===
=== Working on getSetupAuditTrail ===
=== Working on getInactiveUsers ===
=== Working on getUnusedProfiles ===
=== Working on getUsedProfiles ===
=== Working on getUsedPermissionSets ===
=== Working on getUnusedPermissionSets ===
=== Working on getUsedProfiles ===
=== Working on getUsedRoles ===
=== Working on getSysAdminUsers ===
=== Working on getProfileInfo:System Administrator ===
Error: {
  "name": "INVALID_FIELD",
  "errorCode": "INVALID_FIELD"
}
=== Working on getProfileInfo:Customer Community Login User ===
Error: {
  "name": "INVALID_FIELD",
  "errorCode": "INVALID_FIELD"
}
=== Working on getPermissionSetInfo:Sales_Ops ===
Error: {
  "name": "INVALID_FIELD",
  "errorCode": "INVALID_FIELD"
}
=== Working on getProfileForUserLicense:Guest ===
Error: {
  "name": "INVALID_FIELD",
  "errorCode": "INVALID_FIELD"
}
=== Working on getUserLicenseInfo ===
=== Working on getNetworkMemberInfo ===
Error: {
  "name": "INVALID_TYPE",
  "errorCode": "INVALID_TYPE"
}
=== Working on getHasEinsteinDataDiscovery ===
=== Working on getHasEDD ===
=== Working on getHasEinsteinDataDiscovery ===
=== Working on getCountTooling:flow ===
=== Working on getFlows ===
=== Working on getFlowMetadata ===
=== Writing Org JSON in file Org.json ...
=== Writing visualization in file Org.svg ...
Visualization done. "open Org.svg" in Chrome Browser to view the Visualization
```
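Several steps in the run above fail with `INVALID_FIELD` or `INVALID_TYPE`, while the tool still goes on to write Org.json and Org.svg. To review those failures, you could save the console output and extract them with awk. This is a sketch assuming the log format shown above (a `=== Working on <step> ===` line before each step, and an `Error:` block containing `errorCode`); `failed_steps` and `viz.log` are hypothetical names.

```bash
#!/usr/bin/env bash
# Hypothetical helper: list the org:viz steps that reported an error.
# Assumes the console output was saved first, for example:
#   sfdx mohanc:org:viz -u <username> 2>&1 | tee viz.log
failed_steps() {
    awk '
        /^=== Working on / {               # remember the current step name
            step = $0
            sub(/^=== Working on /, "", step)
            sub(/ ===$/, "", step)
        }
        /^Error:/ { pending = step }       # an error block starts for this step
        /"errorCode"/ && pending != "" {   # pull the code out of the JSON line
            code = $0
            sub(/.*"errorCode": *"/, "", code)
            sub(/".*/, "", code)
            print pending ": " code
            pending = ""
        }
    ' "$1"
}

# Usage:
#   failed_steps viz.log
```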

Org SVG

Comparing query results from 2 orgs

```bash
sfdx mohanc:org:compare --help
```

```
Compare 2 orgs for the given metadata type query

USAGE
  $ sfdx mohanc org compare -o <string> [-m <string>] [-q <string>] [--json] [--loglevel
    trace|debug|info|warn|error|fatal|TRACE|DEBUG|INFO|WARN|ERROR|FATAL]

FLAGS
  -m, --mtype=<value>          metadata type, exmaple: profile
  -o, --orgusernames=<value>   (required) Comma separated orgUserName, example:
                               user1@email.com,user2@email.com
  -q, --inputfilename=<value>  soql file or url for soql
  --json                       format output as json
  --loglevel=(trace|debug|info|warn|error|fatal|TRACE|DEBUG|INFO|WARN|ERROR|FATAL)
                               [default: warn] logging level for this command invocation

DESCRIPTION
  Compare 2 orgs for the given metadata type query

EXAMPLES
  Compare 2 orgs for the given metadata type query
    sfdx mohanc:org:compare -o orgUserName1,orgUserName2 -m metadataType
    when -m is used, query here: https://github.com/mohan-chinnappan-n/soql will be used
    to refer ObjectPermissions
    (https://raw.githubusercontent.com/mohan-chinnappan-n/soql/main/ObjectPermissions.soql)
    use: sfdx mohanc:org:compare -o orgUserName1,orgUserName2 -m ObjectPermissions
    sfdx mohanc:org:compare -o orgUserName1,orgUserName2 -i inputquery.soql
    Note: inputquery.soql can be a local file or url
```

Example

```bash
sfdx mohanc:org:compare -o mohan.chinnappan.n.sel@gmail.com,mohan.chinnappan.n.sel2@gmail.com -m profile
sfdx mohanc:org:compare -o mohan.chinnappan.n.sel@gmail.com,mohan.chinnappan.n.sel2@gmail.com -q inputquery.soql
```

Demo of Org query compare tool

Compare 2 Salesforce Orgs for a metadata type

```bash
sfdx mohanc:mdapi:helper:rc2 -m Profile -o mohan.chinnappan.n.sel@gmail.com,mohan.chinnappan.n.sel2@gmail.com -n "Admin" --help
```

```
Retrieve Metadata from 2 orgs and compares them

USAGE
  $ sfdx mohanc mdapi helper rc2 -o <string> -m <string> -n <string> [-u <string>] [--apiversion <string>] [--json] [--loglevel
    trace|debug|info|warn|error|fatal|TRACE|DEBUG|INFO|WARN|ERROR|FATAL]

FLAGS
  -m, --mtype=<value>           (required) Metadata type, example: Profile
  -n, --name=<value>            (required) Name of the metadata type
  -o, --orgusernames=<value>    (required) 2 Org usernames comma separated
  -u, --targetusername=<value>  username or alias for the target org; overrides default target org
  --apiversion=<value>          override the api version used for api requests made by this command
  --json                        format output as json
  --loglevel=(trace|debug|info|warn|error|fatal|TRACE|DEBUG|INFO|WARN|ERROR|FATAL)
                                [default: warn] logging level for this command invocation

DESCRIPTION
  Retrieve Metadata from 2 orgs and compares them

EXAMPLES
  retrieve a metadata type from 2 orgs and compare them
    sfdx mohanc:mdapi:helper:rc -m <metadata type> -o orgUserName1,orgUserName2 -n <name>
  Example:
    sfdx mohanc:mdapi:helper:rc2 -m PermissionSet -o orgUserName1,orgUserName2 -n PermissionSetName
```

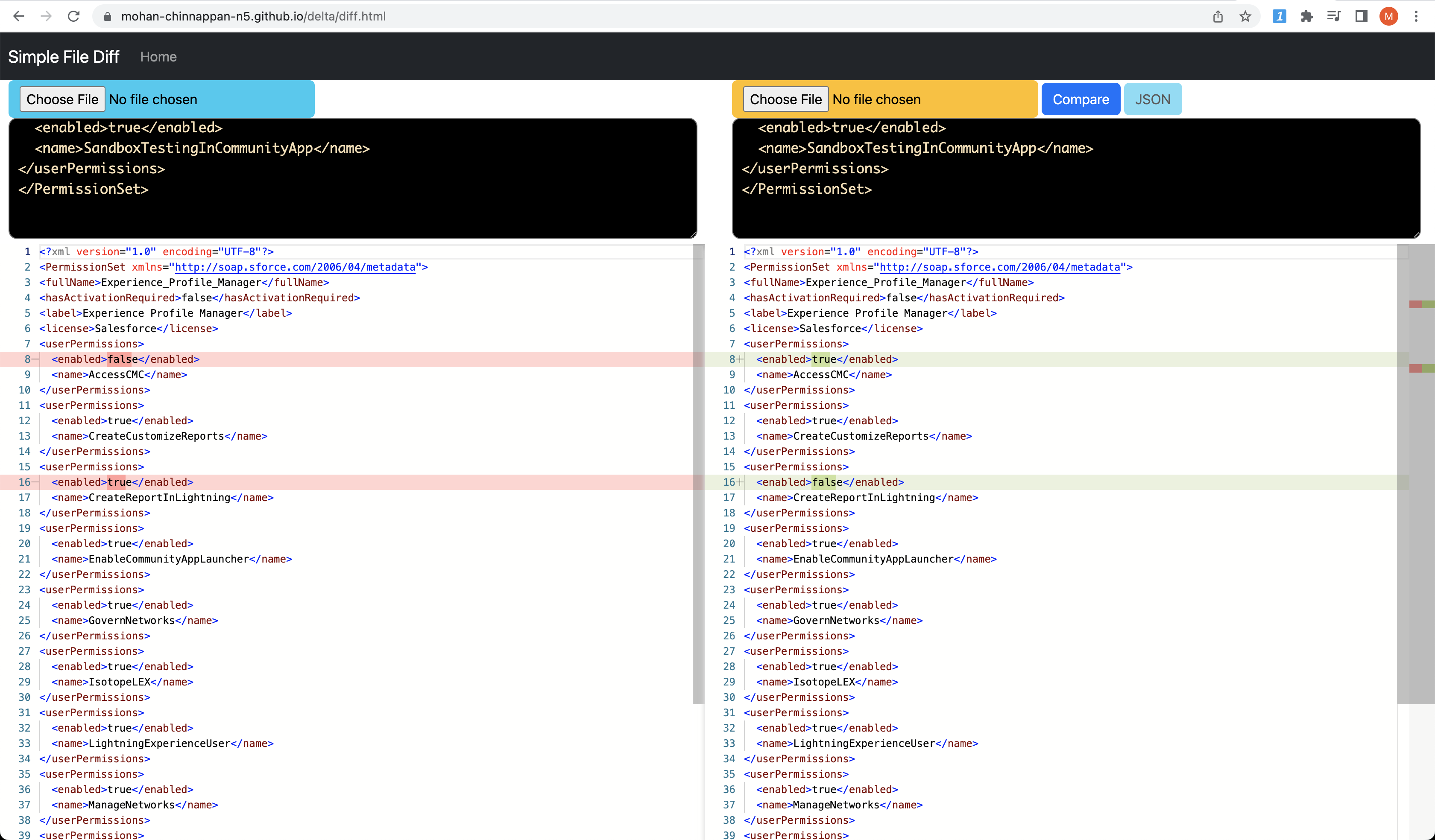

Demo: comparing 2 Salesforce orgs for a metadata type

List metadata items of a given metadata type and compare them with the local project

```bash
sfdx mohanc:mdapi:helper:list -m Profile -u mohan.chinnappan.n.sel@gmail.com -p force-app/main/default/ --help
```

```
List Metadata item of the given Metadata type and compare it with local

USAGE
  $ sfdx mohanc mdapi helper list -m <string> -p <string> [-u <string>] [--apiversion <string>] [--json] [--loglevel
    trace|debug|info|warn|error|fatal|TRACE|DEBUG|INFO|WARN|ERROR|FATAL]

FLAGS
  -m, --mtype=<value>           (required) Metadata type, example: Profile
  -p, --prjfolder=<value>       (required) DX Project folder path (example:
                                ~/treeprj/force-app/main/default/
  -u, --targetusername=<value>  username or alias for the target org; overrides default target org
  --apiversion=<value>          override the api version used for api requests made by this command
  --json                        format output as json
  --loglevel=(trace|debug|info|warn|error|fatal|TRACE|DEBUG|INFO|WARN|ERROR|FATAL)
                                [default: warn] logging level for this command invocation

DESCRIPTION
  List Metadata item of the given Metadata type and compare it with local

EXAMPLES
  List Metadata item of the given Metadata type and compare it with local
    sfdx mohanc:mdapi:helper:list -m <metadata type> -p <dxProjectFolder>
  Example:
    sfdx mohanc:mdapi:helper:list -m Profile -p ~/treeprj/force-app/main/default/
```

sfdx mohanc:mdapi:helper:list -m Profile -u mohan.chinnappan.n.sel@gmail.com -p force-app/main/default/

force-app/main/default/profiles/SolutionManager.profile-meta.xml true

force-app/main/default/profiles/Analytics Cloud Security User.profile-meta.xml true

force-app/main/default/profiles/Partner App Subscription User.profile-meta.xml true

force-app/main/default/profiles/Guest License User.profile-meta.xml true

force-app/main/default/profiles/HighVolumePortal.profile-meta.xml true

force-app/main/default/profiles/Analytics Cloud Integration User.profile-meta.xml true

force-app/main/default/profiles/Identity User.profile-meta.xml true

force-app/main/default/profiles/Customer Community User.profile-meta.xml true

force-app/main/default/profiles/Authenticated Website.profile-meta.xml true

force-app/main/default/profiles/Customer Portal Manager Standard.profile-meta.xml true

force-app/main/default/profiles/External Identity User.profile-meta.xml true

force-app/main/default/profiles/Customer Community Plus Login User.profile-meta.xml true

force-app/main/default/profiles/Chatter External User.profile-meta.xml true

force-app/main/default/profiles/Customer Portal Manager Custom.profile-meta.xml true

force-app/main/default/profiles/Chatter Moderator User.profile-meta.xml true

force-app/main/default/profiles/Cross Org Data Proxy User.profile-meta.xml true

force-app/main/default/profiles/Custom%3A Marketing Profile.profile-meta.xml false

force-app/main/default/profiles/StandardAul.profile-meta.xml true

force-app/main/default/profiles/Custom%3A Support Profile.profile-meta.xml false

force-app/main/default/profiles/Force%2Ecom - App Subscription User.profile-meta.xml false

force-app/main/default/profiles/mohanc Profile.profile-meta.xml true

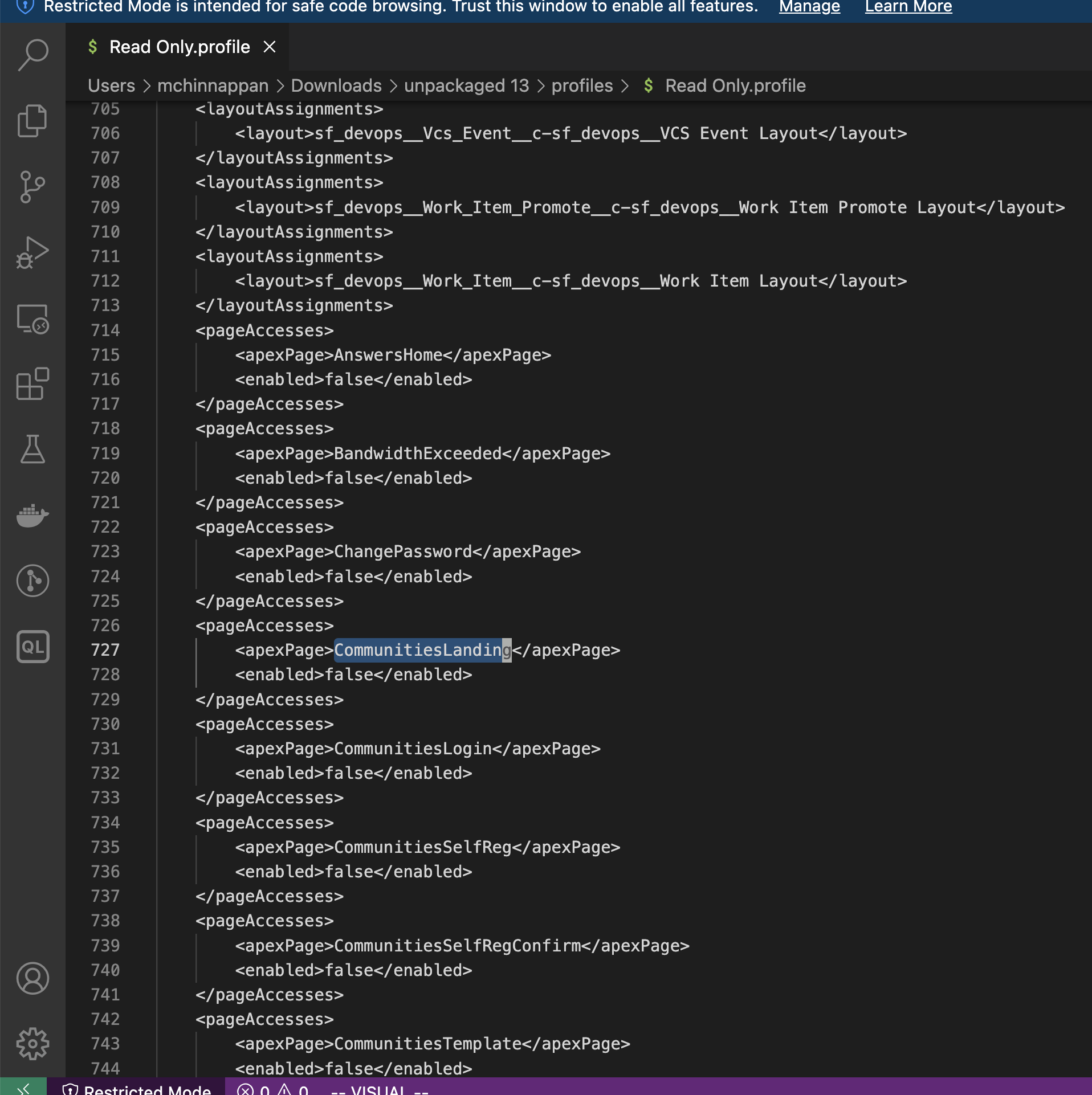

force-app/main/default/profiles/Read Only.profile-meta.xml true

force-app/main/default/profiles/Force%2Ecom - Free User.profile-meta.xml false

force-app/main/default/profiles/PlatformPortal.profile-meta.xml true

force-app/main/default/profiles/Chatter Free User.profile-meta.xml true

force-app/main/default/profiles/Gold Partner User.profile-meta.xml true

force-app/main/default/profiles/Partner Community User.profile-meta.xml true

force-app/main/default/profiles/Customer Community Login User.profile-meta.xml true

force-app/main/default/profiles/Minimum Access - Salesforce.profile-meta.xml true

force-app/main/default/profiles/Work%2Ecom Only User.profile-meta.xml false

force-app/main/default/profiles/Customer Community Plus User.profile-meta.xml true

force-app/main/default/profiles/Custom%3A Sales Profile.profile-meta.xml false

force-app/main/default/profiles/Admin.profile-meta.xml true

force-app/main/default/profiles/ContractManager.profile-meta.xml true

force-app/main/default/profiles/Silver Partner User.profile-meta.xml true

force-app/main/default/profiles/External Apps Login User.profile-meta.xml true

force-app/main/default/profiles/Partner Community Login User.profile-meta.xml true

force-app/main/default/profiles/Standard.profile-meta.xml true

force-app/main/default/profiles/MarketingProfile.profile-meta.xml true

force-app/main/default/profiles/High Volume Customer Portal User.profile-meta.xml true
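In this listing, every entry reported as `false` has a percent-encoded character in its file name (`%3A` for a colon, `%2E` for a period), for example `Custom%3A Sales Profile`. The hypothetical helpers below pull out the `false` entries and decode those two escapes so the names are easier to read. They assume each output line ends with whitespace followed by `true` or `false`; `flagged_false` and `decode_name` are illustrative names, not plugin commands.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for the output of `sfdx mohanc:mdapi:helper:list`.
# Assumes each line is "<local file path> <true|false>"; the path may contain spaces.

# Print the paths whose flag is "false" (the flag itself is stripped).
flagged_false() {
    awk '$NF == "false" { sub(/[ \t]+false$/, ""); print }'
}

# Decode the two percent-escapes seen in this listing: %3A -> ":" and %2E -> ".".
decode_name() {
    sed -e 's/%3A/:/g' -e 's/%2E/./g'
}

# Usage:
#   sfdx mohanc:mdapi:helper:list -m Profile -u <username> -p force-app/main/default/ |
#       flagged_false | decode_name
```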

Demo: listing metadata items of a given metadata type and comparing them with the local project

Export SObject Metadata from an Org

```bash
sfdx mohanc:md:describeGlobal -u mohan.chinnappan.n.sel2@gmail.com > objects.txt
sfdx mohanc:md:describe -u mohan.chinnappan.n.sel2@gmail.com -i objects.txt > org-md.csv
```

Making a package.xml for all the custom objects in the org

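```bash
#!/usr/bin/env bash
# make_package.xml.sh
# Reads object API names from stdin (one per line) and writes a package.xml to stdout
# mchinnappan

API_VERSION=56.0

prefix=$(cat <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<Package xmlns="http://soap.sforce.com/2006/04/metadata">
    <version>${API_VERSION}</version>
    <types>
        <name>CustomObject</name>
EOF
)

# Quote the variable so its newlines (and the ? in the XML declaration) are kept as-is
echo "${prefix}"

# IFS= and -r keep each input line exactly as read
while IFS= read -r line; do
    echo "        <members>${line}</members>"
done

suffix=$(cat <<EOF
    </types>
</Package>
EOF
)

echo "${suffix}"
```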

```bash
# If you do not have bat on your *nix box, use cat
# Get all custom objects and build package.xml from them
sfdx mohanc:md:describeGlobal -u mohan.chinnappan.n_ea2@gmail.com |
    tr ',' '\n' |
    grep -i "__c$" |
    bash ~/shell-scripts/bash/make_package.xml.sh > _package.xml
xmllint --format _package.xml > package.xml
bat package.xml
```

```
───────┬──────────────────────────────
       │ File: package.xml
───────┼──────────────────────────────
   1   │ <?xml version="1.0" encoding="UTF-8"?>
   2   │ <Package xmlns="http://soap.sforce.com/2006/04/metadata">
   3   │   <version>56.0</version>
   4   │   <types>
   5   │     <name>CustomObject</name>
   6   │     <members>AcquiredAccount__c</members>
   7   │     <members>Broker__c</members>
   8   │     <members>GanttLink__c</members>
   9   │     <members>GanttTask__c</members>
  10   │     <members>MyFilter__c</members>
  11   │     <members>OpportunityHistory__c</members>
  12   │     <members>Property__c</members>
  13   │     <members>Stock_Position__c</members>
  14   │     <members>Teacher__c</members>
  15   │     <members>TruncateTest__c</members>
...
```
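The `grep -i "__c$"` filter above also matches custom objects that come from installed packages and carry a namespace prefix, such as the `sf_devops__` objects returned by the retrieve later in this section. If you want to treat them separately, a sketch like the following splits the names. `classify_custom_objects` is a hypothetical helper; it assumes one API name per line and treats any remaining `__` before the `__c` suffix as the namespace separator.

```bash
#!/usr/bin/env bash
# Hypothetical helper: split custom object names (one per line on stdin) into
# unmanaged ones (Name__c) and namespaced ones (ns__Name__c).
# Output lines: "unmanaged,,<name>" or "namespaced,<ns>,<name>".
classify_custom_objects() {
    grep '__c$' | awk '{
        base = $0
        sub(/__c$/, "", base)            # drop the __c suffix
        pos = index(base, "__")          # a remaining "__" marks a namespace prefix
        if (pos > 0) {
            print "namespaced," substr(base, 1, pos - 1) "," $0
        } else {
            print "unmanaged,," $0
        }
    }'
}

# Usage:
#   sfdx mohanc:md:describeGlobal -u <username> | tr ',' '\n' | classify_custom_objects
```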

Retrieve the metadata for the components listed in package.xml:

```bash
sfdx force:mdapi:retrieve -k ./package.xml -u mohan.chinnappan.n_ea2@gmail.com -r .
```

```
Retrieving v56.0 metadata from mohan.chinnappan.n_ea2@gmail.com using the v57.0 SOAP API
Retrieve ID: 09S3h000007GKGdEAO
Retrieving metadata from mohan.chinnappan.n_ea2@gmail.com... done
Wrote retrieve zip to /private/tmp/meta-export/mdexport/unpackaged.zip
```

```bash
jar tvf unpackaged.zip
```

```
16429 Tue Apr 04 17:52:02 EDT 2023 unpackaged/objects/sf_devops__Object_Activity__c.object
16058 Tue Apr 04 17:52:02 EDT 2023 unpackaged/objects/sf_devops__Pipeline_Stage__c.object
 7087 Tue Apr 04 17:52:02 EDT 2023 unpackaged/objects/sf_devops__Pipeline__c.object
 5616 Tue Apr 04 17:52:02 EDT 2023 unpackaged/objects/MyFilter__c.object
 8383 Tue Apr 04 17:52:02 EDT 2023 unpackaged/objects/sf_devops__Project__c.object
11274 Tue Apr 04 17:52:02 EDT 2023 unpackaged/objects/sf_devops__Remote_Change__c.object
11079 Tue Apr 04 17:52:02 EDT 2023 unpackaged/objects/sf_devops__Repository__c.object
 7874 Tue Apr 04 17:52:02 EDT 2023 unpackaged/objects/sf_devops__Source_Member_Reference__c.object
11355 Tue Apr 04 17:52:02 EDT 2023 unpackaged/objects/sf_devops__Vcs_Event__c.object
 5159 Tue Apr 04 17:52:02 EDT 2023 unpackaged/objects/Teacher__c.object
 5888 Tue Apr 04 17:52:02 EDT 2023 unpackaged/objects/Stock_Position__c.object
 7700 Tue Apr 04 17:52:02 EDT 2023 unpackaged/objects/Broker__c.object
17198 Tue Apr 04 17:52:02 EDT 2023 unpackaged/objects/Property__c.object
 9307 Tue Apr 04 17:52:02 EDT 2023 unpackaged/objects/sf_devops__Async_Operation_Result__c.object
 8572 Tue Apr 04 17:52:02 EDT 2023 unpackaged/objects/sf_devops__Back_Sync__c.object
10427 Tue Apr 04 17:52:02 EDT 2023 unpackaged/objects/sf_devops__Change_Bundle_Install__c.object
 7122 Tue Apr 04 17:52:02 EDT 2023 unpackaged/objects/sf_devops__Change_Bundle__c.object
13690 Tue Apr 04 17:52:02 EDT 2023 unpackaged/objects/sf_devops__Change_Submission__c.object
13408 Tue Apr 04 17:52:02 EDT 2023 unpackaged/objects/sf_devops__Environment__c.object
 7164 Tue Apr 04 17:52:02 EDT 2023 unpackaged/objects/sf_devops__Hidden_Remote_Change__c.object
12752 Tue Apr 04 17:52:02 EDT 2023 unpackaged/objects/sf_devops__Work_Item_Promote__c.object
 7874 Tue Apr 04 17:52:02 EDT 2023 unpackaged/objects/AcquiredAccount__c.object
26381 Tue Apr 04 17:52:02 EDT 2023 unpackaged/objects/sf_devops__Work_Item__c.object
10679 Tue Apr 04 17:52:02 EDT 2023 unpackaged/objects/OpportunityHistory__c.object
 6115 Tue Apr 04 17:52:02 EDT 2023 unpackaged/objects/GanttLink__c.object
 6390 Tue Apr 04 17:52:02 EDT 2023 unpackaged/objects/GanttTask__c.object
 7916 Tue Apr 04 17:52:02 EDT 2023 unpackaged/objects/sf_devops__Deploy_Component__c.object
 7924 Tue Apr 04 17:52:02 EDT 2023 unpackaged/objects/sf_devops__Branch__c.object
 2576 Tue Apr 04 17:52:02 EDT 2023 unpackaged/objects/WrikeAccountSettings__c.object
 1487 Tue Apr 04 17:52:02 EDT 2023 unpackaged/objects/Wrike_API__c.object
 1488 Tue Apr 04 17:52:02 EDT 2023 unpackaged/objects/Wrike_Bindings__c.object
 9129 Tue Apr 04 17:52:02 EDT 2023 unpackaged/objects/sf_devops__Submit_Component__c.object
 7598 Tue Apr 04 17:52:02 EDT 2023 unpackaged/objects/sf_devops__Merge_Result__c.object
10249 Tue Apr 04 17:52:02 EDT 2023 unpackaged/objects/sf_devops__Deployment_Result__c.object
 5484 Tue Apr 04 17:52:02 EDT 2023 unpackaged/objects/TruncateTest__c.object
 1976 Tue Apr 04 17:52:02 EDT 2023 unpackaged/package.xml
```
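`jar tvf` prints the size, timestamp, and path of each entry in the zip. To confirm that every object requested in package.xml came back, you can compare the two lists. This is a minimal sketch: `retrieved_objects`, `requested_objects`, and `missing_objects` are hypothetical helpers. They assume the listing was saved to a file and that package.xml contains only CustomObject members, one `<members>` element per line, as in the generated file above.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: check which package.xml members are missing from the retrieve zip.

# Object names found in a jar-tvf style listing on stdin (the path is the last column).
retrieved_objects() {
    awk '{ print $NF }' |
        sed -n 's#^unpackaged/objects/\(.*\)\.object$#\1#p' |
        LC_ALL=C sort -u
}

# Member names in package.xml ($1); assumes only CustomObject members are listed.
requested_objects() {
    sed -n 's#.*<members>\(.*\)</members>.*#\1#p' "$1" | LC_ALL=C sort -u
}

# $1: package.xml, $2: saved listing. Prints requested members absent from the zip.
missing_objects() {
    local requested retrieved
    requested="$(mktemp)"; retrieved="$(mktemp)"
    requested_objects "$1" > "$requested"
    retrieved_objects < "$2" > "$retrieved"
    LC_ALL=C comm -23 "$requested" "$retrieved"   # lines only in the requested list
    rm -f "$requested" "$retrieved"
}

# Usage:
#   jar tvf unpackaged.zip > listing.txt
#   missing_objects package.xml listing.txt
```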

ERD for the given list of Salesforce Objects using CLI

```bash
sfdx mohanc:md:describe -u mohan.chinnappan.n.sel2@gmail.com -s Contact,Account -e contact-account.dot > contact-account.csv
cat contact-account.dot | pbcopy
open "https://mohan-chinnappan-n.github.io/viz/viz.html?c=1"
```

Compare SObjects in 2 orgs

View the SObjects in the org

```bash
sfdx mohanc:md:describeGlobal -u mohan.chinnappan.n.sel@gmail.com | tr ',' '\n' | pbcopy
open "https://mohan-chinnappan-n5.github.io/viz/datatable/dt.html?c=csv"
```

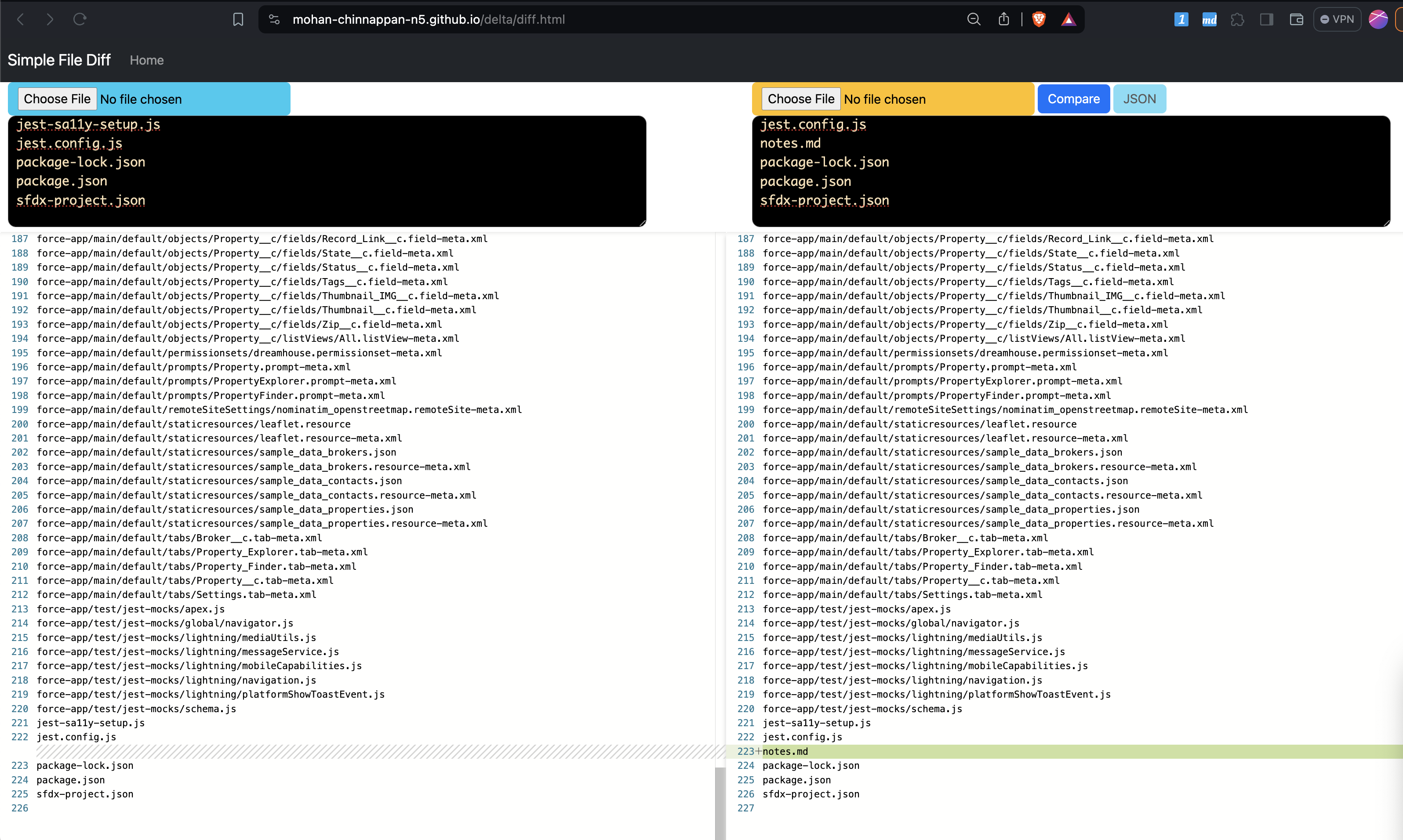

Using the diff app

- Run the org-sobjects-compare script.

- Usage:

```bash
./org-sobjects-compare.sh
```

```
Org SObjects Compare tool - compares SObjects in the given two orgs
Usage: org-sobjects-compare.sh <username1> <username2>
```

- Run:

```bash
org-sobjects-compare.sh mohan.chinnappan.n.sel@gmail.com mohan.chinnappan.n.sel2@gmail.com
```

```
Org SObjects Compare tool - compares SObjects in the given two orgs
=== Getting sobjects for the org with username: mohan.chinnappan.n.sel@gmail.com... ===
Paste the clipboard content into the left side of the diff app. Then press enter to continue to the next org...
=== Getting sobjects for the org with username: mohan.chinnappan.n.sel2@gmail.com... ===
=== Now you can paste the content in the clipboard into the right side of the diff app and press Compare button... ===
```

- Demo
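If you prefer to stay in the terminal instead of pasting into the diff app, `comm` can produce a similar comparison. This sketch assumes each org's describeGlobal output was first saved to a file as a comma-separated list; `to_sorted_lines` and `compare_sobjects` are hypothetical helpers and are not part of org-sobjects-compare.sh.

```bash
#!/usr/bin/env bash
# Hypothetical, terminal-only alternative to the diff app:
# compare two comma-separated sObject lists (assumed describeGlobal output).

# One name per line, whitespace removed, bytewise sorted and de-duplicated.
to_sorted_lines() {
    tr ',' '\n' | sed 's/[[:space:]]//g' | grep -v '^$' | LC_ALL=C sort -u
}

# $1, $2: files with each org's describeGlobal output.
# "< name" exists only in the first org, "> name" only in the second.
compare_sobjects() {
    local left right
    left="$(mktemp)"; right="$(mktemp)"
    to_sorted_lines < "$1" > "$left"
    to_sorted_lines < "$2" > "$right"
    # comm -3 hides common names; names unique to file 2 are prefixed with a tab
    LC_ALL=C comm -3 "$left" "$right" |
        awk 'BEGIN { FS = "\t" } { if ($1 != "") print "< " $1; else print "> " $2 }'
    rm -f "$left" "$right"
}

# Usage:
#   sfdx mohanc:md:describeGlobal -u <username1> > org1.txt
#   sfdx mohanc:md:describeGlobal -u <username2> > org2.txt
#   compare_sobjects org1.txt org2.txt
```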

Data Loading with Bulk API 2.0

How to use the DX plugin for Bulk API 2.0 data loads

Topics

How to use mohanc:data:bulkapi:load

```bash
sfdx mohanc:data:bulkapi:load -h
```

```
Data Load using BulkAPI 2

USAGE
  $ sfdx mohanc:data:bulkapi:load

OPTIONS
  -e, --lineending=lineending          Line Ending (LF or CRLF), default: LF
  -f, --inputfile=inputfile            CSV file to load, default: input.csv
  -o, --sobject=sobject                sObject to load into, default: Case
  -u, --targetusername=targetusername  username or alias for the target org; overrides default target org
  --apiversion=apiversion              override the api version used for api requests made by this command
  --json                               format output as json
  --loglevel=(trace|debug|info|warn|error|fatal)  logging level for this command invocation

EXAMPLE
  sfdx mohanc:bulkapi:load -u <username> -f input.csv -e LF -o Case
```
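The log in this section shows the plugin driving a Bulk API 2.0 ingest job: it creates the job (state `Open`), PUTs the CSV data, PATCHes the state to `UploadComplete`, and polls the job status until it reaches `JobComplete`. For reference, here is a minimal curl sketch of the same REST sequence; it is not the plugin's implementation. `INSTANCE_URL`, `ACCESS_TOKEN`, the `API_VERSION` default, the use of jq, and the helper names are all assumptions, and error handling is kept to a bare minimum.

```bash
#!/usr/bin/env bash
# Minimal sketch of the Bulk API 2.0 ingest sequence with curl.
# Assumptions: INSTANCE_URL and ACCESS_TOKEN are exported (for example, taken
# from `sfdx force:org:display -u <username>`), and curl and jq are installed.

API_VERSION="${API_VERSION:-56.0}"

# JSON body for the "create job" request: $1 sObject, $2 operation, $3 line ending.
job_body() {
    printf '{"object":"%s","operation":"%s","contentType":"CSV","lineEnding":"%s"}\n' \
        "$1" "$2" "$3"
}

# Ingest URL; $1 is the path after /jobs/ingest (may be empty).
ingest_url() {
    echo "${INSTANCE_URL}/services/data/v${API_VERSION}/jobs/ingest$1"
}

# Usage: bulk_load <csv file> <sObject>
bulk_load() {
    local auth job_id state
    auth="Authorization: Bearer ${ACCESS_TOKEN}"

    # 1. Create the job (state Open) and read its id from the JSON response.
    job_id=$(curl -s -X POST "$(ingest_url "")" -H "$auth" \
        -H "Content-Type: application/json" \
        -d "$(job_body "$2" insert LF)" | jq -r '.id')
    if [ -z "$job_id" ] || [ "$job_id" = "null" ]; then
        echo "job creation failed" >&2; return 1
    fi

    # 2. Upload the CSV data for the job.
    curl -s -X PUT "$(ingest_url "/${job_id}/batches")" -H "$auth" \
        -H "Content-Type: text/csv" --data-binary "@$1"

    # 3. Mark the upload as complete so Salesforce starts processing.
    curl -s -X PATCH "$(ingest_url "/${job_id}")" -H "$auth" \
        -H "Content-Type: application/json" \
        -d '{"state":"UploadComplete"}' > /dev/null

    # 4. Poll the job status until it reaches a terminal state.
    while :; do
        state=$(curl -s "$(ingest_url "/${job_id}")" -H "$auth" | jq -r '.state')
        echo "job ${job_id}: ${state}"
        case "$state" in
            JobComplete|Failed|Aborted) break ;;
            ""|null) echo "could not read job state" >&2; return 1 ;;
        esac
        sleep 5
    done
}

# Usage:
#   bulk_load /tmp/input.csv Case
```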

Example

```bash
sfdx mohanc:data:bulkapi:load -u mohan.chinnappan.n_ea2@gmail.com -f /tmp/input.csv -e LF -o Case
```

Input file

```bash
cat /tmp/input.csv
```

```
Subject,Priority
Engine cylinder has knocking,High
Wiper Blade needs replacement,Low
```

Output

```
=== CREATE JOB ===
{
  id: '7503h000003pgNYAAY',
  operation: 'insert',
  object: 'Case',
  createdById: '0053h000002xQ5sAAE',
  createdDate: '2020-08-04T04:32:41.000+0000',
  systemModstamp: '2020-08-04T04:32:41.000+0000',
  state: 'Open',
  concurrencyMode: 'Parallel',
  contentType: 'CSV',
  apiVersion: 49,
  contentUrl: 'services/data/v49.0/jobs/ingest/7503h000003pgNYAAY/batches',
  lineEnding: 'LF',
  columnDelimiter: 'COMMA'
}
jobId: 7503h000003pgNYAAY
=== JOB STATUS ===
=== JOB STATUS for job: 7503h000003pgNYAAY ===
{
  id: '7503h000003pgNYAAY',
  operation: 'insert',
  object: 'Case',
  createdById: '0053h000002xQ5sAAE',
  createdDate: '2020-08-04T04:32:41.000+0000',
  systemModstamp: '2020-08-04T04:32:41.000+0000',
  state: 'Open',
  concurrencyMode: 'Parallel',
  contentType: 'CSV',
  apiVersion: 49,
  jobType: 'V2Ingest',
  contentUrl: 'services/data/v49.0/jobs/ingest/7503h000003pgNYAAY/batches',
  lineEnding: 'LF',
  columnDelimiter: 'COMMA',
  retries: 0,
  totalProcessingTime: 0,
  apiActiveProcessingTime: 0,
  apexProcessingTime: 0
}
=== PUT DATA ===
result: status: 201, statusText: Created
=== JOB STATUS ===
=== JOB STATUS for job: 7503h000003pgNYAAY ===
{
  id: '7503h000003pgNYAAY',
  operation: 'insert',
  object: 'Case',
  createdById: '0053h000002xQ5sAAE',
  createdDate: '2020-08-04T04:32:41.000+0000',
  systemModstamp: '2020-08-04T04:32:41.000+0000',
  state: 'Open',
  concurrencyMode: 'Parallel',
  contentType: 'CSV',
  apiVersion: 49,
  jobType: 'V2Ingest',
  contentUrl: 'services/data/v49.0/jobs/ingest/7503h000003pgNYAAY/batches',
  lineEnding: 'LF',
  columnDelimiter: 'COMMA',
  numberRecordsProcessed: 0,
  numberRecordsFailed: 0,
  retries: 0,
  totalProcessingTime: 0,
  apiActiveProcessingTime: 0,
  apexProcessingTime: 0
}
=== PATCH STATE ===
{
  id: '7503h000003pgNYAAY',
  operation: 'insert',
  object: 'Case',
  createdById: '0053h000002xQ5sAAE',
  createdDate: '2020-08-04T04:32:41.000+0000',
  systemModstamp: '2020-08-04T04:32:41.000+0000',
  state: 'UploadComplete',
  concurrencyMode: 'Parallel',
  contentType: 'CSV',
  apiVersion: 49
}
=== JOB STATUS ===
=== JOB STATUS for job: 7503h000003pgNYAAY ===
jobStatus {
  id: '7503h000003pgNYAAY',
  operation: 'insert',
  object: 'Case',
  createdById: '0053h000002xQ5sAAE',
  createdDate: '2020-08-04T04:32:41.000+0000',
  systemModstamp: '2020-08-04T04:32:43.000+0000',
  state: 'InProgress',
  concurrencyMode: 'Parallel',
  contentType: 'CSV',
  apiVersion: 49,
  jobType: 'V2Ingest',
  lineEnding: 'LF',
  columnDelimiter: 'COMMA',
  numberRecordsProcessed: 0,
  numberRecordsFailed: 0,
  retries: 0,
  totalProcessingTime: 0,
  apiActiveProcessingTime: 0,
  apexProcessingTime: 0
}
WAITING...
```

{

id: '7503h000003pgNYAAY',

operation: 'insert',

object: 'Case',

createdById: '0053h000002xQ5sAAE',

createdDate: '2020-08-04T04:32:41.000+0000',

systemModstamp: '2020-08-04T04:32:43.000+0000',

state: 'JobComplete',

concurrencyMode: 'Parallel',

contentType: 'CSV',

apiVersion: 49,

jobType: 'V2Ingest',

lineEnding: 'LF',

columnDelimiter: 'COMMA',

numberRecordsProcessed: 2,

numberRecordsFailed: 0,

retries: 0,

totalProcessingTime: 152,

apiActiveProcessingTime: 57,

apexProcessingTime: 0

}

=== JOB Failure STATUS ===

=== JOB Failure STATUS for job: 7503h000003pgNYAAY ===

"sf__Id","sf__Error",Priority,Subject

=== JOB getUnprocessedRecords STATUS ===

=== JOB getUnprocessedRecords STATUS for job: 7503h000003pgNYAAY ===

Subject,Priority
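The log above follows the Bulk API 2.0 ingest lifecycle. The tool creates a job (state Open), uploads the CSV to the job's batches URL, PATCHes the state to UploadComplete, polls until the job reaches a terminal state, and then reads the failed and unprocessed records. Below is a minimal Python sketch of that sequence. It is written as plain request descriptions plus a polling loop, so it runs without an org. The helper names and the injected get_state callable are assumptions, not the plugin's code. The resource paths follow the documented Bulk API 2.0 endpoints.

```python
import json
import time

# States after which a Bulk API 2.0 job no longer changes.
TERMINAL_STATES = {"JobComplete", "Failed", "Aborted"}

def ingest_requests(api_version, sobject, line_ending="LF", operation="insert"):
    """Describe the CREATE JOB, PUT DATA and PATCH STATE calls as tuples.

    "{jobId}" is a placeholder for the id returned by the create call.
    """
    base = f"/services/data/v{api_version}/jobs/ingest"
    create = {"object": sobject, "contentType": "CSV",
              "operation": operation, "lineEnding": line_ending}
    return [
        ("POST", base, json.dumps(create)),                        # job is Open
        ("PUT", base + "/{jobId}/batches", "<CSV file contents>"),  # upload data
        ("PATCH", base + "/{jobId}",
         json.dumps({"state": "UploadComplete"})),                 # close the job
    ]

def result_paths(api_version, job_id):
    """Paths for the per-record result CSVs of a finished ingest job."""
    base = f"/services/data/v{api_version}/jobs/ingest/{job_id}"
    return {
        "successful": base + "/successfulResults",
        "failed": base + "/failedResults",
        "unprocessed": base + "/unprocessedrecords",
    }

def wait_for_job(get_state, poll_seconds=2.0, max_polls=100, sleep=time.sleep):
    """Call get_state() until the job reaches a terminal state."""
    for _ in range(max_polls):
        state = get_state()
        if state in TERMINAL_STATES:
            return state
        print("WAITING...")
        sleep(poll_seconds)
    raise TimeoutError("job did not reach a terminal state")

# Replay the states seen in the log without contacting an org.
states = iter(["UploadComplete", "InProgress", "JobComplete"])
final_state = wait_for_job(lambda: next(states), sleep=lambda _: None)
print(final_state)
```

Injecting get_state and sleep keeps the loop testable. In real use, get_state would GET the job URL and return its state field.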

Loading Platform Events via Bulk API 2.0

Resources

$ cat ~/tmp/pe_msg.csv

message__c

Power Off

$ sfdx mohanc:data:bulkapi:load -u mohan.chinnappan.n_ea2@gmail.com -f ~/tmp/pe_msg.csv -e LF -o Notification__e

=== CREATE JOB ===

{

id: '7503h000009XNPkAAO',

operation: 'insert',

object: 'Notification__e',

createdById: '0053h000002xQ5sAAE',

createdDate: '2021-04-05T22:40:39.000+0000',

systemModstamp: '2021-04-05T22:40:39.000+0000',

state: 'Open',

concurrencyMode: 'Parallel',

contentType: 'CSV',

apiVersion: 51,

contentUrl: 'services/data/v51.0/jobs/ingest/7503h000009XNPkAAO/batches',

lineEnding: 'LF',

columnDelimiter: 'COMMA'

}

jobId: 7503h000009XNPkAAO

=== JOB STATUS ===

=== JOB STATUS for job: 7503h000009XNPkAAO ===

{

id: '7503h000009XNPkAAO',

operation: 'insert',

object: 'Notification__e',

createdById: '0053h000002xQ5sAAE',

createdDate: '2021-04-05T22:40:39.000+0000',

systemModstamp: '2021-04-05T22:40:39.000+0000',

state: 'Open',

concurrencyMode: 'Parallel',

contentType: 'CSV',

apiVersion: 51,

jobType: 'V2Ingest',

contentUrl: 'services/data/v51.0/jobs/ingest/7503h000009XNPkAAO/batches',

lineEnding: 'LF',

columnDelimiter: 'COMMA',

retries: 0,

totalProcessingTime: 0,

apiActiveProcessingTime: 0,

apexProcessingTime: 0

}

=== PUT DATA ===

result: status: 201, statusText: Created

=== JOB STATUS ===

=== JOB STATUS for job: 7503h000009XNPkAAO ===

{

id: '7503h000009XNPkAAO',

operation: 'insert',

object: 'Notification__e',

createdById: '0053h000002xQ5sAAE',

createdDate: '2021-04-05T22:40:39.000+0000',

systemModstamp: '2021-04-05T22:40:39.000+0000',

state: 'Open',

concurrencyMode: 'Parallel',

contentType: 'CSV',

apiVersion: 51,

jobType: 'V2Ingest',

contentUrl: 'services/data/v51.0/jobs/ingest/7503h000009XNPkAAO/batches',

lineEnding: 'LF',

columnDelimiter: 'COMMA',

numberRecordsProcessed: 0,

numberRecordsFailed: 0,

retries: 0,

totalProcessingTime: 0,

apiActiveProcessingTime: 0,

apexProcessingTime: 0

}

=== PATCH STATE ===

{

id: '7503h000009XNPkAAO',

operation: 'insert',

object: 'Notification__e',

createdById: '0053h000002xQ5sAAE',

createdDate: '2021-04-05T22:40:39.000+0000',

systemModstamp: '2021-04-05T22:40:39.000+0000',

state: 'UploadComplete',

concurrencyMode: 'Parallel',

contentType: 'CSV',

apiVersion: 51

}

=== JOB STATUS ===

=== JOB STATUS for job: 7503h000009XNPkAAO ===

jobStatus {

id: '7503h000009XNPkAAO',

operation: 'insert',

object: 'Notification__e',

createdById: '0053h000002xQ5sAAE',

createdDate: '2021-04-05T22:40:39.000+0000',

systemModstamp: '2021-04-05T22:40:41.000+0000',

state: 'InProgress',

concurrencyMode: 'Parallel',

contentType: 'CSV',

apiVersion: 51,

jobType: 'V2Ingest',

lineEnding: 'LF',

columnDelimiter: 'COMMA',

numberRecordsProcessed: 1,

numberRecordsFailed: 0,

retries: 0,

totalProcessingTime: 61,

apiActiveProcessingTime: 12,

apexProcessingTime: 0

}

WAITING...

{

id: '7503h000009XNPkAAO',

operation: 'insert',

object: 'Notification__e',

createdById: '0053h000002xQ5sAAE',

createdDate: '2021-04-05T22:40:39.000+0000',

systemModstamp: '2021-04-05T22:40:42.000+0000',

state: 'JobComplete',

concurrencyMode: 'Parallel',

contentType: 'CSV',

apiVersion: 51,

jobType: 'V2Ingest',

lineEnding: 'LF',

columnDelimiter: 'COMMA',

numberRecordsProcessed: 1,

numberRecordsFailed: 0,

retries: 0,

totalProcessingTime: 61,

apiActiveProcessingTime: 12,

apexProcessingTime: 0

}

=== JOB Failure STATUS ===

=== JOB Failure STATUS for job: 7503h000009XNPkAAO ===

"sf__Id","sf__Error",message__c

=== JOB getUnprocessedRecords STATUS ===

=== JOB getUnprocessedRecords STATUS for job: 7503h000009XNPkAAO ===

message__c
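In both runs above, the failure file holds only its header row, which means no record failed. When records do fail, the failed-results CSV repeats each rejected input row after two extra columns, sf__Id and sf__Error. Here is a minimal Python sketch (the helper names are hypothetical) that tallies the error messages and strips the sf__ columns, so the rejected rows can be fixed and reloaded:

```python
import csv
import io
from collections import Counter

def summarize_failures(failed_csv):
    """Count rejected rows per sf__Error message in a failed-results CSV."""
    return Counter(row["sf__Error"] for row in csv.DictReader(io.StringIO(failed_csv)))

def retry_file(failed_csv):
    """Drop the sf__ columns, leaving the original fields for a reload."""
    reader = csv.DictReader(io.StringIO(failed_csv))
    fields = [f for f in (reader.fieldnames or []) if not f.startswith("sf__")]
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=fields, extrasaction="ignore",
                            lineterminator="\n")
    writer.writeheader()
    writer.writerows(reader)  # extrasaction="ignore" skips the sf__ values
    return out.getvalue()

# The header-only failure file from the Platform Event run above.
print(summarize_failures('"sf__Id","sf__Error",message__c\n'))
```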

Results

User Loading

Table of contents

Using CLI for BulkAPI 2.0

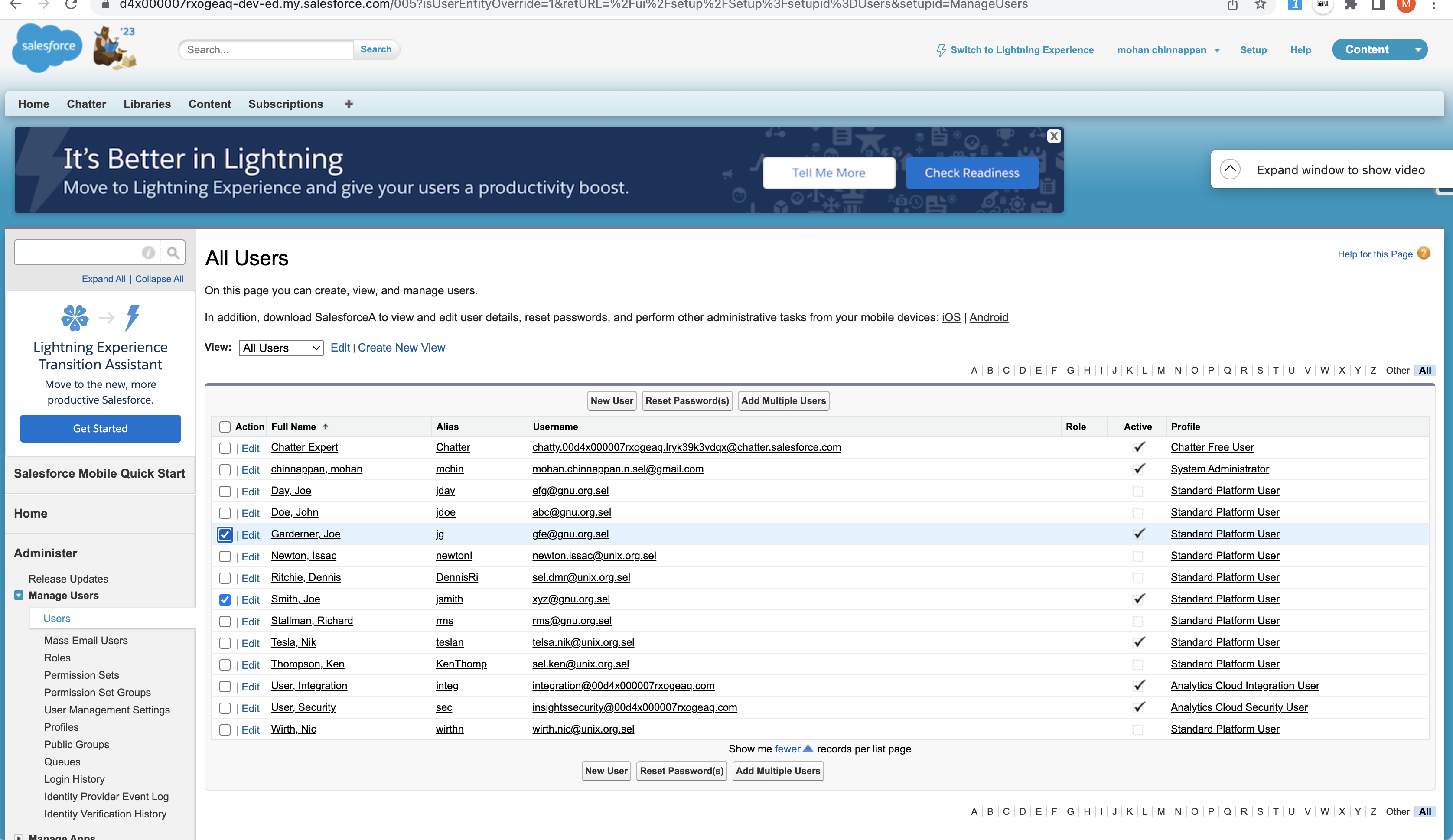

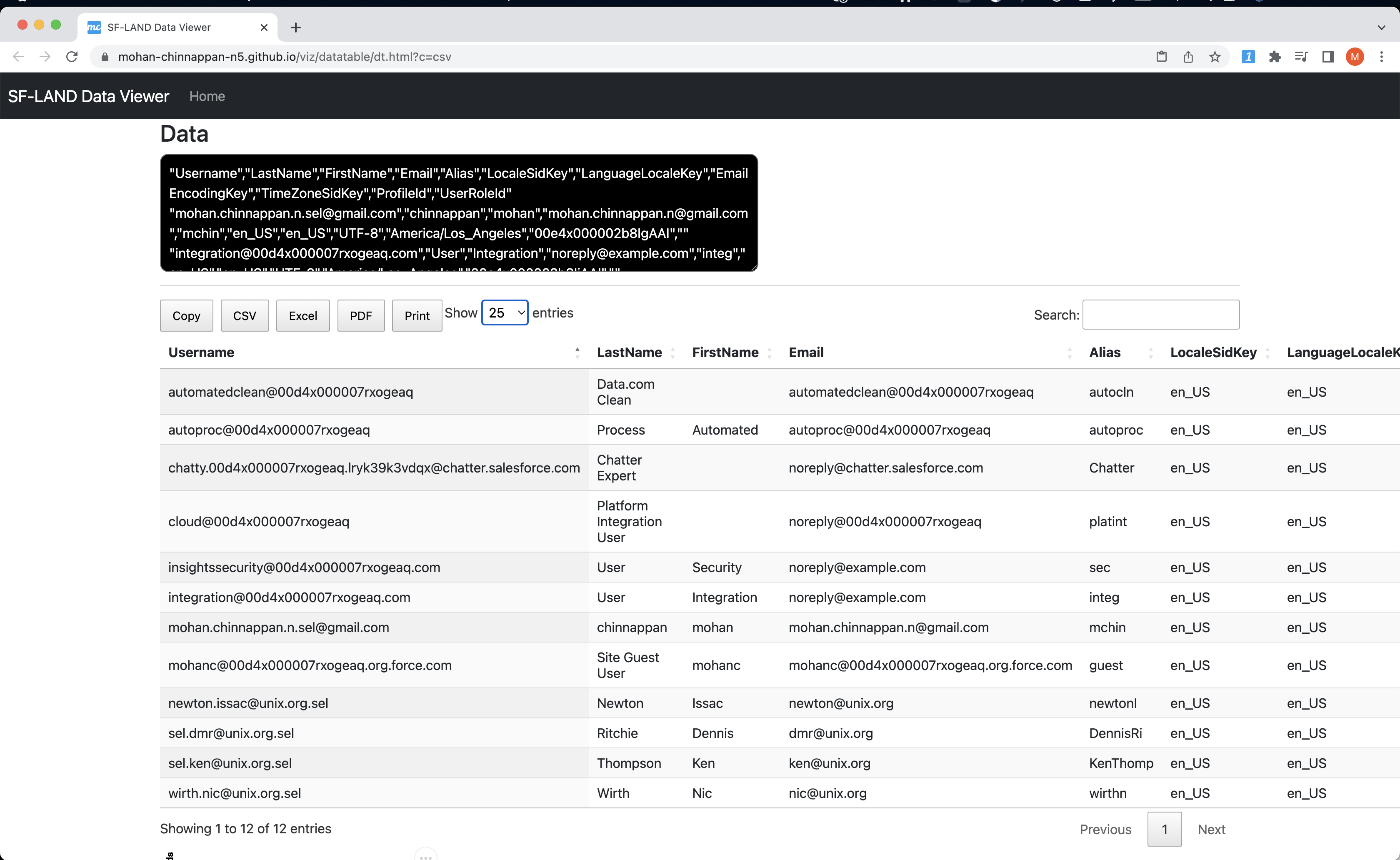

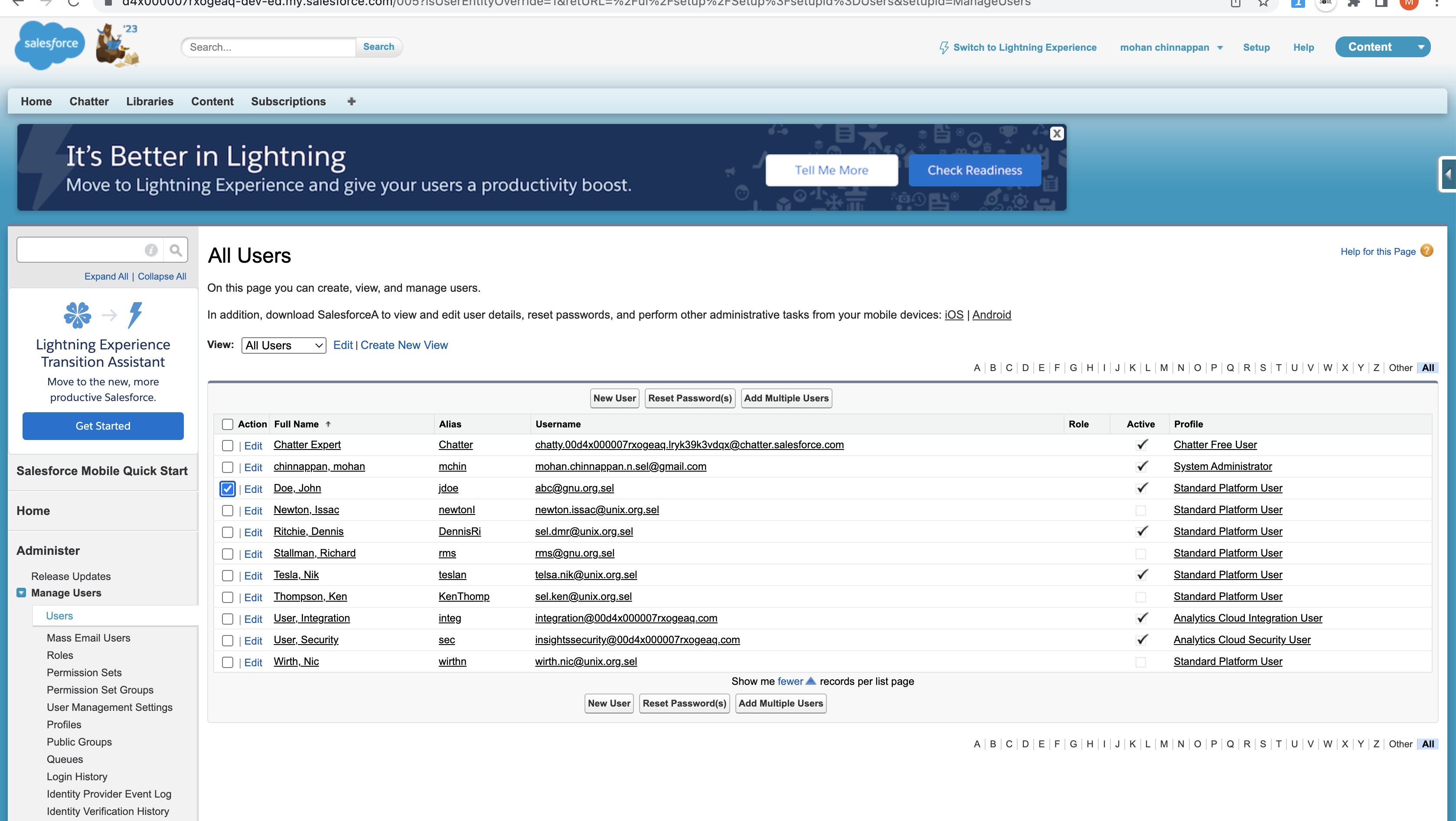

1. Query the current users in the org

sfdx mohanc:data:bulkapi:query -u mohan.chinnappan.n.sel@gmail.com -q ~/.soql/users.soql; pbcopy < ~/.soql/users.soql.csv ; open "https://mohan-chinnappan-n5.github.io/viz/datatable/dt.html?c=csv"

-- query used

SELECT Username, LastName, FirstName, Email, Alias,

LOCALESIDKEY,LANGUAGELOCALEKEY,EMAILENCODINGKEY,TIMEZONESIDKEY,PROFILEID,USERROLEID

FROM User

https://d4x000007rxogeaq-dev-ed.my.salesforce.com/services/data/v57.0/jobs/query

{

id: '7504x00000XkfyMAAR',

operation: 'query',

object: 'User',

createdById: '0054x000006Riv4AAC',

createdDate: '2023-03-21T10:43:31.000+0000',

systemModstamp: '2023-03-21T10:43:31.000+0000',

state: 'UploadComplete',

concurrencyMode: 'Parallel',

contentType: 'CSV',

apiVersion: 57,

lineEnding: 'LF',

columnDelimiter: 'COMMA'

}

=== JOB STATUS ===

=== JOB STATUS for job: 7504x00000XkfyMAAR ===

{

id: '7504x00000XkfyMAAR',

operation: 'query',

object: 'User',

createdById: '0054x000006Riv4AAC',

createdDate: '2023-03-21T10:43:31.000+0000',

systemModstamp: '2023-03-21T10:43:31.000+0000',

state: 'InProgress',

concurrencyMode: 'Parallel',

contentType: 'CSV',

apiVersion: 57,

jobType: 'V2Query',

lineEnding: 'LF',

columnDelimiter: 'COMMA',

numberRecordsProcessed: 0,

retries: 0,

totalProcessingTime: 0

}

WAITING...

{

id: '7504x00000XkfyMAAR',

operation: 'query',

object: 'User',

createdById: '0054x000006Riv4AAC',

createdDate: '2023-03-21T10:43:31.000+0000',

systemModstamp: '2023-03-21T10:43:32.000+0000',

state: 'JobComplete',

concurrencyMode: 'Parallel',

contentType: 'CSV',

apiVersion: 57,

jobType: 'V2Query',

lineEnding: 'LF',

columnDelimiter: 'COMMA',

numberRecordsProcessed: 12,

retries: 0,

totalProcessingTime: 157

}

==== Job State: JobComplete ====

=== Total time taken to process the job : 157 milliseconds ===

=== Total records processed : 12 ===

https://d4x000007rxogeaq-dev-ed.my.salesforce.com/services/data/v57.0/jobs/query/7504x00000XkfyMAAR/results

==== Output CSV file written into : /Users/mchinnappan/.soql/users.soql.csv ===

==== View the output file : /Users/mchinnappan/.soql/users.soql.csv using:

cat /Users/mchinnappan/.soql/users.soql.csv ===

=== JOB Failure STATUS ===

=== JOB Failure STATUS for job: 7504x00000XkfyMAAR === "sf__Id","sf__Error","Username","LastName","FirstName","Email","Alias","LocaleSidKey","LanguageLocaleKey","EmailEncodingKey","TimeZoneSidKey","ProfileId","UserRoleId"

===

"sf__Id","sf__Error","Username","LastName","FirstName","Email","Alias","LocaleSidKey","LanguageLocaleKey","EmailEncodingKey","TimeZoneSidKey","ProfileId","UserRoleId"

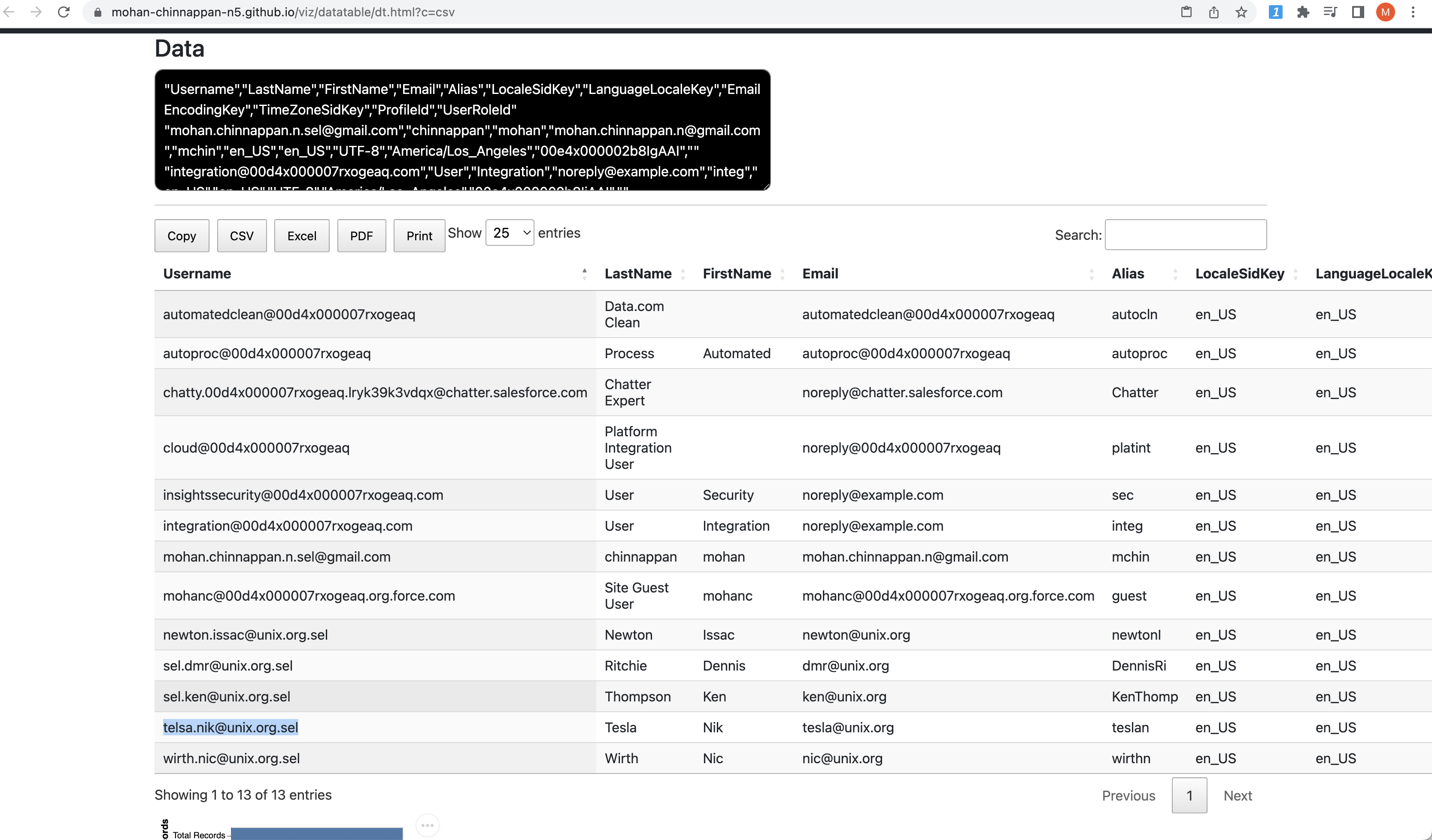

2. View the users in this app, which the command in step 1 opens automatically

3. Edit the query results in your favorite app to add the user(s) you want to load (I use vim; you can also use an app like Excel)

- My input data file looks like this:

"Username","LastName","FirstName","Email","Alias","LocaleSidKey","LanguageLocaleKey","EmailEncodingKey","TimeZoneSidKey","ProfileId","UserRoleId"

"telsa.nik@unix.org.sel","Tesla","Nik","tesla@unix.org","teslan","en_US","en_US","UTF-8","America/Los_Angeles","00e4x000002b8InAAI",""

4. Let us load the data for the new user(s)

sfdx mohanc:data:bulkapi:load -u mohan.chinnappan.n.sel@gmail.com -f /Users/mchinnappan/.soql/users.soql.csv -e LF -o User

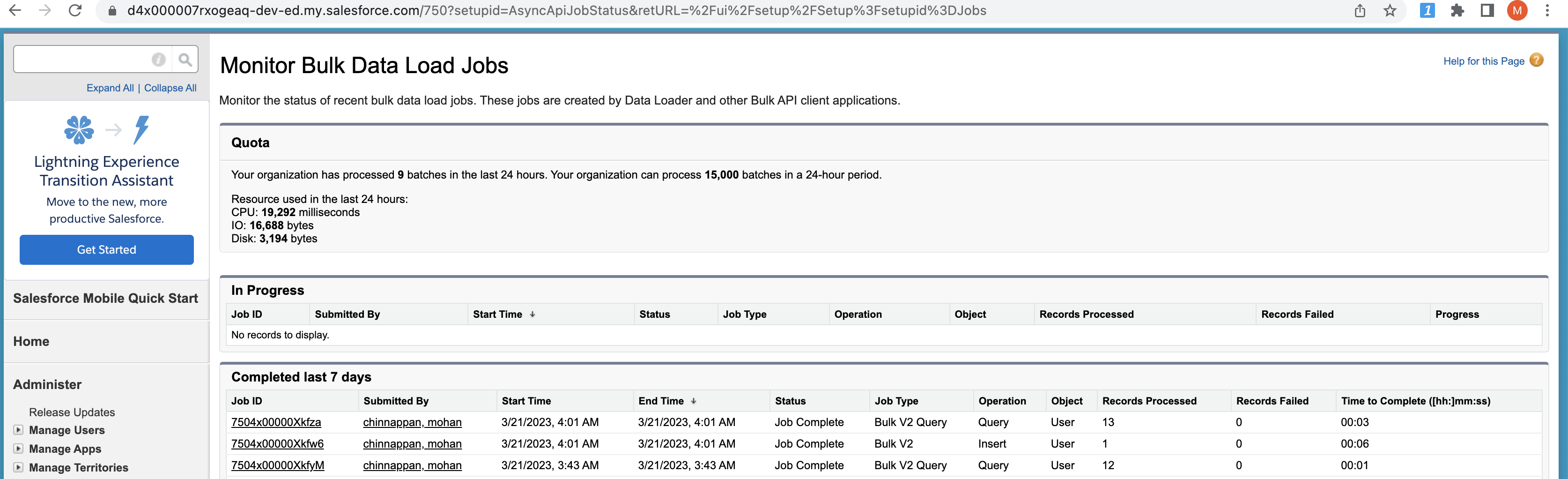

5. Query the User object to verify the load

- Check the status of the bulk data load jobs we created
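Before running step 4, you can catch rows that the insert would reject. Below is a minimal Python sketch, assuming the input file uses the header shown in step 3 (check_user_rows is a hypothetical helper). The field list reflects the fields Salesforce requires on a new User (UserRoleId is optional), and Alias is limited to 8 characters.

```python
import csv
import io

# Fields Salesforce requires when inserting a new User (UserRoleId is optional).
REQUIRED_USER_FIELDS = ["Username", "LastName", "Email", "Alias",
                        "TimeZoneSidKey", "LocaleSidKey", "EmailEncodingKey",
                        "ProfileId", "LanguageLocaleKey"]

def check_user_rows(csv_text):
    """Return (line_number, message) tuples for rows likely to be rejected."""
    reader = csv.DictReader(io.StringIO(csv_text))
    columns = reader.fieldnames or []
    missing = [f for f in REQUIRED_USER_FIELDS if f not in columns]
    if missing:
        return [(1, "missing columns: " + ", ".join(missing))]
    problems = []
    seen = set()
    for row in reader:
        line = reader.line_num  # physical line where this row ends
        empty = [f for f in REQUIRED_USER_FIELDS if not (row.get(f) or "").strip()]
        if empty:
            problems.append((line, "empty: " + ", ".join(empty)))
        if len(row.get("Alias") or "") > 8:
            problems.append((line, "Alias is longer than 8 characters"))
        username = (row.get("Username") or "").lower()
        if username in seen:
            problems.append((line, "duplicate Username in file: " + username))
        seen.add(username)
    return problems
```

An empty result means every row has the required values. Username uniqueness across the whole org still has to be checked by the load itself.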

Apex Way

List<User> users = new List<User>();

users.add( new User (

Username = 'abc@gnu.org.sel',

Email = 'abc@gnu.org.invalid',

FirstName = 'John',

LastName = 'Doe',

Alias = 'jdoe',

TimeZoneSidKey = 'America/New_York',

LocaleSidKey = 'en_US',

EmailEncodingKey = 'ISO-8859-1',

ProfileId = [SELECT Id FROM Profile WHERE Name ='Standard Platform User' LIMIT 1].Id,

LanguageLocaleKey = 'en_US'

));

// users.add( new User(...)

Insert users;

- Running it:

sfdx mohanc:tooling:execute -u mohan.chinnappan.n.sel@gmail.com -a ~/.apex/addUsers.cls

apexCode: //String profile = 'Standard Platform User';

//Id profileId = [SELECT Id FROM Profile WHERE Name =: profile LIMIT 1].Id;

List<User> users = new List<User>();

users.add( new User (

Username = 'abc@gnu.org.sel',

Email = 'abc@gnu.org.invalid',

FirstName = 'John',

LastName = 'Doe',

Alias = 'jdoe',

TimeZoneSidKey = 'America/New_York',

LocaleSidKey = 'en_US',

EmailEncodingKey = 'ISO-8859-1',

ProfileId = [SELECT Id FROM Profile WHERE Name ='Standard Platform User' LIMIT 1].Id,

LanguageLocaleKey = 'en_US'

));

// users.add( new User(...)

Insert users;

compiled?: true

executed?: true

{

line: -1,

column: -1,

compiled: true,

success: true,

compileProblem: null,

exceptionStackTrace: null,

exceptionMessage: null

}

Is there a script that can generate this Apex code?

- Yes!

cat users.csv

"Username","LastName","FirstName","Email","Alias","LocaleSidKey","LanguageLocaleKey","EmailEncodingKey","TimeZoneSidKey","ProfileName"

"gfe@gnu.org.sel","Garderner","Joe","jg@gnu.org.invalid","jg","en_US","en_US","ISO-8859-1","America/New_York","Standard Platform User"

"xyz@gnu.org.sel","Smith","Joe","xyz@gnu.org.invalid","jsmith","en_US","en_US","ISO-8859-1","America/New_York","Standard Platform User"

- Download the script userApexgen.py

- Run the script

python3 userApexgen.py users.csv > useradd.cls

cat useradd.cls

List<User> users = new List<User>();

users.add( new User (

Username = 'gfe@gnu.org.sel',

Email = 'jg@gnu.org.invalid',

FirstName = 'Joe',

LastName = 'Garderner',

Alias = 'jg',

TimeZoneSidKey = 'America/New_York',

LocaleSidKey = 'en_US',

EmailEncodingKey = 'ISO-8859-1',

ProfileId = [SELECT Id FROM Profile WHERE Name ='Standard Platform User' LIMIT 1].Id,

LanguageLocaleKey = 'en_US'

));

users.add( new User (

Username = 'xyz@gnu.org.sel',

Email = 'xyz@gnu.org.invalid',

FirstName = 'Joe',

LastName = 'Smith',

Alias = 'jsmith',

TimeZoneSidKey = 'America/New_York',

LocaleSidKey = 'en_US',

EmailEncodingKey = 'ISO-8859-1',

ProfileId = [SELECT Id FROM Profile WHERE Name ='Standard Platform User' LIMIT 1].Id,

LanguageLocaleKey = 'en_US'

));

Insert users;

- Run the Apex code to add the users

sfdx mohanc:tooling:execute -u mohan.chinnappan.n.sel@gmail.com -a useradd.cls
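The userApexgen.py script itself is not reproduced here. To give a rough idea of what such a generator does, here is a hypothetical Python sketch (the function names are illustrative, not the script's). It turns users.csv into Apex shaped like useradd.cls and escapes quotes, so names such as O'Brien do not break the generated code.

```python
import csv
import io

# User fields copied straight from the CSV, in the order useradd.cls lists them.
STRING_FIELDS = ["Username", "Email", "FirstName", "LastName", "Alias",
                 "TimeZoneSidKey", "LocaleSidKey", "EmailEncodingKey"]

def apex_str(value):
    """Quote text as an Apex string literal, escaping backslashes and quotes."""
    return "'" + value.replace("\\", "\\\\").replace("'", "\\'") + "'"

def users_csv_to_apex(csv_text):
    """Generate anonymous Apex that inserts one User per CSV row."""
    lines = ["List<User> users = new List<User>();", ""]
    for row in csv.DictReader(io.StringIO(csv_text)):
        lines.append("users.add( new User (")
        for field in STRING_FIELDS:
            lines.append(f"    {field} = {apex_str(row[field])},")
        # Look up the profile by name, as the generated code above does.
        lines.append("    ProfileId = [SELECT Id FROM Profile WHERE Name = "
                     f"{apex_str(row['ProfileName'])} LIMIT 1].Id,")
        lines.append(f"    LanguageLocaleKey = {apex_str(row['LanguageLocaleKey'])}")
        lines.append("));")
        lines.append("")
    lines.append("insert users;")
    return "\n".join(lines) + "\n"
```

Like the generated code above, this issues one Profile query per user. Apex allows 100 SOQL queries per synchronous transaction, so for larger files it would be safer to query the profiles once into a Map<String, Id>.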

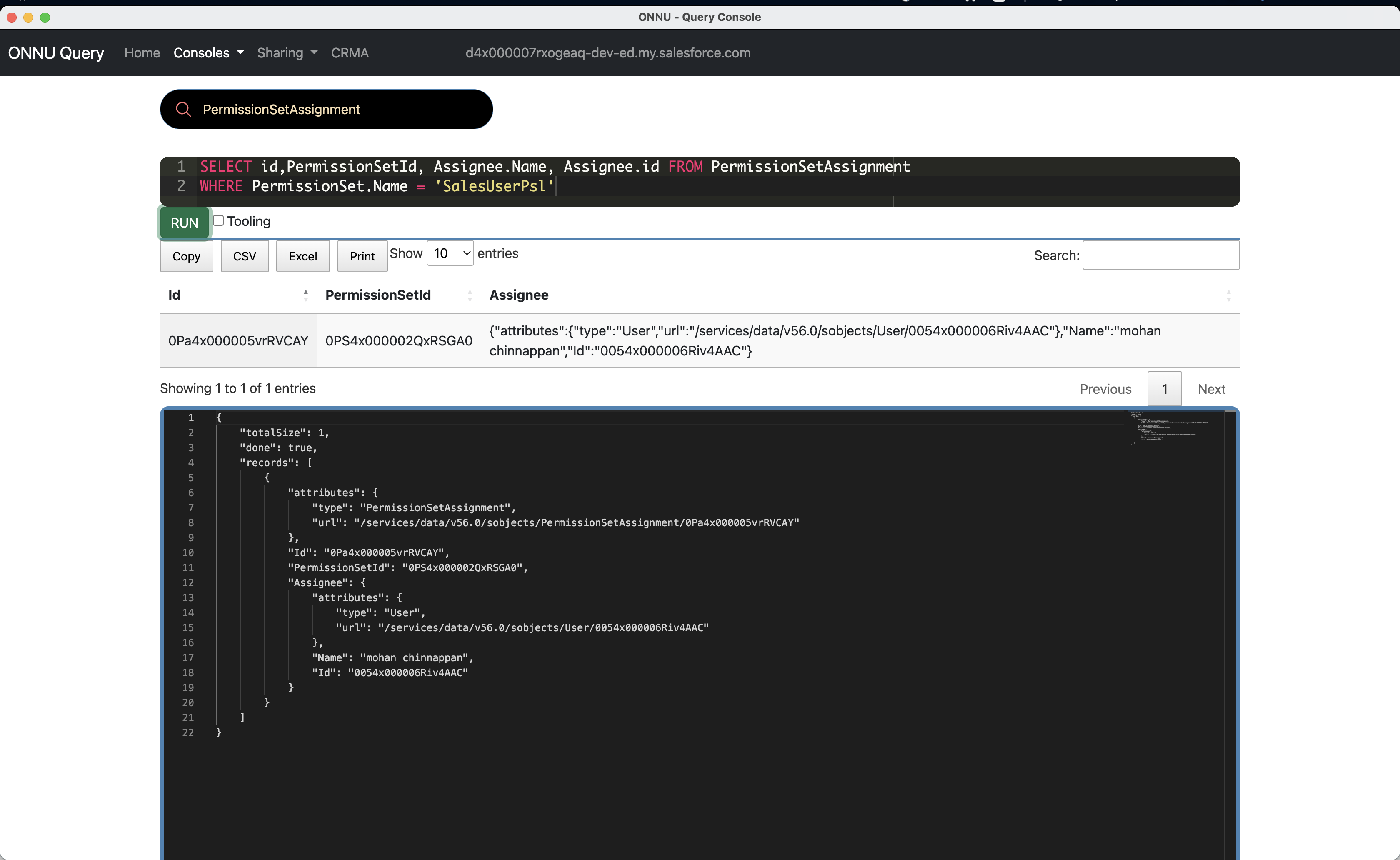

Mass assign permission sets to users

- Querying User, PermissionSet and PermissionSetAssignment

SELECT Id

,Username

FROM User

SELECT

Id

,Name

,NamespacePrefix

,Description

,HasActivationRequired

,IsCustom

,IsOwnedByProfile

,Label

,LicenseId

,PermissionSetGroupId

,ProfileId

,Type

FROM PermissionSet

SELECT

Id

,AssigneeId

,IsActive

,ExpirationDate

,PermissionSetId

,PermissionSetGroupId

FROM PermissionSetAssignment

- Users

Id,Username

0054x000007avznAAA,sel.dmr@unix.org.sel

0054x000007avdcAAA,sel.ken@unix.org.sel

- Permission sets to assign

Id,Name

0PS4x000002QxRNGA0,B2BBuyer

- Assignments

AssigneeId,PermissionSetId

0054x000007avznAAA,0PS4x000002QxRNGA0

0054x000007avdcAAA,0PS4x000002QxRNGA0
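The assignment file pairs every selected user with every selected permission set. Here is a minimal Python sketch, assuming Id,Username and Id,Name CSVs like the two above (build_assignments is a hypothetical helper). It generates the file and skips pairs that already exist in PermissionSetAssignment, because re-inserting an existing assignment would likely be rejected.

```python
import csv
import io

def build_assignments(users_csv, permsets_csv, existing=()):
    """Pair every user Id with every permission set Id as an insert file.

    existing holds (AssigneeId, PermissionSetId) pairs already assigned;
    they are skipped so the load does not try to create duplicates.
    """
    user_ids = [row["Id"] for row in csv.DictReader(io.StringIO(users_csv))]
    ps_ids = [row["Id"] for row in csv.DictReader(io.StringIO(permsets_csv))]
    skip = set(existing)
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(["AssigneeId", "PermissionSetId"])
    for user_id in user_ids:
        for ps_id in ps_ids:
            if (user_id, ps_id) not in skip:
                writer.writerow([user_id, ps_id])
    return out.getvalue()
```

Compare Ids in the same 18-character form the API returns. A 15-character Id copied from the UI will not match its 18-character equivalent.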

Data loader way

Product2 Loading and Updating

Topics

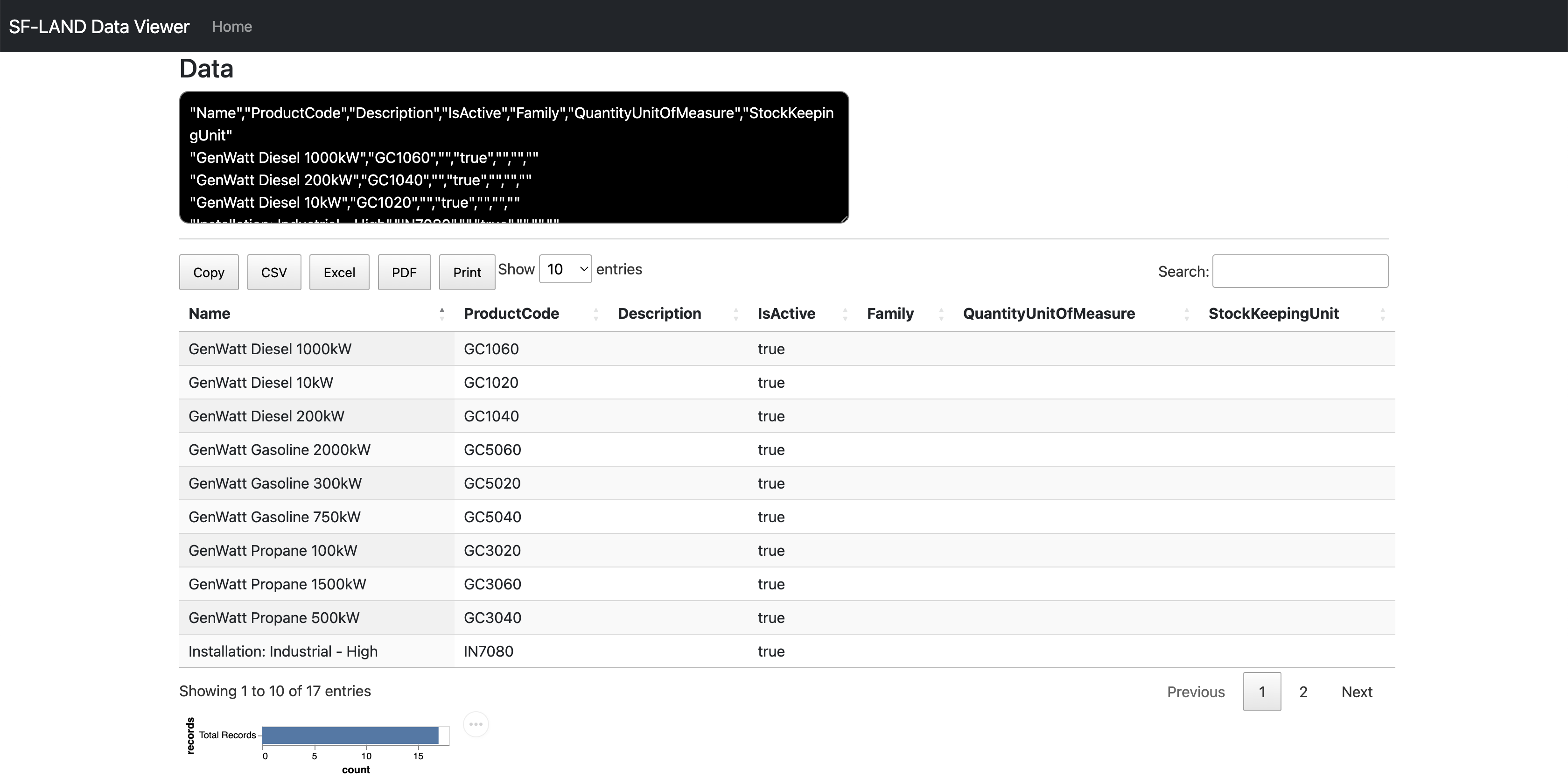

Loading

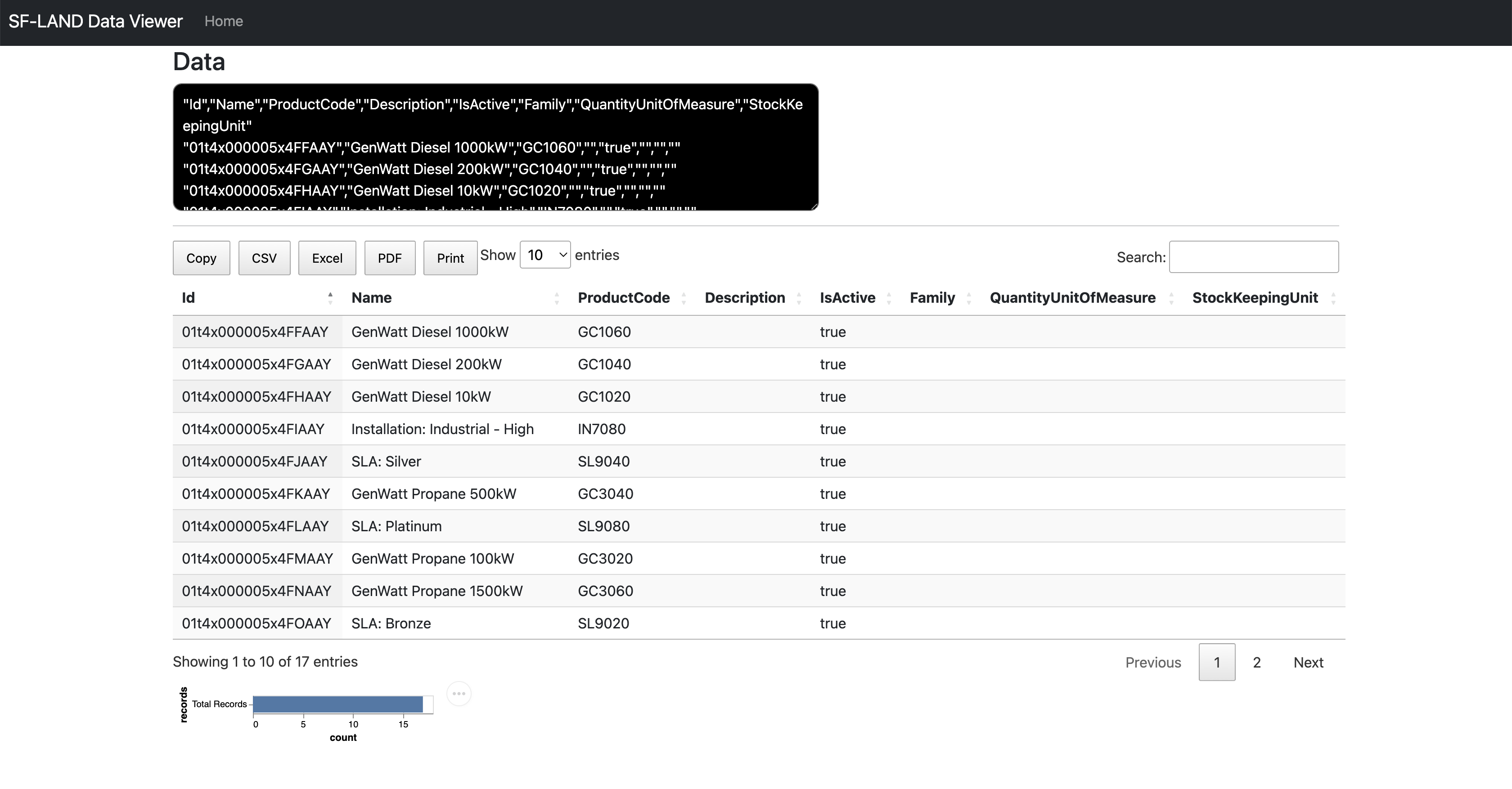

sfdx mohanc:data:bulkapi:query -u mohan.chinnappan.n.sel@gmail.com -q ~/.soql/product2.soql; pbcopy < ~/.soql/product2.soql.csv ; open "https://mohan-chinnappan-n5.github.io/viz/datatable/dt.html?c=csv"

SELECT Name, ProductCode, Description, IsActive, Family, QuantityUnitOfMeasure,StockKeepingUnit

FROM Product2

https://d4x000007rxogeaq-dev-ed.my.salesforce.com/services/data/v57.0/jobs/query

{

id: '7504x00000Xkf9FAAR',

operation: 'query',

object: 'Product2',

createdById: '0054x000006Riv4AAC',

createdDate: '2023-03-21T12:36:50.000+0000',

systemModstamp: '2023-03-21T12:36:50.000+0000',

state: 'UploadComplete',

concurrencyMode: 'Parallel',

contentType: 'CSV',

apiVersion: 57,

lineEnding: 'LF',

columnDelimiter: 'COMMA'

}

=== JOB STATUS ===

=== JOB STATUS for job: 7504x00000Xkf9FAAR ===

{

id: '7504x00000Xkf9FAAR',

operation: 'query',

object: 'Product2',

createdById: '0054x000006Riv4AAC',

createdDate: '2023-03-21T12:36:50.000+0000',

systemModstamp: '2023-03-21T12:36:50.000+0000',

state: 'InProgress',

concurrencyMode: 'Parallel',

contentType: 'CSV',

apiVersion: 57,

jobType: 'V2Query',

lineEnding: 'LF',

columnDelimiter: 'COMMA',

numberRecordsProcessed: 0,

retries: 0,

totalProcessingTime: 0

}

WAITING...

{

id: '7504x00000Xkf9FAAR',

operation: 'query',

object: 'Product2',

createdById: '0054x000006Riv4AAC',

createdDate: '2023-03-21T12:36:50.000+0000',

systemModstamp: '2023-03-21T12:36:51.000+0000',

state: 'JobComplete',

concurrencyMode: 'Parallel',

contentType: 'CSV',

apiVersion: 57,

jobType: 'V2Query',

lineEnding: 'LF',

columnDelimiter: 'COMMA',

numberRecordsProcessed: 17,

retries: 0,

totalProcessingTime: 208

}

==== Job State: JobComplete ====

=== Total time taken to process the job : 208 milliseconds ===

=== Total records processed : 17 ===

https://d4x000007rxogeaq-dev-ed.my.salesforce.com/services/data/v57.0/jobs/query/7504x00000Xkf9FAAR/results

==== Output CSV file written into : /Users/mchinnappan/.soql/product2.soql.csv ===

==== View the output file : /Users/mchinnappan/.soql/product2.soql.csv using:

cat /Users/mchinnappan/.soql/product2.soql.csv ===

=== JOB Failure STATUS ===

=== JOB Failure STATUS for job: 7504x00000Xkf9FAAR === "sf__Id","sf__Error","Id","ProductCode","Description","IsActive","Family","QuantityUnitOfMeasure","StockKeepingUnit"

===

"sf__Id","sf__Error","Id","ProductCode","Description","IsActive","Family","QuantityUnitOfMeasure","StockKeepingUnit"

Input file

cat /Users/mchinnappan/.soql/product2.soql2.csv

"Name","ProductCode","Description","IsActive","Family","QuantityUnitOfMeasure","StockKeepingUnit"

"Autopilot","MC123456","","true","","",""

Load

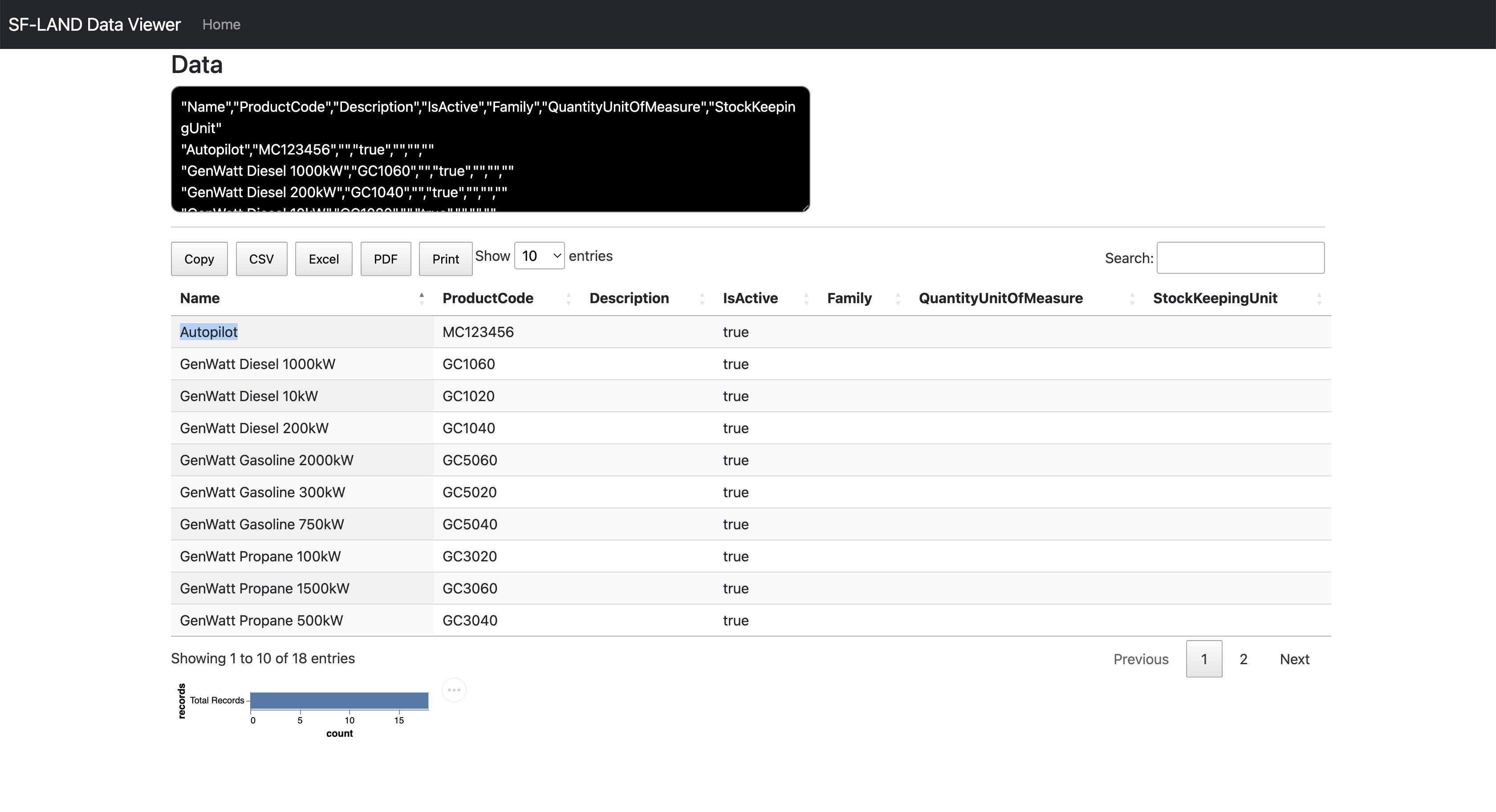

sfdx mohanc:data:bulkapi:load -u mohan.chinnappan.n.sel@gmail.com -f /Users/mchinnappan/.soql/product2.soql2.csv -e LF -o Product2

Query the results

sfdx mohanc:data:bulkapi:query -u mohan.chinnappan.n.sel@gmail.com -q ~/.soql/product2.soql; pbcopy < ~/.soql/product2.soql.csv ; open "https://mohan-chinnappan-n5.github.io/viz/datatable/dt.html?c=csv"

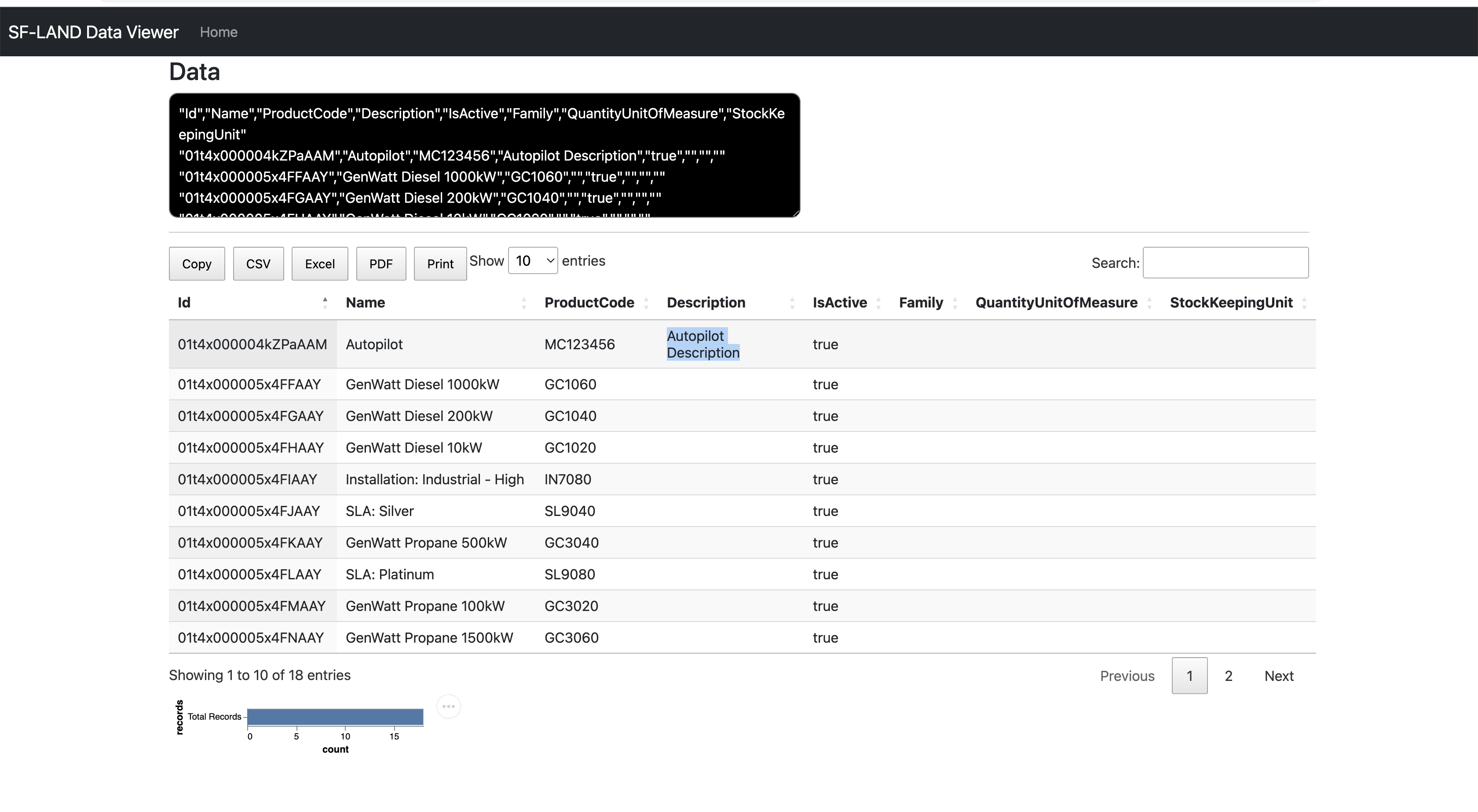
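The query logs in this section fetch their output from a single results URL. For large queries, Bulk API 2.0 splits the results into pages. Each response carries a Sforce-Locator header that names the next page, and on the last page that header is the string "null". Here is a minimal sketch, assuming an injected fetch_page(locator) callable that performs the GET and returns the body plus that header (the helper names are hypothetical, not the plugin's code). It stitches the pages into a single CSV such as product2.soql.csv.

```python
import csv
import io

def results_path(api_version, job_id, locator=None, max_records=None):
    """Build the path for one page of a Bulk API 2.0 query job's results."""
    path = f"/services/data/v{api_version}/jobs/query/{job_id}/results"
    params = []
    if locator:
        params.append(f"locator={locator}")
    if max_records:
        params.append(f"maxRecords={max_records}")
    return path + ("?" + "&".join(params) if params else "")

def collect_query_results(fetch_page):
    """Follow Sforce-Locator headers and merge every page into one CSV string.

    fetch_page(locator) must return (csv_text, sforce_locator_header).
    """
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n", quoting=csv.QUOTE_ALL)
    header_written = False
    locator = None
    while True:
        text, next_locator = fetch_page(locator)
        # csv.reader copes with quoted fields that contain commas or newlines.
        rows = list(csv.reader(io.StringIO(text)))
        if rows:
            if not header_written:
                writer.writerow(rows[0])
                header_written = True
            writer.writerows(rows[1:])  # each page repeats the header row
        if not next_locator or next_locator == "null":
            return out.getvalue()
        locator = next_locator
```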

Update example

cat ~/.soql/product2-id.soql.csv

"Id","Description"

"01t4x000004kZPaAAM","Autopilot Description"

- Update

sfdx mohanc:data:bulkapi:update -u mohan.chinnappan.n.sel@gmail.com -f /Users/mchinnappan/.soql/product2-id.soql.csv -e LF -o Product2

- Query

sfdx mohanc:data:bulkapi:query -u mohan.chinnappan.n.sel@gmail.com -q ~/.soql/product2-id.soql; pbcopy < ~/.soql/product2-id.soql.csv ; open "https://mohan-chinnappan-n5.github.io/viz/datatable/dt.html?c=csv"

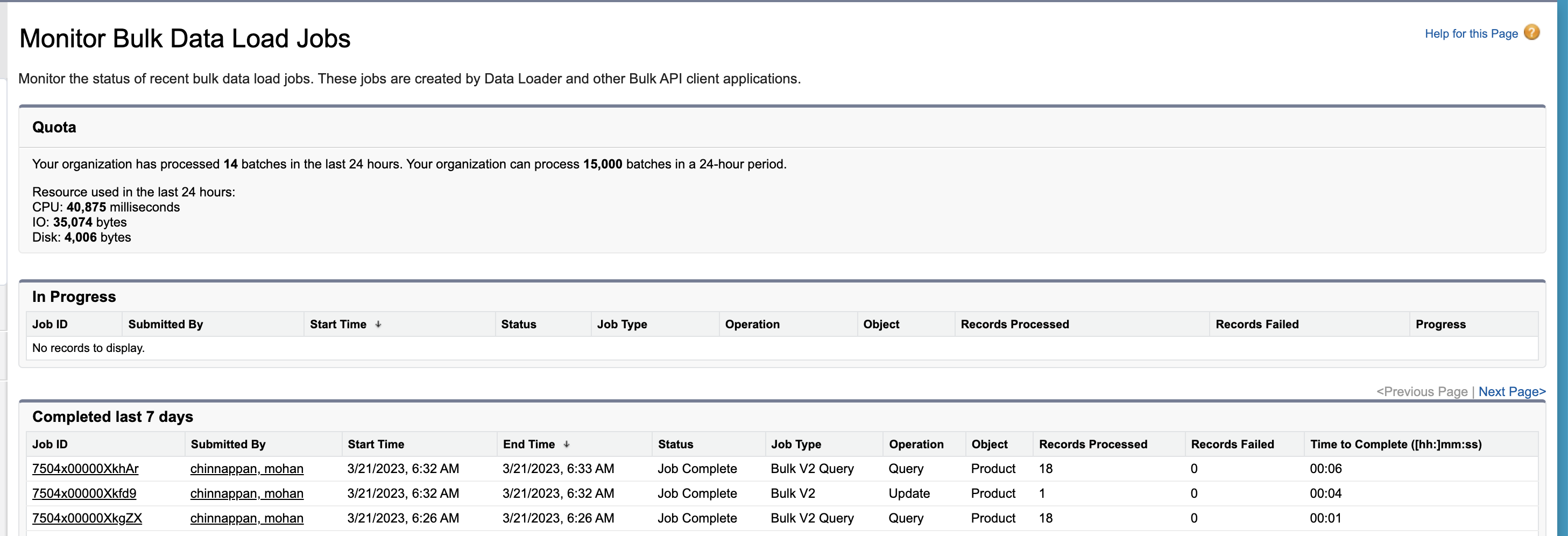

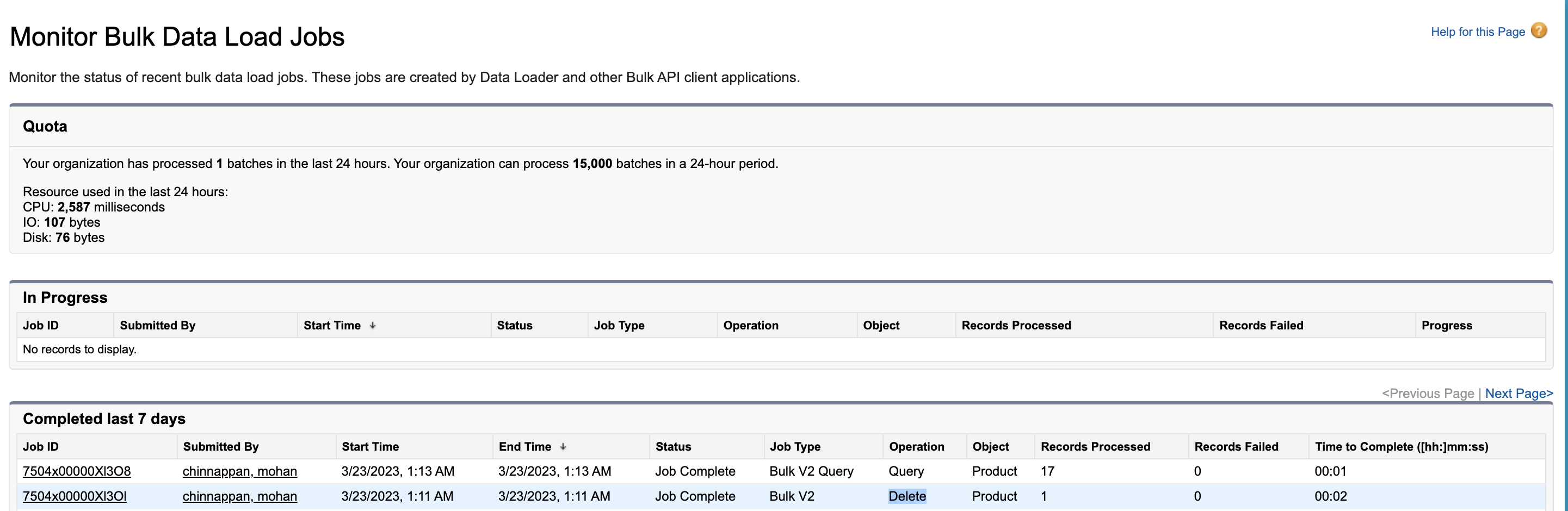

Bulk data load status

Delete

sfdx mohanc:data:bulkapi:delete -u mohan.chinnappan.n.sel@gmail.com -f /Users/mchinnappan/.soql/product2-id.del.csv -e LF -o Product2

/Users/mchinnappan/.soql/product2-id.del.csv Product2 LF

=== CREATE JOB ===

{

id: '7504x00000Xl3OlAAJ',

operation: 'delete',

object: 'Product2',

createdById: '0054x000006Riv4AAC',

createdDate: '2023-03-23T08:11:55.000+0000',

systemModstamp: '2023-03-23T08:11:55.000+0000',

state: 'Open',

concurrencyMode: 'Parallel',

contentType: 'CSV',

apiVersion: 57,

contentUrl: 'services/data/v57.0/jobs/ingest/7504x00000Xl3OlAAJ/batches',

lineEnding: 'LF',

columnDelimiter: 'COMMA'

}

jobId: 7504x00000Xl3OlAAJ

=== JOB STATUS ===

=== JOB STATUS for job: 7504x00000Xl3OlAAJ ===

{

id: '7504x00000Xl3OlAAJ',

operation: 'delete',

object: 'Product2',

createdById: '0054x000006Riv4AAC',

createdDate: '2023-03-23T08:11:55.000+0000',

systemModstamp: '2023-03-23T08:11:55.000+0000',

state: 'Open',

concurrencyMode: 'Parallel',

contentType: 'CSV',

apiVersion: 57,

jobType: 'V2Ingest',

contentUrl: 'services/data/v57.0/jobs/ingest/7504x00000Xl3OlAAJ/batches',

lineEnding: 'LF',

columnDelimiter: 'COMMA',

retries: 0,

totalProcessingTime: 0,

apiActiveProcessingTime: 0,

apexProcessingTime: 0

}

=== PUT DATA === SIZE: == 26 MB ==

result: status: 201, statusText: Created

=== JOB STATUS ===

=== JOB STATUS for job: 7504x00000Xl3OlAAJ ===

{

id: '7504x00000Xl3OlAAJ',

operation: 'delete',

object: 'Product2',

createdById: '0054x000006Riv4AAC',

createdDate: '2023-03-23T08:11:55.000+0000',

systemModstamp: '2023-03-23T08:11:55.000+0000',

state: 'Open',

concurrencyMode: 'Parallel',

contentType: 'CSV',

apiVersion: 57,

jobType: 'V2Ingest',

contentUrl: 'services/data/v57.0/jobs/ingest/7504x00000Xl3OlAAJ/batches',

lineEnding: 'LF',

columnDelimiter: 'COMMA',

numberRecordsProcessed: 0,

numberRecordsFailed: 0,

retries: 0,

totalProcessingTime: 0,

apiActiveProcessingTime: 0,

apexProcessingTime: 0

}

=== PATCH STATE ===

{

id: '7504x00000Xl3OlAAJ',

operation: 'delete',

object: 'Product2',

createdById: '0054x000006Riv4AAC',

createdDate: '2023-03-23T08:11:55.000+0000',

systemModstamp: '2023-03-23T08:11:55.000+0000',

state: 'UploadComplete',

concurrencyMode: 'Parallel',

contentType: 'CSV',

apiVersion: 57

}

=== JOB STATUS ===

=== JOB STATUS for job: 7504x00000Xl3OlAAJ ===

jobStatus {

id: '7504x00000Xl3OlAAJ',

operation: 'delete',

object: 'Product2',

createdById: '0054x000006Riv4AAC',

createdDate: '2023-03-23T08:11:55.000+0000',

systemModstamp: '2023-03-23T08:11:57.000+0000',

state: 'InProgress',

concurrencyMode: 'Parallel',

contentType: 'CSV',

apiVersion: 57,

jobType: 'V2Ingest',

lineEnding: 'LF',

columnDelimiter: 'COMMA',

numberRecordsProcessed: 0,

numberRecordsFailed: 0,

retries: 0,

totalProcessingTime: 0,

apiActiveProcessingTime: 0,

apexProcessingTime: 0

}

WAITING...

{

id: '7504x00000Xl3OlAAJ',

operation: 'delete',

object: 'Product2',

createdById: '0054x000006Riv4AAC',

createdDate: '2023-03-23T08:11:55.000+0000',

systemModstamp: '2023-03-23T08:11:58.000+0000',

state: 'JobComplete',

concurrencyMode: 'Parallel',

contentType: 'CSV',

apiVersion: 57,

jobType: 'V2Ingest',

lineEnding: 'LF',

columnDelimiter: 'COMMA',

numberRecordsProcessed: 1,

numberRecordsFailed: 0,

retries: 0,

totalProcessingTime: 363,

apiActiveProcessingTime: 289,

apexProcessingTime: 0

}

=== JOB Failure STATUS ===

=== JOB Failure STATUS for job: 7504x00000Xl3OlAAJ ===

"sf__Id","sf__Error","Id"

=== JOB getUnprocessedRecords STATUS ===

=== JOB getUnprocessedRecords STATUS for job: 7504x00000Xl3OlAAJ ===

"Id"

Query After delete

sfdx mohanc:data:bulkapi:query -u mohan.chinnappan.n.sel@gmail.com -q ~/.soql/product2-id.soql; pbcopy < ~/.soql/product2-id.soql.csv ; open "https://mohan-chinnappan-n5.github.io/viz/datatable/dt.html?c=csv"

SELECT Id,Name,ProductCode, Description, IsActive, Family, QuantityUnitOfMeasure,StockKeepingUnit

FROM Product2

https://d4x000007rxogeaq-dev-ed.my.salesforce.com/services/data/v57.0/jobs/query

{

id: '7504x00000Xl3O8AAJ',

operation: 'query',

object: 'Product2',

createdById: '0054x000006Riv4AAC',

createdDate: '2023-03-23T08:13:04.000+0000',

systemModstamp: '2023-03-23T08:13:04.000+0000',

state: 'UploadComplete',

concurrencyMode: 'Parallel',

contentType: 'CSV',

apiVersion: 57,

lineEnding: 'LF',

columnDelimiter: 'COMMA'

}

=== JOB STATUS ===

=== JOB STATUS for job: 7504x00000Xl3O8AAJ ===

{

id: '7504x00000Xl3O8AAJ',

operation: 'query',

object: 'Product2',

createdById: '0054x000006Riv4AAC',

createdDate: '2023-03-23T08:13:04.000+0000',

systemModstamp: '2023-03-23T08:13:04.000+0000',

state: 'InProgress',

concurrencyMode: 'Parallel',

contentType: 'CSV',

apiVersion: 57,

jobType: 'V2Query',

lineEnding: 'LF',

columnDelimiter: 'COMMA',

numberRecordsProcessed: 0,

retries: 0,

totalProcessingTime: 0

}

WAITING...

{

id: '7504x00000Xl3O8AAJ',

operation: 'query',

object: 'Product2',

createdById: '0054x000006Riv4AAC',

createdDate: '2023-03-23T08:13:04.000+0000',

systemModstamp: '2023-03-23T08:13:05.000+0000',

state: 'JobComplete',

concurrencyMode: 'Parallel',

contentType: 'CSV',

apiVersion: 57,

jobType: 'V2Query',

lineEnding: 'LF',

columnDelimiter: 'COMMA',

numberRecordsProcessed: 17,

retries: 0,

totalProcessingTime: 220

}

==== Job State: JobComplete ====

=== Total time taken to process the job : 220 milliseconds ===

=== Total records processed : 17 ===

https://d4x000007rxogeaq-dev-ed.my.salesforce.com/services/data/v57.0/jobs/query/7504x00000Xl3O8AAJ/results

==== Output CSV file written into : /Users/mchinnappan/.soql/product2-id.soql.csv ===

==== View the output file : /Users/mchinnappan/.soql/product2-id.soql.csv using:

cat /Users/mchinnappan/.soql/product2-id.soql.csv ===

=== JOB Failure STATUS ===

=== JOB Failure STATUS for job: 7504x00000Xl3O8AAJ === "sf__Id","sf__Error","Id","Name","ProductCode","Description","IsActive","Family","QuantityUnitOfMeasure","StockKeepingUnit"

===

"sf__Id","sf__Error","Id","Name","ProductCode","Description","IsActive","Family","QuantityUnitOfMeasure","StockKeepingUnit"

FlowInterview Query and Deletion using CLI (BulkAPI2.0)

Query

- Note:

- The username used in the examples is mohan.chinnappan.n.sel@gmail.com. Replace it with your own username after authenticating with:

# For PROD and DE

sfdx force:auth:web:login -r https://login.salesforce.com

# For Sandboxes

sfdx force:auth:web:login -r https://test.salesforce.com

Query for the FlowInterview records with Error InterviewStatus

SELECT Id, CurrentElement, FlowVersionViewId,Guid,InterviewLabel,

InterviewStatus,Name,OwnerId,PauseLabel,

WasPausedFromScreen

FROM FlowInterview

WHERE InterviewStatus ='Error'

- Store the above SOQL in a file, say flowInterview.soql

- Execute the query

sfdx mohanc:data:bulkapi:query -u mohan.chinnappan.n.sel@gmail.com -q flowInterview.soql

- This creates a CSV output in flowInterview.soql.csv. Check these records before deleting them.

- If you have decided to delete these records, run the following query:

SELECT Id

FROM FlowInterview

WHERE InterviewStatus ='Error'

- Store the above SOQL in a file, say flowInterviewIds.soql

- Execute the query

sfdx mohanc:data:bulkapi:query -u mohan.chinnappan.n.sel@gmail.com -q flowInterviewIds.soql

Deletion

sfdx mohanc:data:bulkapi:delete -u mohan.chinnappan.n.sel@gmail.com -f flowInterviewIds.soql.csv -e LF -o FlowInterview

- This deletes all the FlowInterview records whose Ids are in flowInterviewIds.soql.csv
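Instead of running a second query, you could also derive the delete file from flowInterview.soql.csv, which already includes Id. Here is a minimal sketch, assuming the query output is a CSV with an Id header like the files above (ids_only is a hypothetical helper). Building the file from the output you reviewed means you delete exactly the rows you checked, not whatever matches the query when it reruns.

```python
import csv
import io

def ids_only(query_csv):
    """Keep just the Id column of a query result, for use as a delete file."""
    reader = csv.DictReader(io.StringIO(query_csv))
    if "Id" not in (reader.fieldnames or []):
        raise ValueError("query result has no Id column")
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n", quoting=csv.QUOTE_ALL)
    writer.writerow(["Id"])
    for row in reader:
        writer.writerow([row["Id"]])
    return out.getvalue()
```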

References

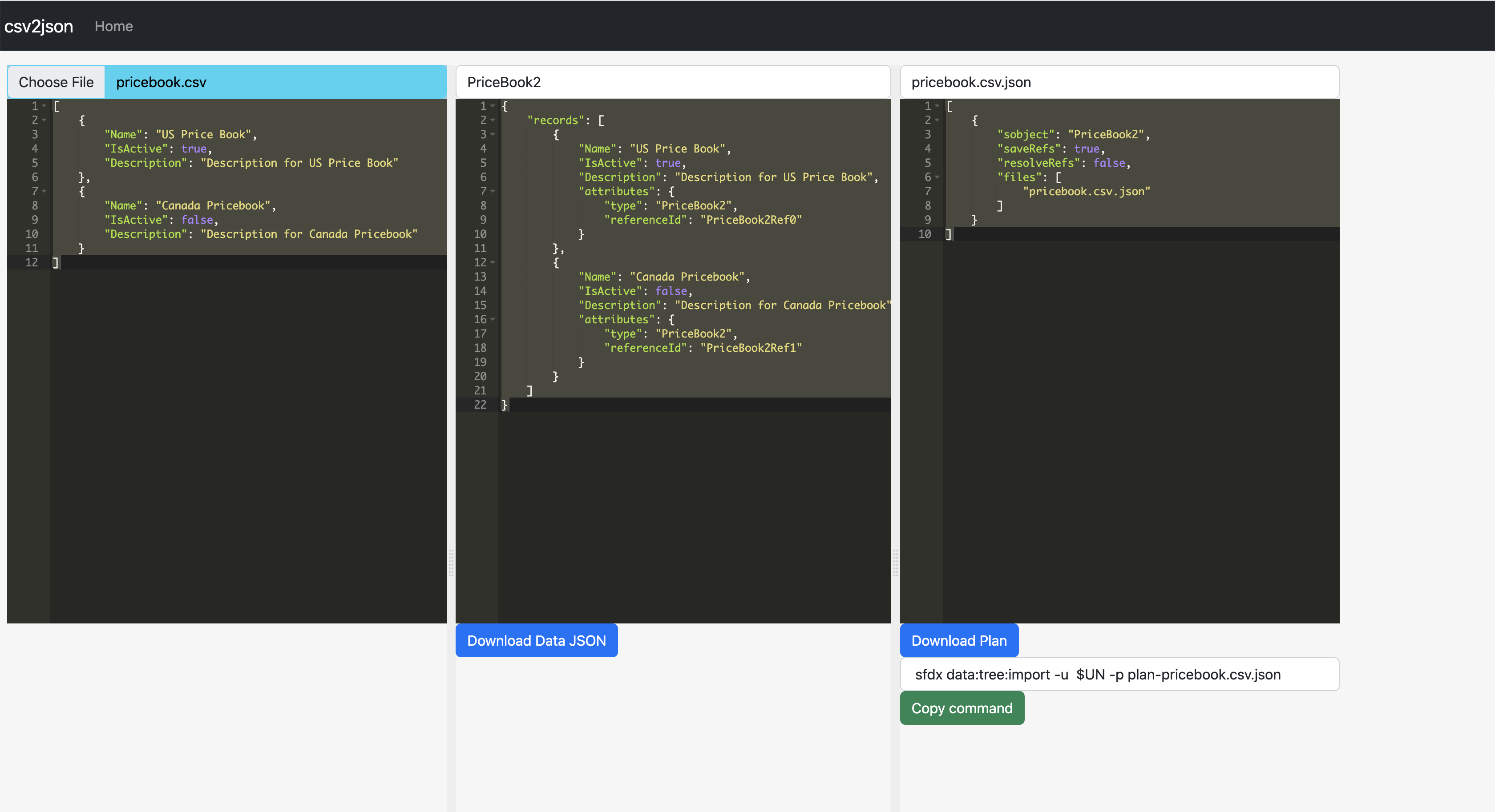

Prepare and Load Data for data:tree:import

Convert the given CSV to data:tree:import JSON and plan file

Using csv2json app

- Pass the URL parameter 'so' to set the SObject to load the data into

Demo

Sample files

- pricebook.csv

"Name","IsActive","Description"

"US Price Book","true","Description for US Price Book"

"Canada Pricebook","false","Description for Canada Pricebook"

- pricebook.csv.json

{

"records": [

{

"Name": "US Price Book",

"IsActive": true,

"Description": "Description for US Price Book",

"attributes": {

"type": "PriceBook2",

"referenceId": "PriceBook2Ref0"

}

},

{

"Name": "Canada Pricebook",

"IsActive": false,

"Description": "Description for Canada Pricebook",

"attributes": {

"type": "PriceBook2",

"referenceId": "PriceBook2Ref1"

}

}

]

}

- plan-pricebook.csv.json

[

{

"sobject": "PriceBook2",

"saveRefs": true,

"resolveRefs": false,

"files": [

"pricebook.csv.json"

]

}

]

Using the CSV to SF JSON and Plan file script

python csv2sf_json.py --csv-file input.csv --sobject=Account

Conversion Done.

Import data into your org with: sfdx data:tree:import -u $USERNAME -p input.csv.json-plan.json
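Neither csv2json nor csv2sf_json.py is reproduced here. Below is a minimal Python sketch of the same conversion, assuming a flat CSV for a single sObject with no references between records (csv_to_tree is a hypothetical helper). It produces a records file and a plan shaped like pricebook.csv.json and plan-pricebook.csv.json.

```python
import csv
import io
import json

def convert_value(text):
    """Turn 'true'/'false' into JSON booleans; keep other values as strings."""
    lowered = text.strip().lower()
    if lowered in ("true", "false"):
        return lowered == "true"
    return text

def csv_to_tree(csv_text, sobject, data_file_name):
    """Return (records_json, plan_json) for sfdx data:tree:import."""
    records = []
    for i, row in enumerate(csv.DictReader(io.StringIO(csv_text))):
        record = {field: convert_value(value or "") for field, value in row.items()}
        # referenceId must be unique within the import: <SObject>Ref0, Ref1, ...
        record["attributes"] = {"type": sobject, "referenceId": f"{sobject}Ref{i}"}
        records.append(record)
    plan = [{"sobject": sobject, "saveRefs": True, "resolveRefs": False,
             "files": [data_file_name]}]
    return json.dumps({"records": records}, indent=2), json.dumps(plan, indent=2)
```

In this sketch, numbers stay strings. Extend convert_value if your fields need numeric JSON values.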

Loading State and Country Picklist

- This tool exists to help customers because you can’t use the Metadata API to create or delete states, countries, or territories.

- This app fills that gap by creating new states, countries, or territories.

- This app uses Chrome's new Recorder feature.

Topics

Demo

Where can I find this app?

Using SF-LAND Data Viz to get data for all countries

- I prepared this data by scraping Wikipedia; feel free to use it.

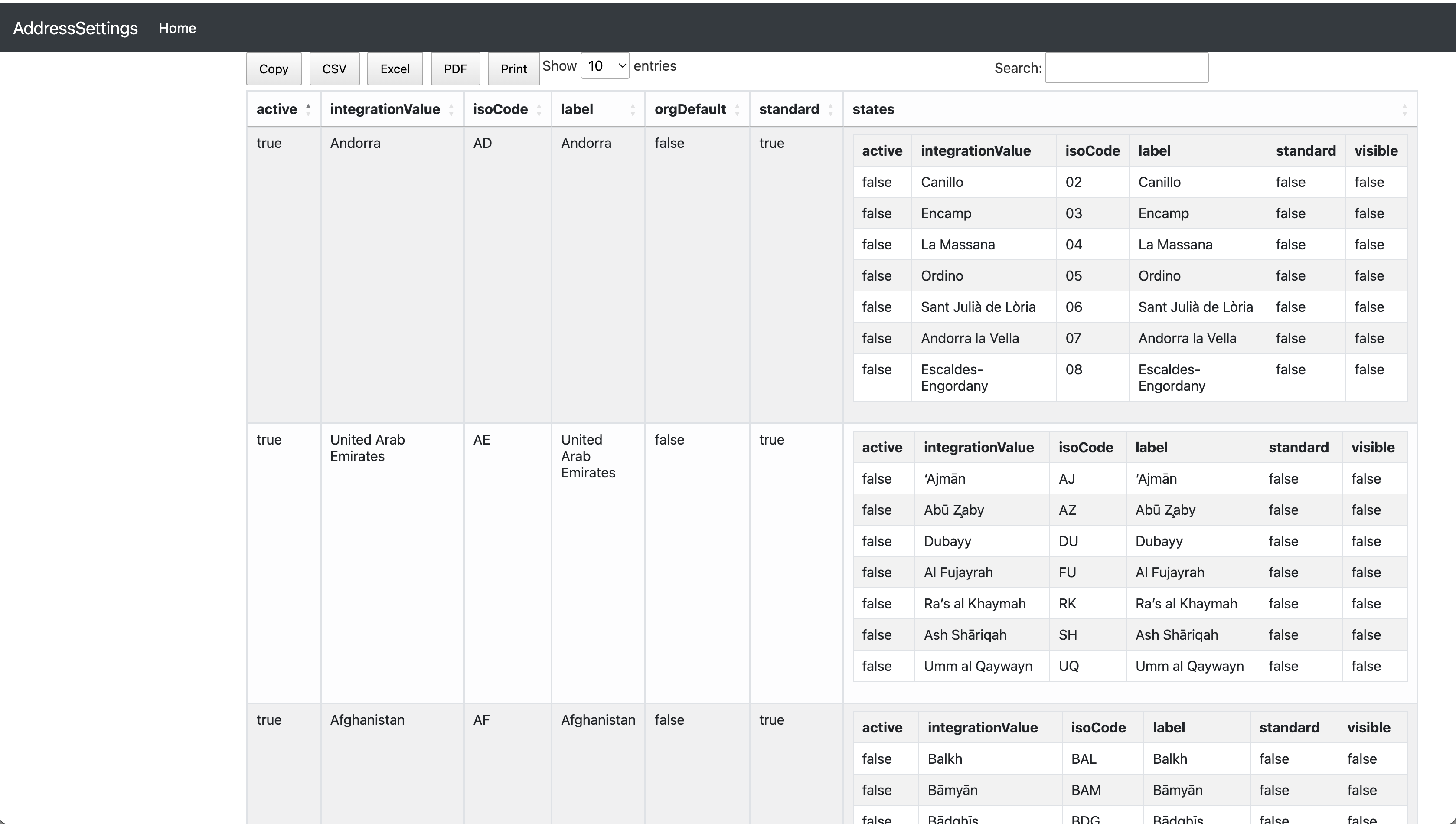

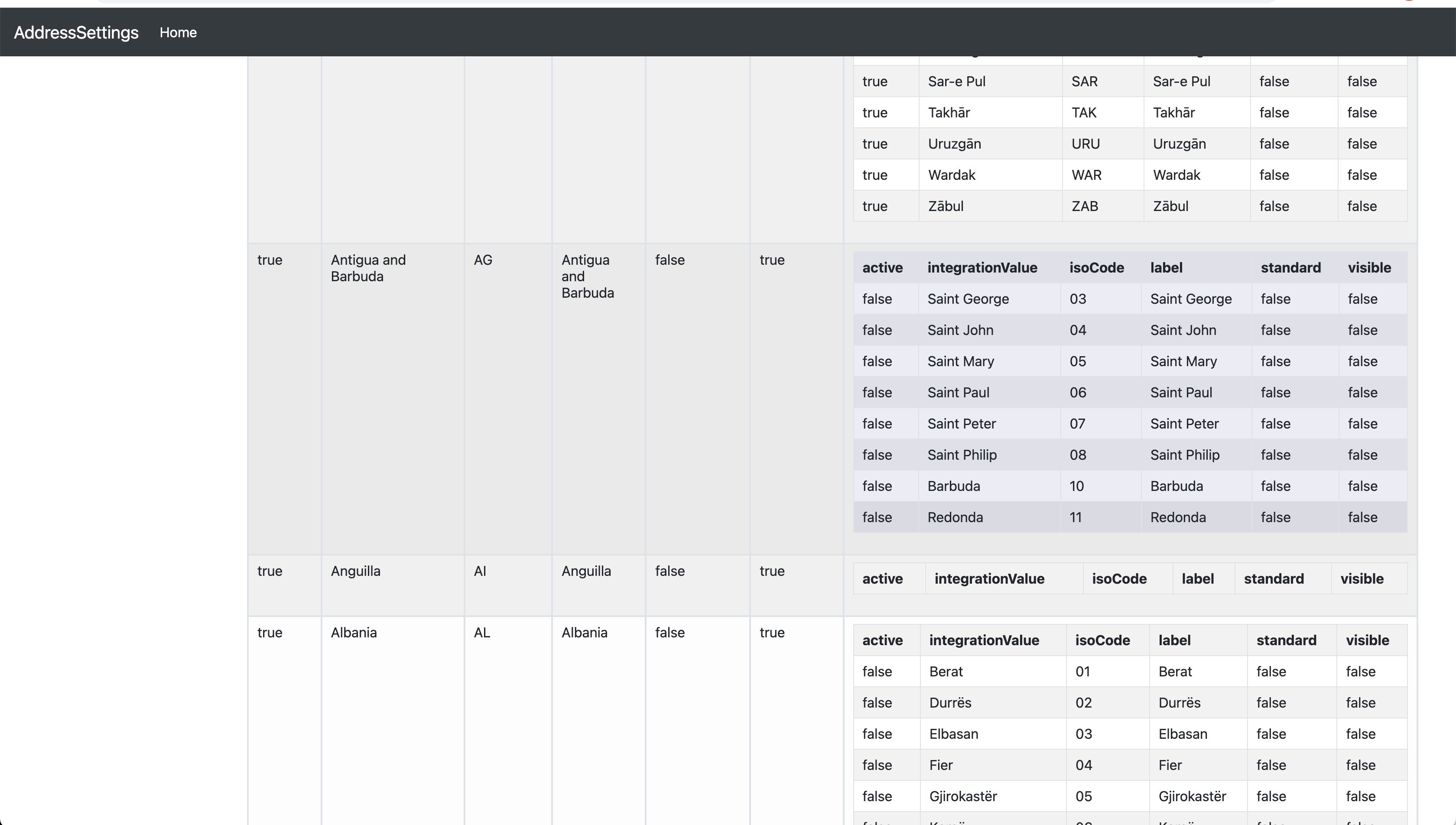

Visualizing State and Country - AddressSettings

- package.xml to retrieve the data

<?xml version="1.0" encoding="UTF-8"?>
<Package xmlns="http://soap.sforce.com/2006/04/metadata">
    <types>
        <members>Address</members>
        <name>Settings</name>
    </types>
    <version>56.0</version>
</Package>

- Transform to get the visualization

xmlutil transform --xsl=addresssettings --xml=Address.settings --out=/tmp/address.html
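If you only need a quick text listing instead of the HTML view, you can also read the countries straight from the retrieved file. Below is a minimal sketch, assuming the usual AddressSettings layout: countriesAndStates > countries, with isoCode, label, active and visible children in the Metadata API namespace. list_countries is a hypothetical helper.

```python
import xml.etree.ElementTree as ET

NS = {"md": "http://soap.sforce.com/2006/04/metadata"}

def _text(elem, tag, default=""):
    """Text of a namespaced child element, or default if it is absent."""
    return elem.findtext(f"md:{tag}", default=default, namespaces=NS)

def list_countries(settings_xml):
    """Return (isoCode, label, active, visible) for every country in the file."""
    root = ET.fromstring(settings_xml)
    countries = []
    for country in root.findall("md:countriesAndStates/md:countries", NS):
        countries.append((_text(country, "isoCode"), _text(country, "label"),
                          _text(country, "active", "false") == "true",
                          _text(country, "visible", "false") == "true"))
    return countries
```

For example, read the retrieved Address.settings file in binary mode and pass the bytes to list_countries to see which countries are active.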

References

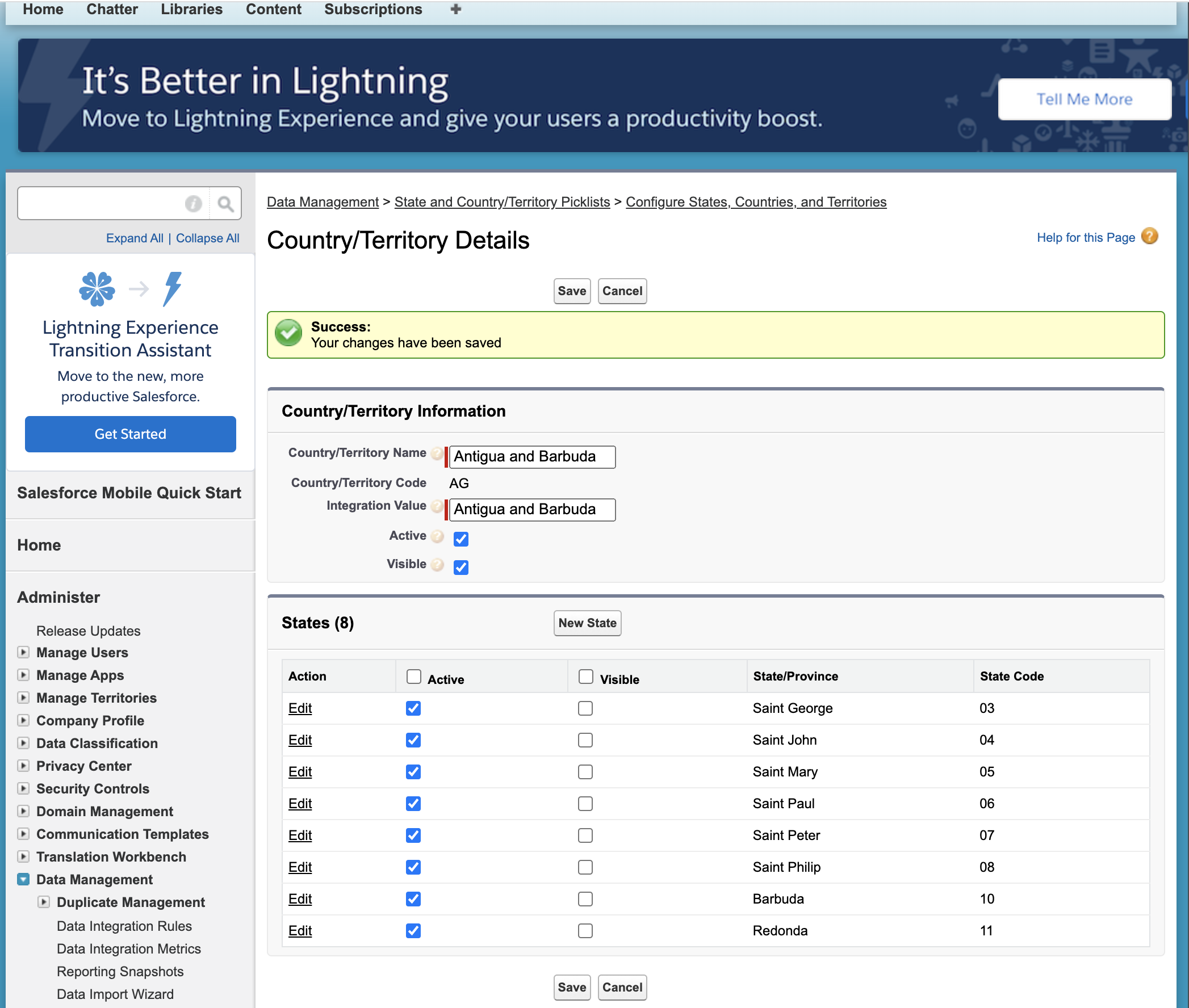

Updating State and Country Picklist

- You can use the Metadata API to edit existing states, countries, and territories in state and country/territory picklists. You can’t use the Metadata API to create or delete states, countries, or territories.

- Once you have loaded the State and Country Picklist, you can update it, for example by selectively activating countries for your use case.

- The options described here explain how to activate a particular country or countries.

Topics

- Option-1 Activating using Recorder application

- Option-2 Using ONNU Chrome Extension

- Option-3 Using CLI mdapi

- Option-4 retrieve into SFDX project using source:retrieve

Option-1 Activating using Recorder application

Before

Replaying the recording

After

Option-2 Using ONNU Chrome Extension

Option-3 Using CLI mdapi

Retrieve

sfdx force:mdapi:retrieve -k unpackaged/package.xml -u mohan.chinnappan.n.sel@gmail.com -r .

Retrieving v56.0 metadata from mohan.chinnappan.n.sel@gmail.com using the v57.0 SOAP API
Retrieve ID: 09S4x00000F0DGMEA3
Retrieving metadata from mohan.chinnappan.n.sel@gmail.com... done
Wrote retrieve zip to /Users/mchinnappan/Downloads/updates/unpackaged.zip

jar tvf unpackaged.zip

569563 Mon Mar 27 23:41:02 EDT 2023 unpackaged/settings/Address.settings
   226 Mon Mar 27 23:41:02 EDT 2023 unpackaged/package.xml

- Edit Address.settings as described in Option-2 (the ONNU option) to update the active tags, then package the result as the zip file updatedSCPL.zip
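The edit step can also be scripted. Below is a minimal sketch, assuming the retrieved file follows the usual AddressSettings layout, in which every country already has an <active> element. activate_countries is a hypothetical helper. It only flips active and leaves visible unchanged.

```python
import xml.etree.ElementTree as ET

MD_NS = "http://soap.sforce.com/2006/04/metadata"

def activate_countries(settings_xml, iso_codes):
    """Set <active>true</active> on the listed countries; return UTF-8 XML bytes."""
    # Serialize the Metadata API namespace as the default (unprefixed) namespace.
    ET.register_namespace("", MD_NS)
    root = ET.fromstring(settings_xml)
    wanted = set(iso_codes)
    found = set()
    # Only <countries> elements; nested <states> also carry isoCode but are skipped.
    for country in root.iter(f"{{{MD_NS}}}countries"):
        iso = country.findtext(f"{{{MD_NS}}}isoCode")
        if iso not in wanted:
            continue
        active = country.find(f"{{{MD_NS}}}active")
        if active is None:
            raise ValueError(f"country {iso} has no <active> element")
        active.text = "true"
        found.add(iso)
    missing = wanted - found
    if missing:
        raise ValueError("ISO codes not found: " + ", ".join(sorted(missing)))
    return ET.tostring(root, encoding="UTF-8", xml_declaration=True)
```

Write the returned bytes back to unpackaged/settings/Address.settings, then zip the folder, for example with zip -r updatedSCPL.zip unpackaged.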

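Deploy the edited Address.settings file. The -c (--checkonly) flag in the command below only validates the deployment without saving it to the org; omit it to apply the change.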

sfdx force:mdapi:deploy -u mohan.chinnappan.n.sel@gmail.com -f updatedSCPL.zip -c --verbose --loglevel TRACE -w 1000

Deploying metadata to mohan.chinnappan.n.sel@gmail.com using the v57.0 SOAP API
Deploy ID: 0Af4x00000YsXnoCAF
DEPLOY PROGRESS | ████████████████████████████████████████ | 1/1 Components

=== Deployed Source

Type             File                                  Name         Id
───────────────  ────────────────────────────────────  ───────────  ──
                 unpackaged/package.xml                package.xml
AddressSettings  unpackaged/settings/Address.settings  Address

Option-4 retrieve into SFDX project using source:retrieve

cat package.xml

<?xml version="1.0" encoding="UTF-8"?>
<Package xmlns="http://soap.sforce.com/2006/04/metadata">
    <types>
        <members>Address</members>
        <name>Settings</name>
    </types>
    <version>56.0</version>
</Package>

sfdx force:source:retrieve -u mohan.chinnappan.n.sel@gmail.com -x package.xml

Retrieving v56.0 metadata from mohan.chinnappan.n.sel@gmail.com using the v57.0 SOAP API
Preparing retrieve request... done

=== Retrieved Source

FULL NAME  TYPE      PROJECT PATH
─────────  ────────  ─────────────────────────────────────────────────────────
Address    Settings  force-app/main/default/settings/Address.settings-meta.xml

- Now you can commit this into your version control and edit Address.settings-meta.xml in your editor:

vi force-app/main/default/settings/Address.settings-meta.xml

Deploy the updated Address.settings-meta.xml into the org:

sfdx force:source:deploy -u mohan.chinnappan.n.sel@gmail.com -x package.xml

Deploying v56.0 metadata to mohan.chinnappan.n.sel@gmail.com using the v57.0 SOAP API
Deploy ID: 0Af4x00000YsZyUCAV
DEPLOY PROGRESS | ████████████████████████████████████████ | 1/1 Components

=== Deployed Source

FULL NAME  TYPE      PROJECT PATH
─────────  ────────  ─────────────────────────────────────────────────────────
Address    Settings  force-app/main/default/settings/Address.settings-meta.xml

Deploy Succeeded.

Project Management

Topics

Semantic Doc Compare and Group

Project Estimates

| Estimate | Comments |
|---|---|
| Planning estimates | A rough order of magnitude (ROM) estimate. ROM is an approximation strategy that helps project managers present important financial estimates to clients and consumers. It helps determine the feasibility of a project and is important for the decision-making process. |
| Backlog estimates | Provide data on the amount of work that isn't complete: work that has started but is not yet finished. |
| Timebox estimates | Show how long it might take to complete a project; an important part of Agile and Lean software development. They involve subdividing a project into smaller chunks of work and estimating each chunk separately. |
| Order-of-magnitude estimates | Help estimate the cost and effort to complete a project; they help you determine how big something is without knowing the exact value. |
| Feasibility estimates | Useful when determining whether a project or task is doable and how much it may cost. |
| Detailed estimates | Include several line items or categories of work for a project. You can use them to estimate the time and resources necessary to complete a project. |
| Analogous estimates | Use experience to predict the time and cost required for a project. For example, if you've completed similar projects in the past and know how long each one took, you can use this information to estimate future projects. |
| Top-down estimates | Start with a high-level project scope, which you break down into smaller pieces. |

References

PERT - Program Evaluation and Review Technique

- A statistical tool used in project management, designed to analyze and represent the tasks involved in completing a given project.

- First developed by the United States Navy in 1958, it is commonly used in conjunction with the critical path method (CPM), which was introduced in 1957.
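PERT is commonly paired with three-point estimates. For each task you estimate an optimistic (O), a most likely (M), and a pessimistic (P) duration. The expected duration is then (O + 4M + P) / 6 and the variance is ((P - O) / 6)². Combined with CPM, the project's expected length is the longest chain of dependent tasks.

The sketch below computes both under these standard PERT assumptions. The task names and durations are hypothetical, and the code is an illustration rather than a full scheduling tool.

```python
from dataclasses import dataclass, field
from math import sqrt


@dataclass
class Task:
    name: str
    optimistic: float    # O
    most_likely: float   # M
    pessimistic: float   # P
    depends_on: list = field(default_factory=list)

    @property
    def expected(self) -> float:
        # PERT expected duration: (O + 4M + P) / 6
        return (self.optimistic + 4 * self.most_likely + self.pessimistic) / 6

    @property
    def variance(self) -> float:
        # PERT variance: ((P - O) / 6) ** 2
        return ((self.pessimistic - self.optimistic) / 6) ** 2


def critical_path(tasks):
    """Return (task names on the critical path, expected duration, std deviation).

    Assumes `tasks` is ordered so every dependency is listed before its dependents.
    """
    by_name = {t.name: t for t in tasks}
    finish = {}  # earliest expected finish time of each task
    via = {}     # predecessor that determines each task's earliest start
    for t in tasks:
        start, prev = 0.0, None
        for dep in t.depends_on:
            if prev is None or finish[dep] > start:
                start, prev = finish[dep], dep
        finish[t.name] = start + t.expected
        via[t.name] = prev
    # The project ends when the latest-finishing task ends; walk back from it.
    node = max(finish, key=finish.get)
    total = finish[node]
    path = []
    while node is not None:
        path.append(node)
        node = via[node]
    path.reverse()
    # Standard PERT simplification: task durations are treated as independent,
    # so the variances along the critical path add up.
    return path, total, sqrt(sum(by_name[n].variance for n in path))


if __name__ == "__main__":
    # Hypothetical tasks, durations in days
    tasks = [
        Task("Design", 2, 4, 6),
        Task("Build", 5, 8, 17, ["Design"]),
        Task("Config", 1, 2, 3, ["Design"]),
        Task("Test", 2, 3, 10, ["Build", "Config"]),
    ]
    path, total, sd = critical_path(tasks)
    print(" -> ".join(path), f"{total:.1f} days, sd {sd:.2f}")
```

With these sample tasks the script prints `Design -> Build -> Test 17.0 days, sd 2.49`. "Config" is not on the critical path because it finishes well before "Build".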

Event Monitoring

Viewing and Visualizing Event Log files using DX

Topics

Listing the Event Types

- Requires version 0.0.115 of the plugin

- sfdx-mohanc-plugins@0.0.115

- How to install the plugin

Usage

USAGE
  $ sfdx mohanc:monitoring:em:types

OPTIONS
  -u, --targetusername=targetusername              username or alias for the target org; overrides default target org
  -v, --targetdevhubusername=targetdevhubusername  username or alias for the dev hub org; overrides default dev hub org
  --apiversion=apiversion                          override the api version used for api requests made by this command
  --json                                           format output as json
  --loglevel=(trace|debug|info|warn|error|fatal)   logging level for this command invocation

EXAMPLE
  ** Show Event Types **
  sfdx mohanc:monitoring:em:types -u <userName>

Demo

$ sfdx mohanc:monitoring:em:types -u mohan.chinnappan.n_ea2@gmail.com
API
ApexExecution
ApexTrigger
BulkApi
ContentTransfer
DocumentAttachmentDownloads
LightningInteraction
LightningPageView
LightningPerformance
Login
Logout
RestApi
URI
WaveChange
WaveInteraction
WavePerformance

Get the Event Files for a Given Event Type

Usage

$ sfdx mohanc:monitoring:em:get -h
Event Files for the given Event Type

USAGE
  $ sfdx mohanc:monitoring:em:get

OPTIONS
  -e, --enddate=enddate                            End DateTime for the logs in the format YYYY-MM-DDThh:mm:ssZ,, example: 2012-12-30:23:00:00Z
  -o, --eventfileout=eventfileout                  Output CSV file name to write the events into
  -s, --startdate=startdate                        Start DateTime for the logs in the format YYYY-MM-DDThh:mm:ssZ, example: 2012-12-30T00:10:00Z
  -t, --eventtype=eventtype                        Event Type for which Event File is requested
  -u, --targetusername=targetusername              username or alias for the target org; overrides default target org
  -v, --targetdevhubusername=targetdevhubusername  username or alias for the dev hub org; overrides default dev hub org
  --apiversion=apiversion                          override the api version used for api requests made by this command
  --json                                           format output as json
  --loglevel=(trace|debug|info|warn|error|fatal)   logging level for this command invocation

EXAMPLE
  ** Event Files for the given Event Type **
  sfdx mohanc:monitoring:em:get -u <userName> -t <eventType> -o <outFileName> -s <startDateTime> -e <endDateTime>

Demo

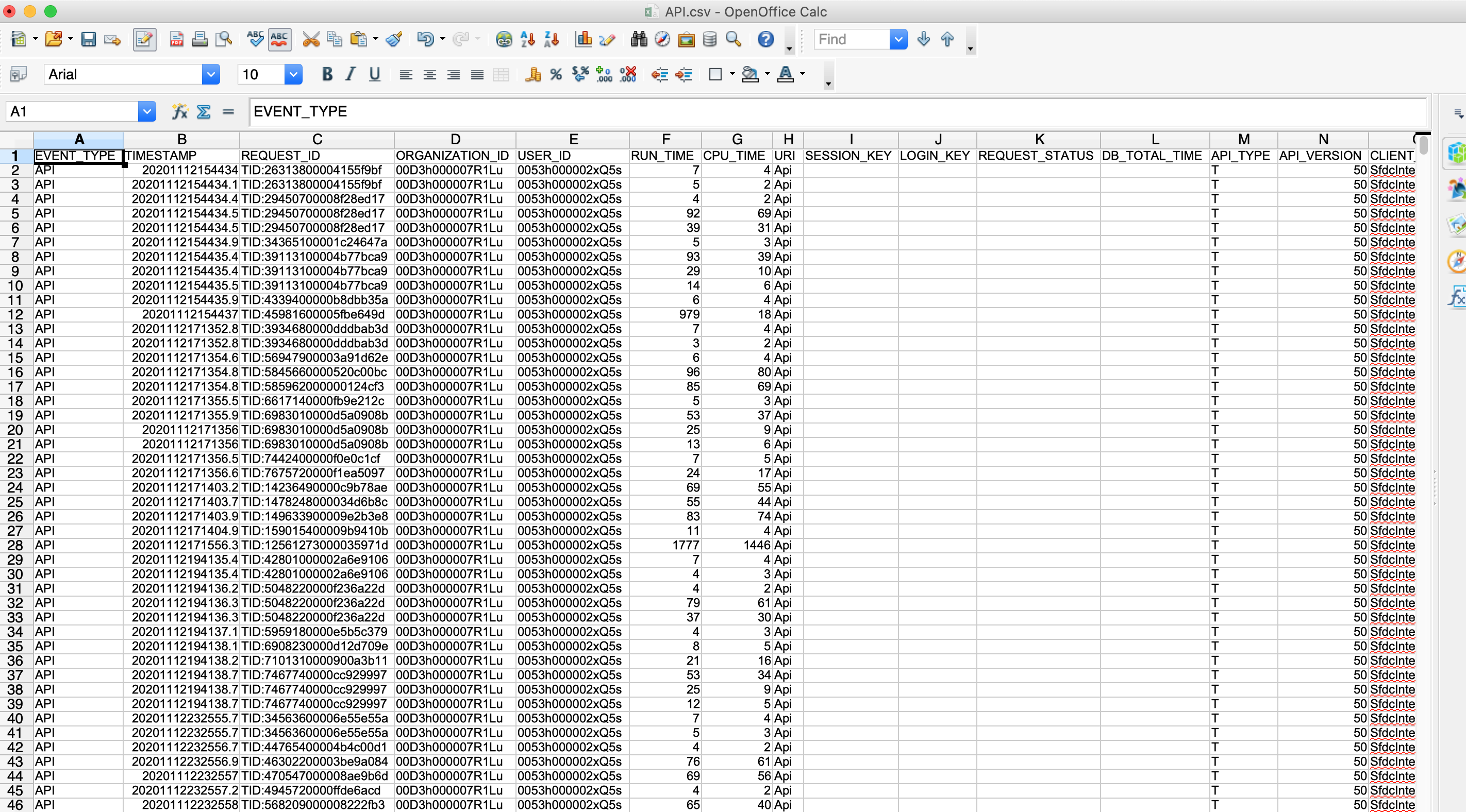

$ sfdx mohanc:monitoring:em:get -t API -u mohan.chinnappan.n_ea2@gmail.com -o API2d.csv -s 2020-11-10T00:01:00Z -e 2020-11-13T00:02:00Z
=== Deleting old output file API2d.csv ...
=== Collecting logs for the EventType: API ...
=== Getting log file for LogDate: 2020-11-11T00:00:00.000+0000 of Size: 15113, Total: 15113 Bytes ...
=== Getting log file for LogDate: 2020-11-10T00:00:00.000+0000 of Size: 5590, Total: 20703 Bytes ...
=== Getting log file for LogDate: 2020-11-08T00:00:00.000+0000 of Size: 16036, Total: 36739 Bytes ...
=== Getting log file for LogDate: 2020-11-09T00:00:00.000+0000 of Size: 4159, Total: 40898 Bytes ...
Total Bytes written: 40898
=== Opening API2d.csv ...

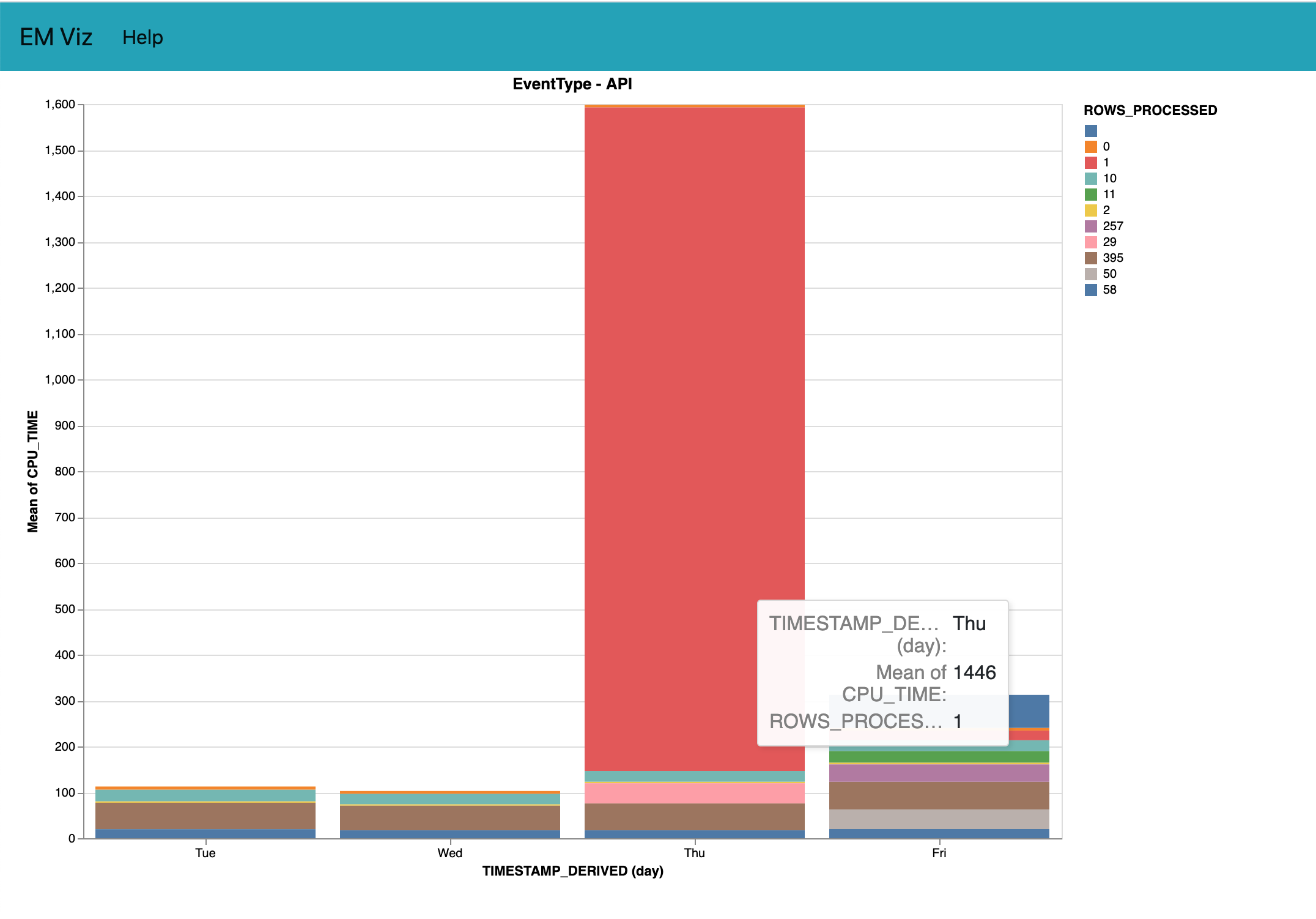

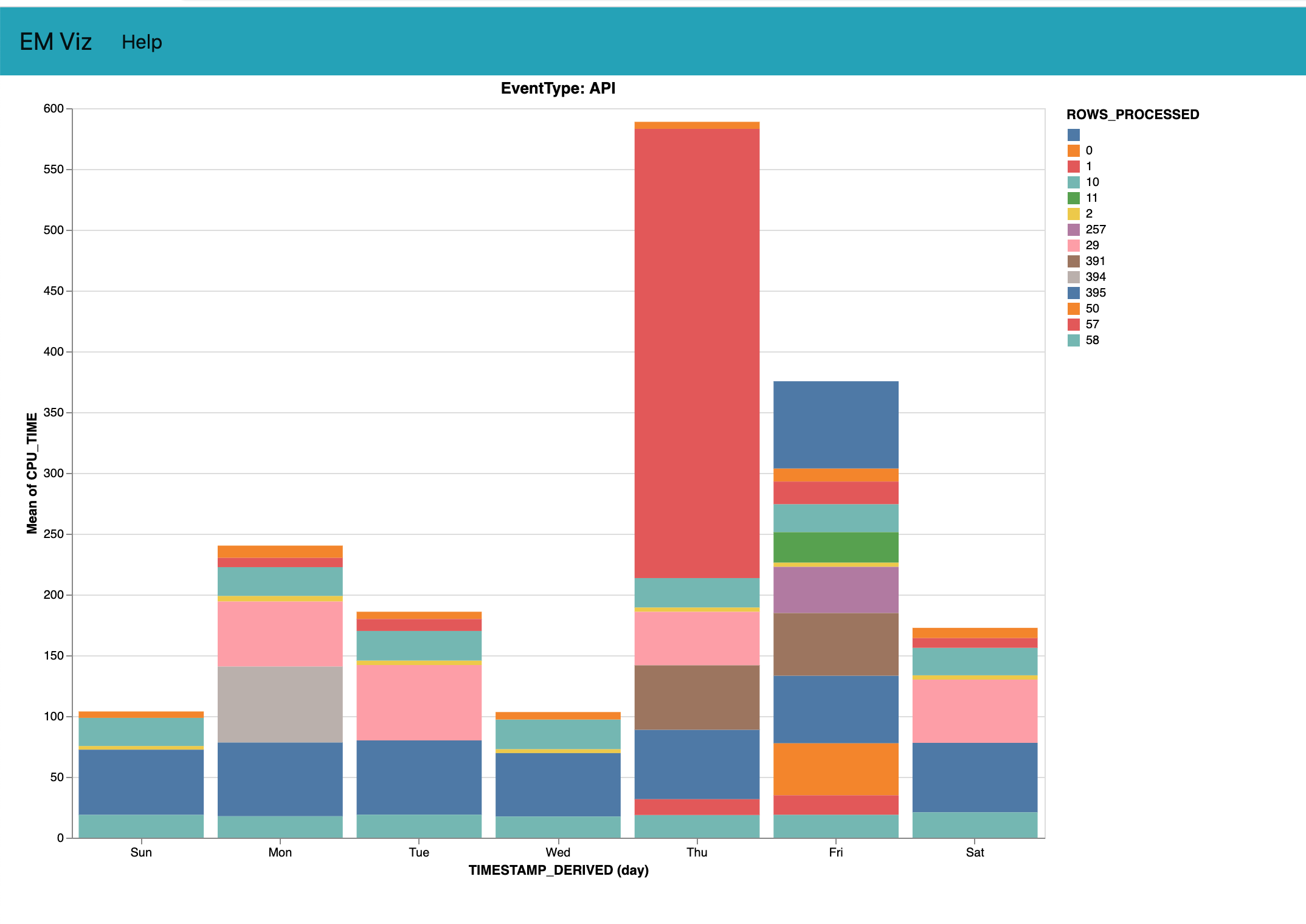
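Event log files are stored in Salesforce as EventLogFile records, and the CSV content of each file is read from its LogFile field. The plugin's actual query is not shown, so the following is an independent sketch and not the plugin's code. It validates the YYYY-MM-DDThh:mm:ssZ format from the help text, builds a SOQL query for one event type and date range, and builds the REST path used to download a single file. The default API version (57.0) and the record ID in the usage comment are placeholders.

```python
import re
from datetime import datetime

# Format documented in the plugin's help text: YYYY-MM-DDThh:mm:ssZ
DATE_FMT = "%Y-%m-%dT%H:%M:%SZ"


def build_event_log_query(event_type: str, start: str, end: str) -> str:
    """Build a SOQL query for EventLogFile records in [start, end]."""
    # Allow only plain identifiers such as "API" or "ApexExecution" (avoids SOQL injection)
    if not re.fullmatch(r"[A-Za-z0-9]+", event_type):
        raise ValueError(f"unexpected event type: {event_type!r}")
    # strptime raises ValueError on malformed timestamps
    if datetime.strptime(start, DATE_FMT) > datetime.strptime(end, DATE_FMT):
        raise ValueError("start must not be after end")
    # SOQL dateTime literals are written without quotes
    return (
        "SELECT Id, EventType, LogDate, LogFileLength FROM EventLogFile "
        f"WHERE EventType = '{event_type}' "
        f"AND LogDate >= {start} AND LogDate <= {end} "
        "ORDER BY LogDate"
    )


def log_file_path(record_id: str, api_version: str = "57.0") -> str:
    """REST path that returns the CSV body of one EventLogFile record."""
    return f"/services/data/v{api_version}/sobjects/EventLogFile/{record_id}/LogFile"


# Example usage (hypothetical record ID):
# print(build_event_log_query("API", "2020-11-10T00:01:00Z", "2020-11-13T00:02:00Z"))
# print(log_file_path("0AT000000000001"))
```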

Charts

$ sfdx mohanc:data:viz:barChart -d ./API2d.csv -e ./API.csv.encoding-2.json -m bar -o ./APIbarchart.html -p ./apiChart.json
=== Opening ./APIbarchart.html via Local Web Server on port :7020 ...

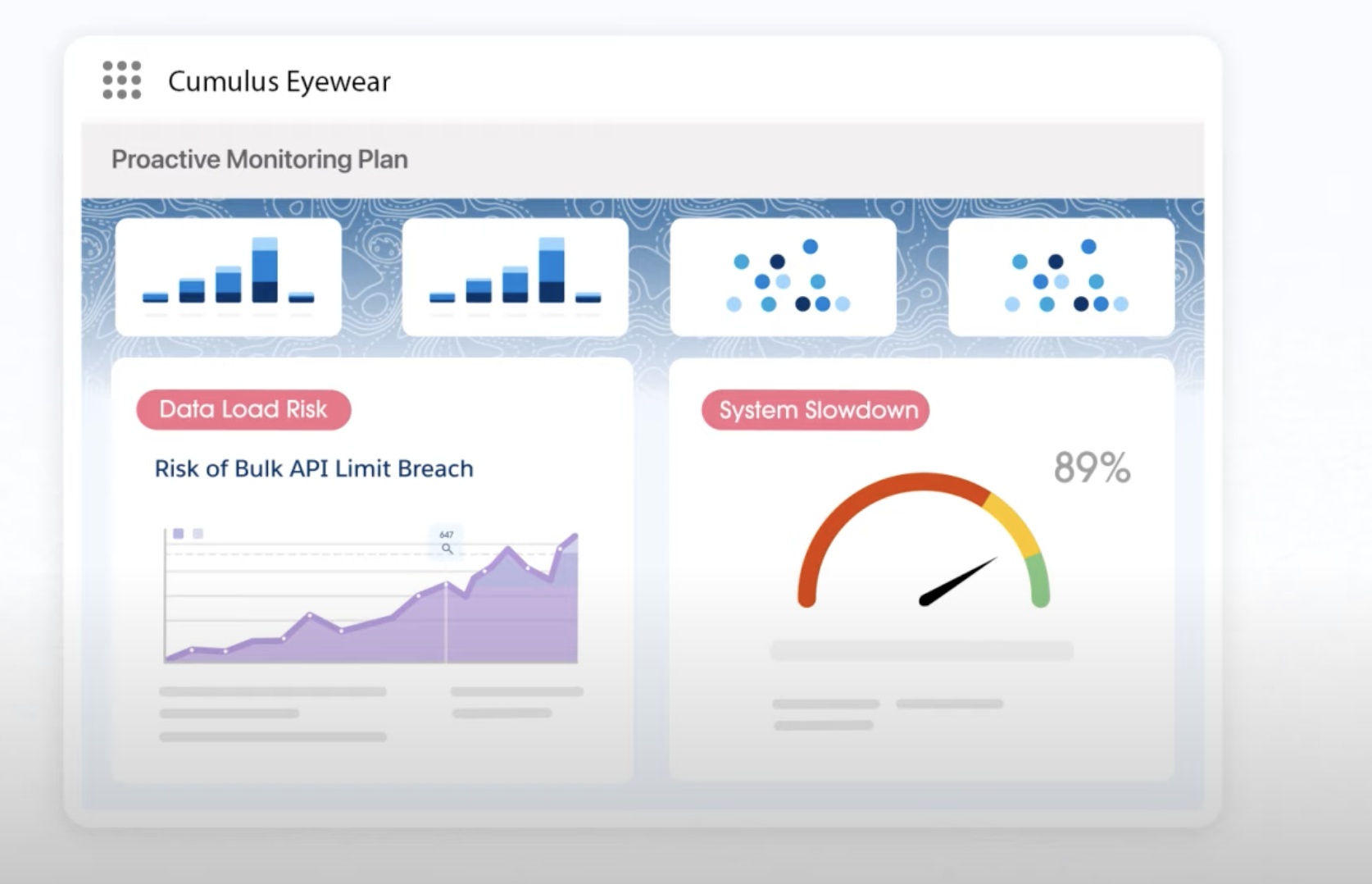
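The chart is driven by the plugin's encoding file, whose format is not covered here. If you want to check the numbers behind such a chart, or summarize the downloaded CSV without the plugin, a few lines of Python are enough.

This is a minimal sketch under two assumptions. First, the file has a header row. Second, the column you group by exists; USER_ID is only an assumed default, so check the header of your own file, since columns vary by event type. Skipping repeated header rows is a defensive assumption, in case several daily files end up concatenated into one CSV.

```python
import csv
from collections import Counter
from typing import Iterable


def count_by(lines: Iterable[str], column: str = "USER_ID") -> Counter:
    """Count CSV rows per distinct value of `column`.

    `lines` can be a file opened with newline="" or any iterable of CSV lines.
    """
    reader = csv.DictReader(lines)
    if reader.fieldnames is None or column not in reader.fieldnames:
        raise KeyError(f"column {column!r} not found in header")
    counts: Counter = Counter()
    for row in reader:
        value = row[column]
        # Defensive: skip header rows repeated mid-file (assumed possible)
        if value == column:
            continue
        counts[value] += 1
    return counts


# Example usage with the file from the demo above:
# with open("API2d.csv", newline="") as f:
#     for user_id, n in count_by(f).most_common(10):
#         print(user_id, n)
```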

Proactive Monitoring

- Part of Signature Success Plan

Signature Success Plan

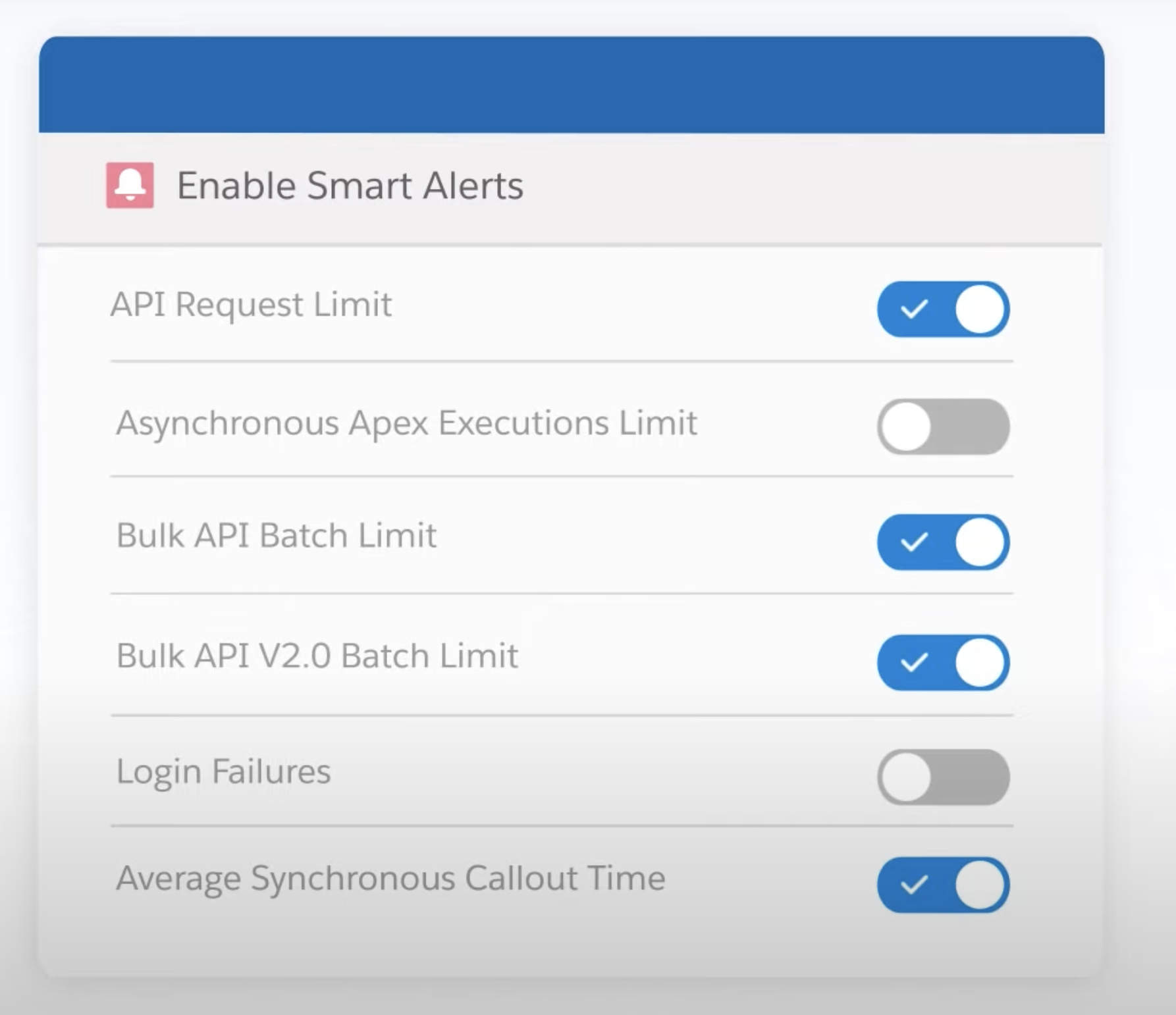

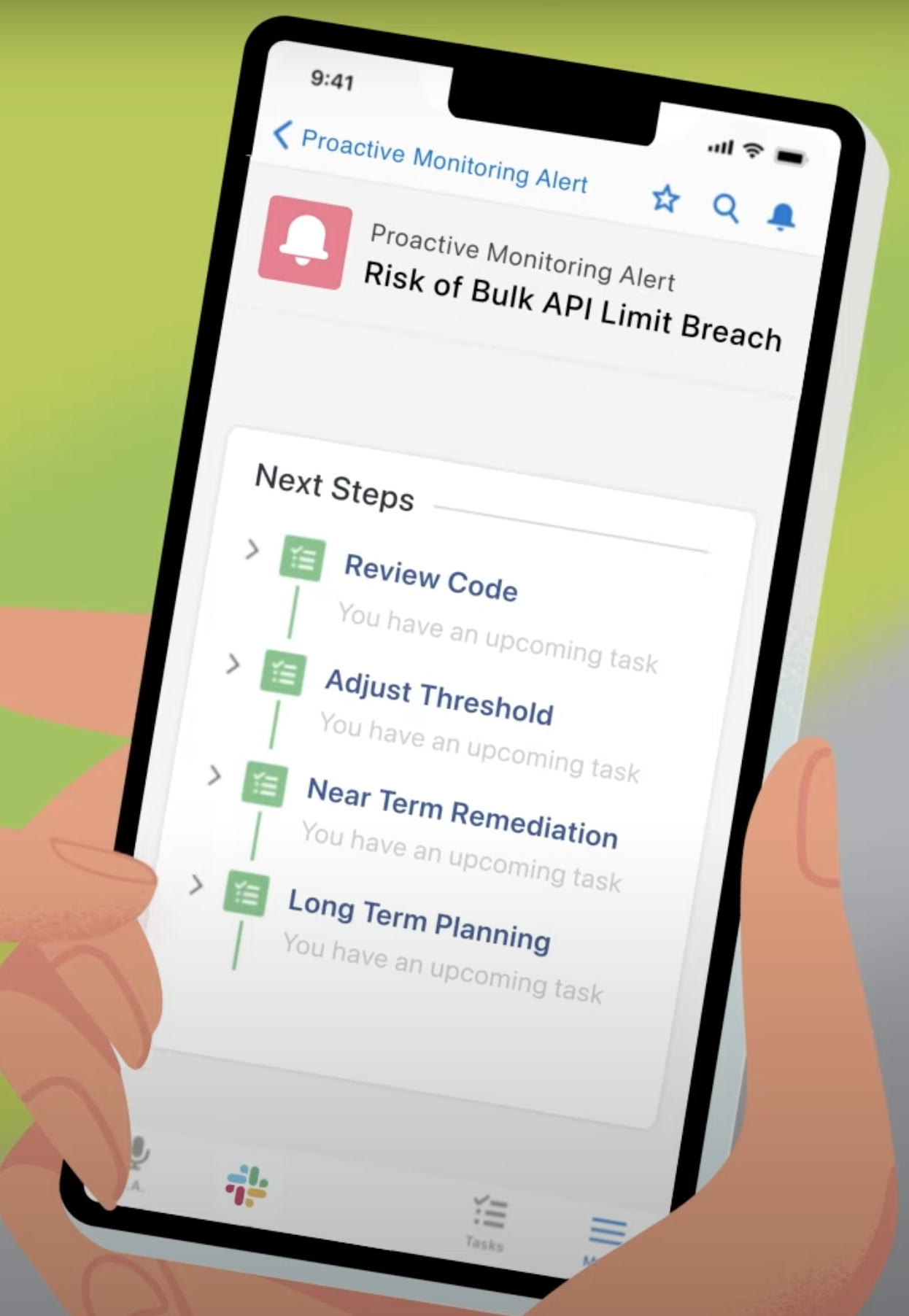

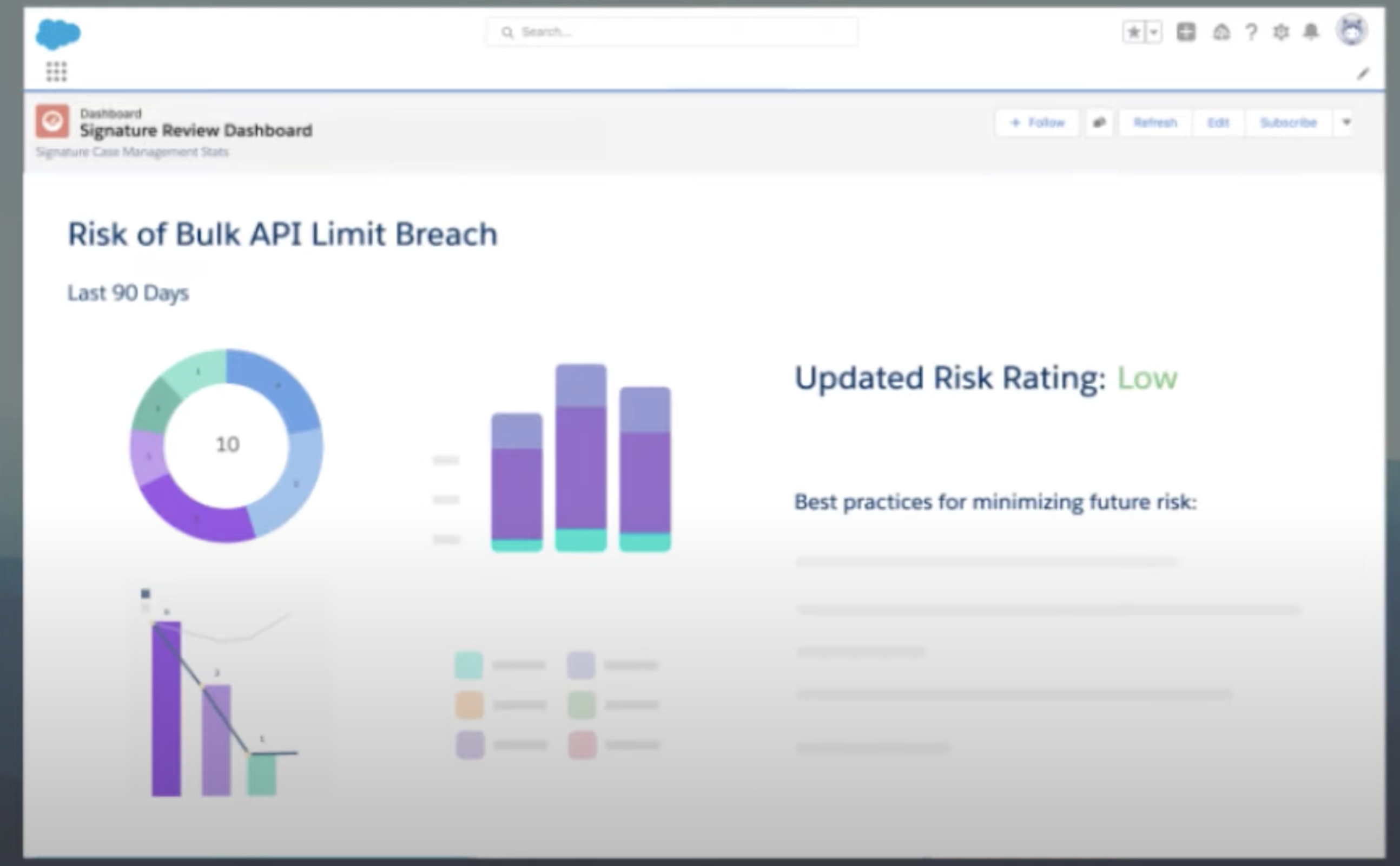

| Feature | Comments |
|---|---|
| Technical Account Manager (TAM) | Designated TAM |
| Personalized Trends and Insights | |
| Key Event Management | |
| 24/7 Proactive Monitoring | Predict and prevent disruptions before they occur. Includes a customized monitoring plan with Smart Alerts to detect potential risks and root causes. |

Continuous 24/7 monitoring

The Salesforce team monitors your production org limits around the clock, using proprietary diagnostic tools and the insights that come from overseeing a broad universe of Salesforce orgs. Continuous 24/7 monitoring of your key Salesforce solutions helps you predict and prevent issues.

Earlier warning of critical issues with Smart Alerts

Salesforce support teams can swing into action more quickly, solving issues faster and reducing the likelihood that issues become severe.

Technical guidance

The Salesforce team provides technical guidance from Salesforce monitoring experts, based on best practices learned from supporting more than 150,000 customers. This guidance can help the customer restore functionality more quickly and reduce the likelihood of future errors and system problems.

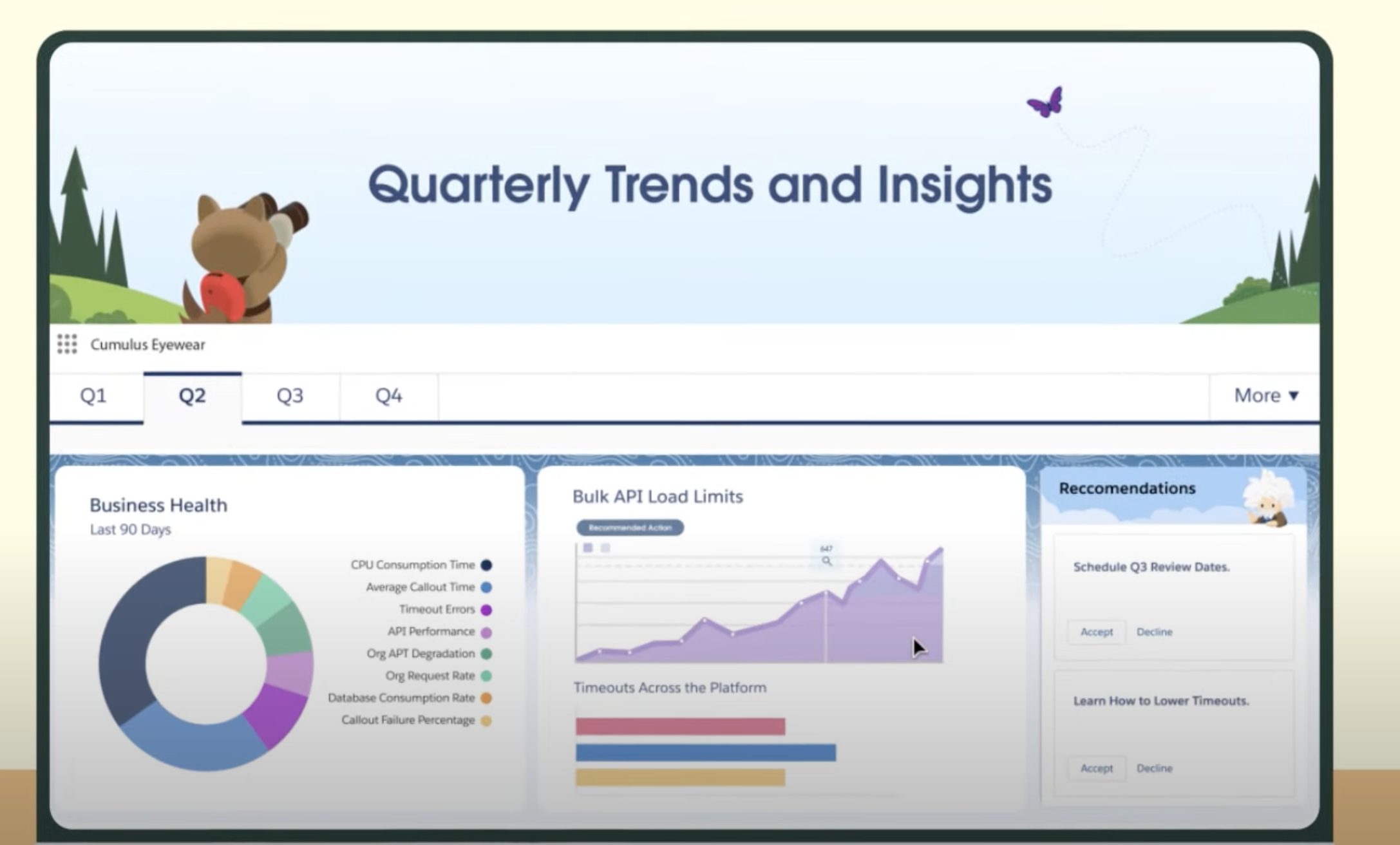

Quarterly trends and reports

The customer receives quarterly trends and reports, as well as an annual health review from the Signature team.

Samples

- The Salesforce team can also initiate emergency measures quickly (such as increasing login limits) to keep your business running smoothly while issues are being addressed.

- The customer chooses the automations and triggered sends to be monitored, and the Salesforce team tracks them 24/7.

- The Salesforce team continuously monitors CPU utilization, another indicator of when and where problems may arise, and can start reviewing custom code right away to identify issues and recommend adjustments. The service does not fix the code, but the team diagnoses the problem and recommends next steps to keep it from happening again.

- Batch processes can slow down the org if best practices are not followed. The Salesforce team can quickly help the customer align rogue batch processes (caused by custom code or third-party vendors) with best practices.

References

Real-Time Event Monitoring

- Monitor and detect standard events in Salesforce in near real-time

- Event data can be stored for auditing or reporting purposes
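Conceptually, streaming events follow a publish/subscribe model. A publisher sends immutable, timestamped event messages on a channel, subscribers to that channel receive them, and events can optionally be stored for auditing.

The toy in-memory model below only illustrates those terms. It is not Salesforce's implementation or API, and the channel name and event type used in the usage are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, List


@dataclass(frozen=True)
class EventMessage:
    """Data about one event; frozen=True mirrors "events are immutable"."""
    event_type: str
    payload: dict
    # Each event is timestamped when it is created
    timestamp: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


class EventChannel:
    """A stream of events: publishers send messages, subscribers receive them."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._subscribers: List[Callable[[EventMessage], None]] = []
        self.stored: List[EventMessage] = []  # optional copies kept for auditing

    def subscribe(self, callback: Callable[[EventMessage], None]) -> None:
        self._subscribers.append(callback)

    def publish(self, message: EventMessage, store: bool = False) -> None:
        # In this toy model, storage is opt-in per message
        if store:
            self.stored.append(message)
        for callback in self._subscribers:
            callback(message)


if __name__ == "__main__":
    # Hypothetical: a security app notified of report downloads
    channel = EventChannel("report-downloads")
    channel.subscribe(lambda m: print("alert:", m.event_type, m.payload))
    channel.publish(EventMessage("ReportDownload", {"user": "alice"}), store=True)
```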

Insights

| Insight |
|---|
| Who viewed what data, and when? |
| Where was data accessed? |
| When does a user change a record using the UI? |
| Who is logging in, and from where? |
| Who in your org is performing actions related to Platform Encryption administration? |
| Which admins logged in as another user, and what actions did they take as that user? |
| How long does a Lightning page take to load? |
| What threats are detected in your org, such as anomalies in how users view or export reports, session hijacking attacks, or credential stuffing attacks? |

Key terms

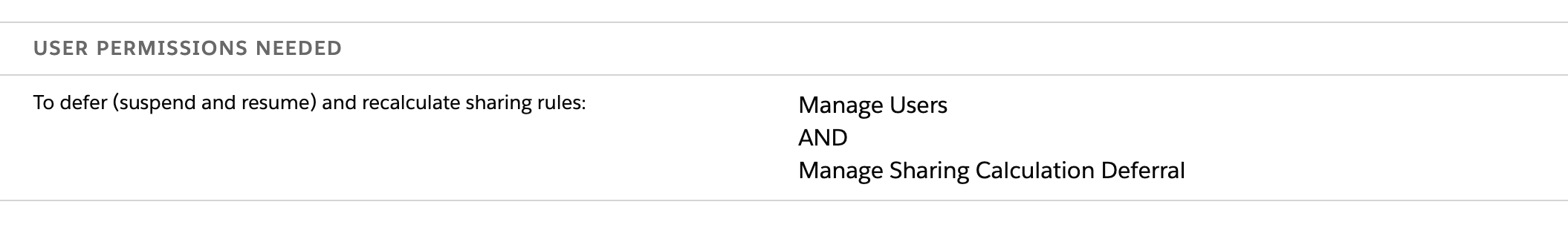

| Term | Definition |
|---|---|
| Event | An event is anything that happens in Salesforce, including user clicks, record state changes, and measuring values. Events are immutable and timestamped. |
| Event Channel | A stream of events on which an event producer sends event messages and event consumers read those messages. |
| Event Subscriber | A subscriber to a channel that receives messages from the channel. For example, a security app is notified of new report downloads. |
| Event Message | A message used to transmit data about the event. |
| Event Publisher | The publisher of an event message over a channel, such as a security and auditing app. |

Setting up - Enable Access to Real-Time Event Monitoring

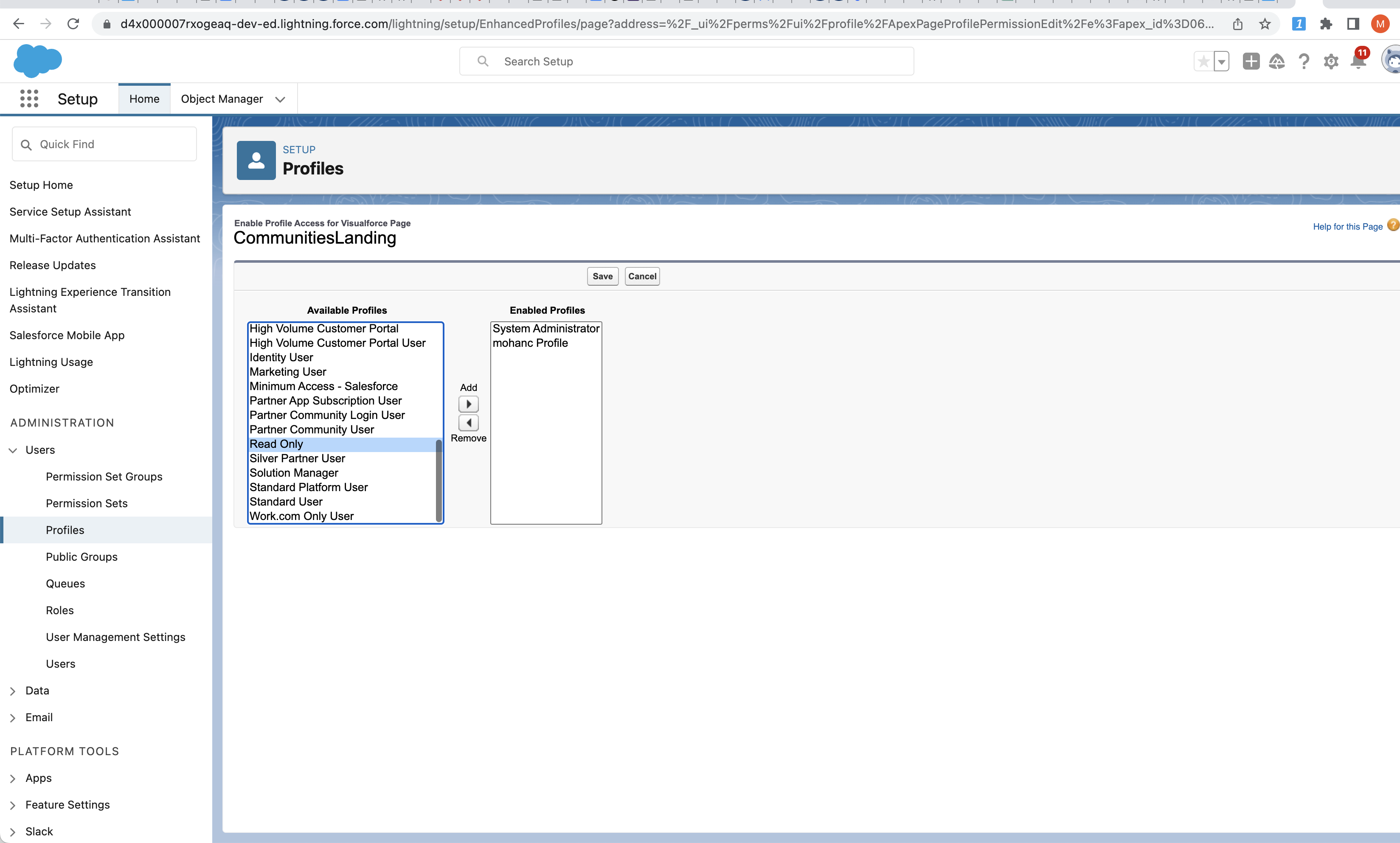

Via Profile or PermissionSet

- View Real-Time Event Monitoring Data
- Customize Application

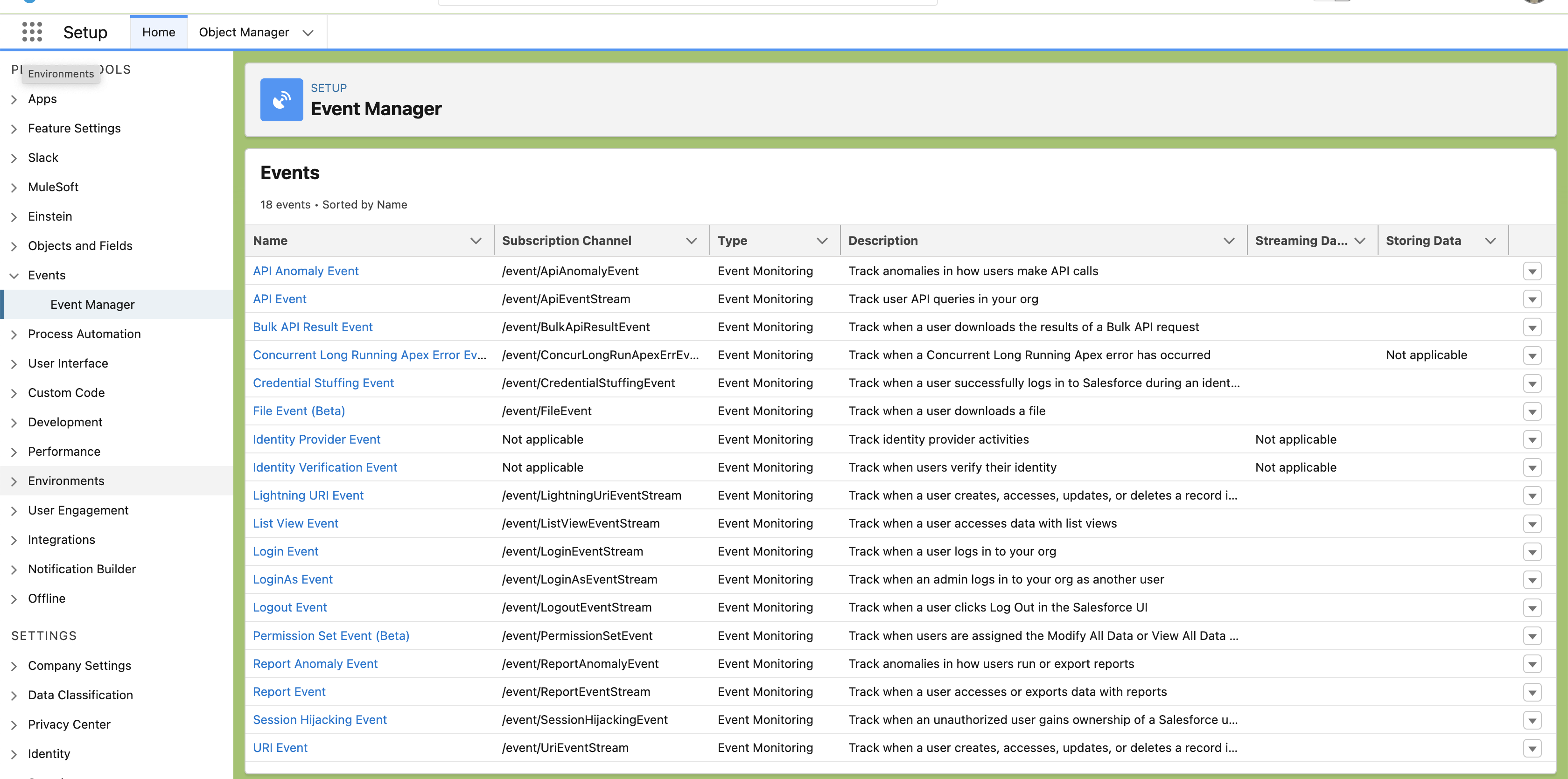

Event Manager

- From Setup, in the Quick Find box, enter Events, then select Event Manager.

- Next to the event you want to enable or disable streaming for, click the dropdown menu.

- Choose whether to enable or disable streaming or storage for the event.

Querying RealTimeEventSettings